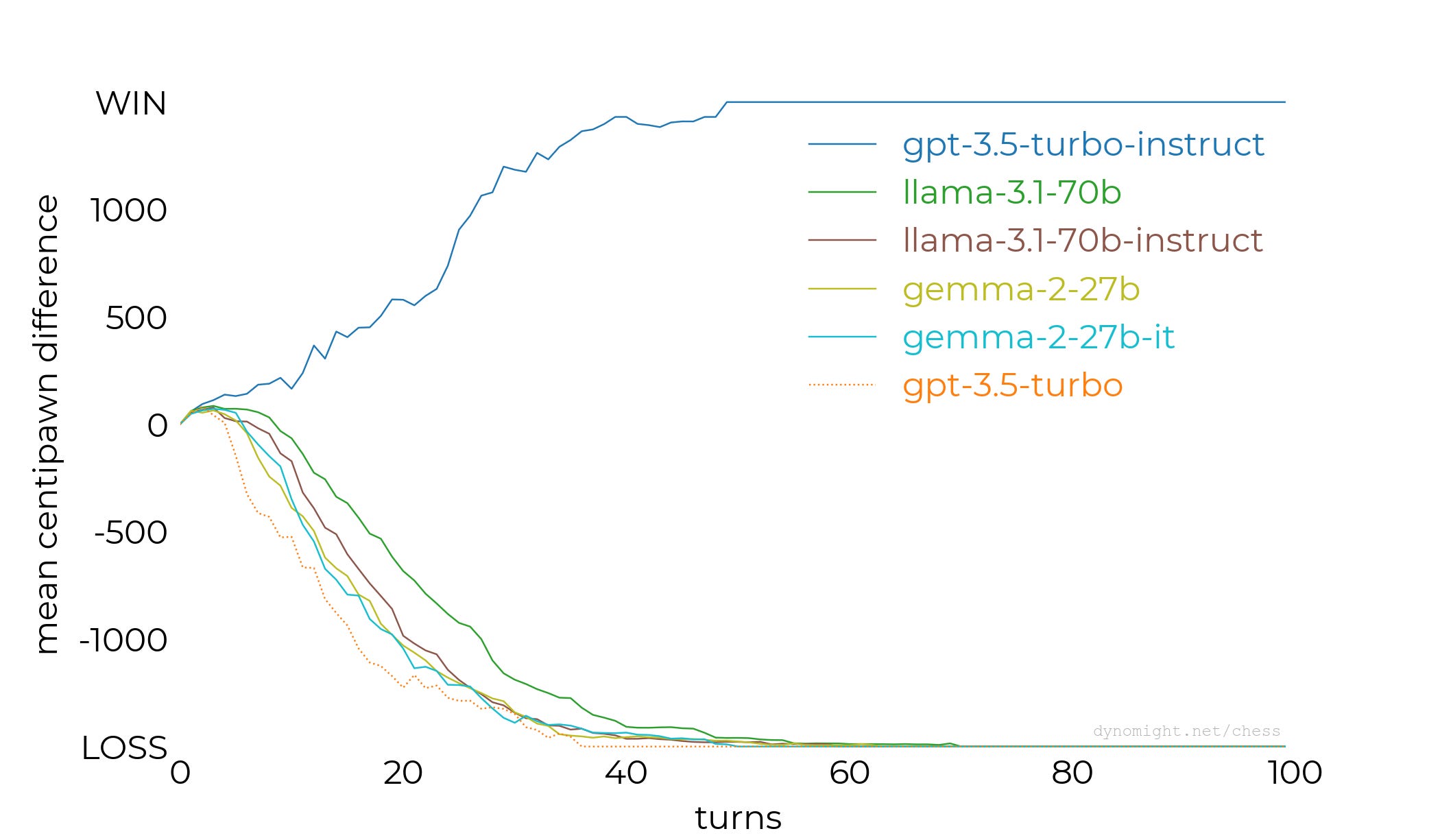

At first glance, trying to play chess against a large language model (LLM) seems like a daft idea, as its weighted nodes have, at most, been trained on some chess-adjacent texts. It has no concept of board state, stratagems, or even what a ‘rook’ or ‘knight’ piece is. This daftness is indeed demonstrated by [Dynomight] in a recent blog post (Substack version), where the Stockfish chess AI is pitted against a range of LLMs, from a small Llama model to GPT-3.5. Although the outcomes (see featured image) are largely as you’d expect, there is one surprise: the gpt-3.5-turbo-instruct model seems quite capable of giving Stockfish a run for its money, albeit on Stockfish’s lower settings.

Each model was given the same query, telling it to be a chess grandmaster, to use standard notation, and to choose its next move. The stark difference between the instruct model and the others calls for investigation. OpenAI describes the instruct model as an ‘InstructGPT 3.5 class model’, which leads us to this page on OpenAI’s site and an associated 2022 paper that describes how InstructGPT is effectively the standard GPT model heavily fine-tuned using human feedback.
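To make the shape of such a query concrete, here is a minimal sketch in Python of how a prompt along those lines might be assembled. The wording of the instruction and the `build_prompt` helper are our own assumptions for illustration, not the exact text or code used in [Dynomight]’s experiment.

```python
def build_prompt(moves):
    """Turn a list of moves in standard algebraic notation into a text prompt.

    Hypothetical helper: the instruction wording below is an assumption,
    not the prompt used in the original experiment.
    """
    header = (
        "You are a chess grandmaster. "
        "Use standard algebraic notation and choose the next move.\n\n"
    )
    # Group the moves into numbered turns: "1. e4 e5 2. Nf3 ..."
    turns = []
    for i in range(0, len(moves), 2):
        turns.append(f"{i // 2 + 1}. " + " ".join(moves[i:i + 2]))
    body = " ".join(turns)
    # If White is to move, end with the next move number so the model
    # only has to continue the line with a single move.
    if len(moves) % 2 == 0:
        body = (body + " " if body else "") + f"{len(moves) // 2 + 1}."
    return header + body


if __name__ == "__main__":
    print(build_prompt(["e4", "e5", "Nf3"]))
```

The resulting string would then be sent to whichever model is being tested, and the reply checked for a legal move before it is passed on to the chess engine.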

Ultimately, it seems that instruct models do better with instruction-based queries because they have been extensively tuned to follow them. A [Hacker News] thread from last year discusses the Turbo vs Instruct versions of GPT-3.5, and also uses chess as a comparison point. Meanwhile, ChatGPT is a sibling of InstructGPT, per OpenAI, using Reinforcement Learning from Human Feedback (RLHF), with ChatGPT users presumably now providing most of said feedback.

OpenAI notes repeatedly that neither InstructGPT nor ChatGPT provides correct responses all the time. However, within the limited problem space of chess, it would seem that the instruct model is good enough not to bore a dedicated chess AI into digital oblivion.

If you want a digital chess partner, try your Postscript printer. Chess software doesn’t have to be as large as an AI model.