Today, Intel announced the Arc B580 and B570 ‘Battlemage’ GPUs, in what was perhaps one of the worst-kept secrets of the graphics card industry. While Intel won’t comment on future products, these are the first two of what we expect will eventually be a full range of discrete GPUs for the Battlemage family, designed for both desktop and mobile markets. The Arc B580 with 12GB of VRAM debuts at $249, while the B570 comes equipped with 10GB of VRAM and retails for $219.

| Graphics Card | Launch Price | Xe-Cores | VRAM Capacity (GiB) | GPU Cores (Shaders) | XMX Cores | Ray Tracing Cores | Graphics Clock (MHz) | Max Boost Clock (MHz) | VRAM Speed (Gbps) | VRAM Bus Width (bits) | Render Output Units | Texture Mapping Units | Peak TFLOPS FP32 | Peak XMX FP16 TFLOPS (INT8 TOPS) | Memory Bandwidth (GBps) | Interface | TBP (watts) | Launch Date |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Arc B580 | $249 | 20 | 12 | 2560 | 160 | 20 | 2670 | 2850 | 19 | 192 | 80 | 160 | 14.6 | 117 (233) | 456 | PCIe 4.0 x8 | 190 | Dec 13, 2024 |
| Arc B570 | $219 | 18 | 10 | 2304 | 144 | 18 | 2500 | 2750 | 19 | 160 | 80? | 144 | 12.7 | 101 (203) | 380 | PCIe 4.0 x8 | 150 | Jan 16, 2025 |

We’ve known the Battlemage name officially for a long time, and in fact, we know the next two GPU families Intel plans to release in the coming years: Celestial and Druid. But this is the first time Intel has officially spilled the beans on specifications, pricing, features, and more. Most of the details line up with recent leaks, but Intel also gave some concrete performance expectations. Let’s start there, as that’s probably the most interesting aspect of the announcement.

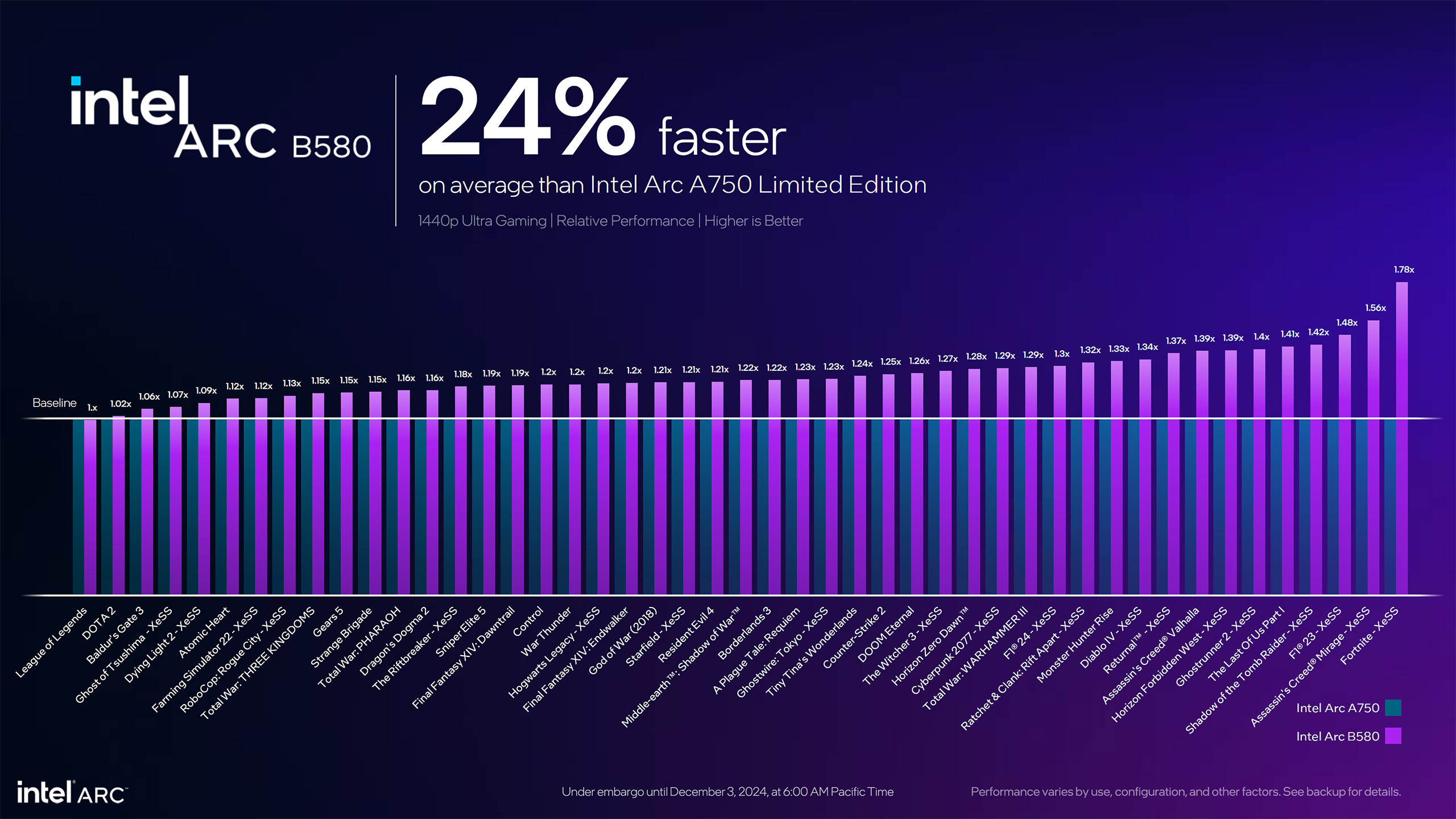

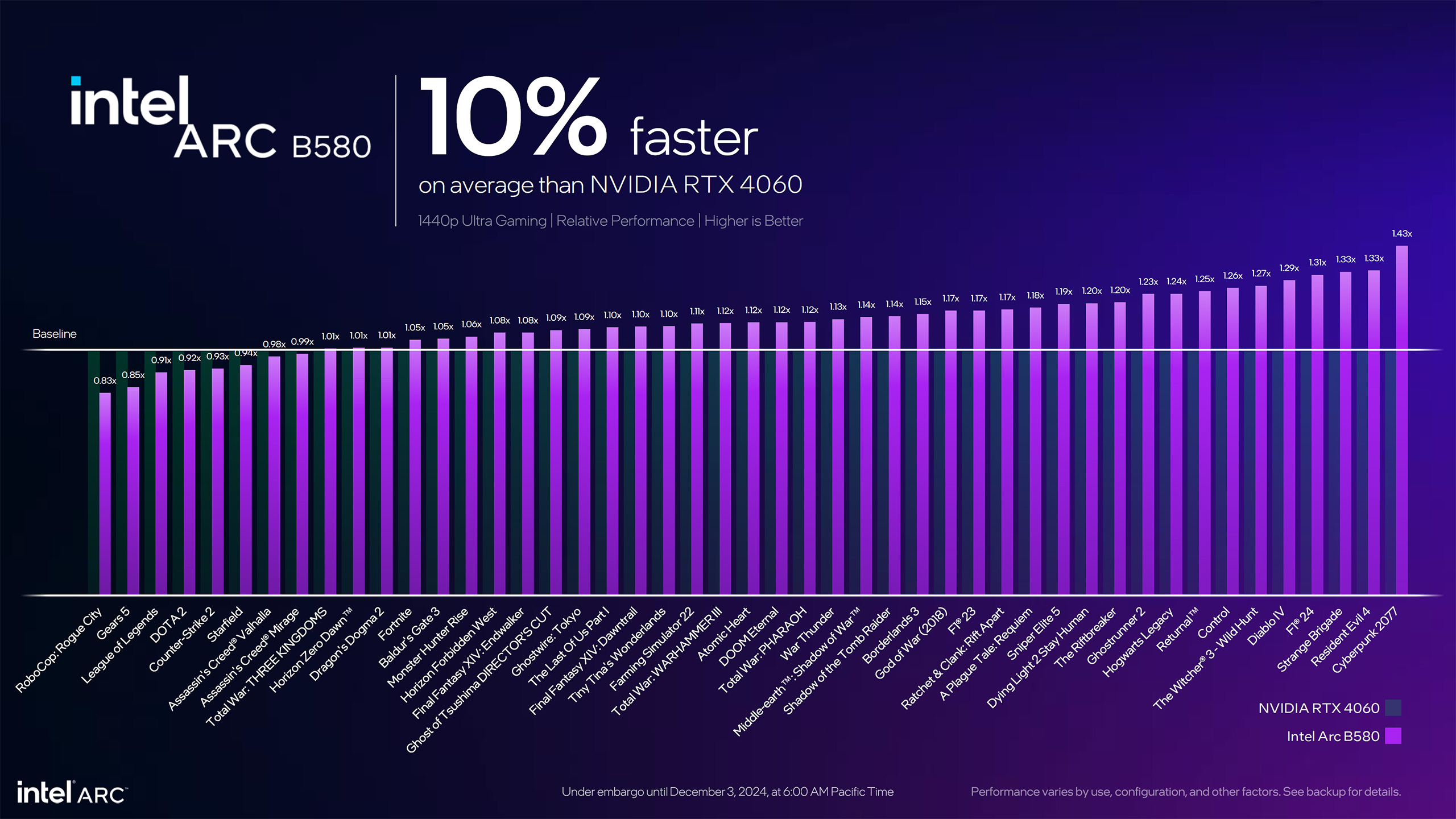

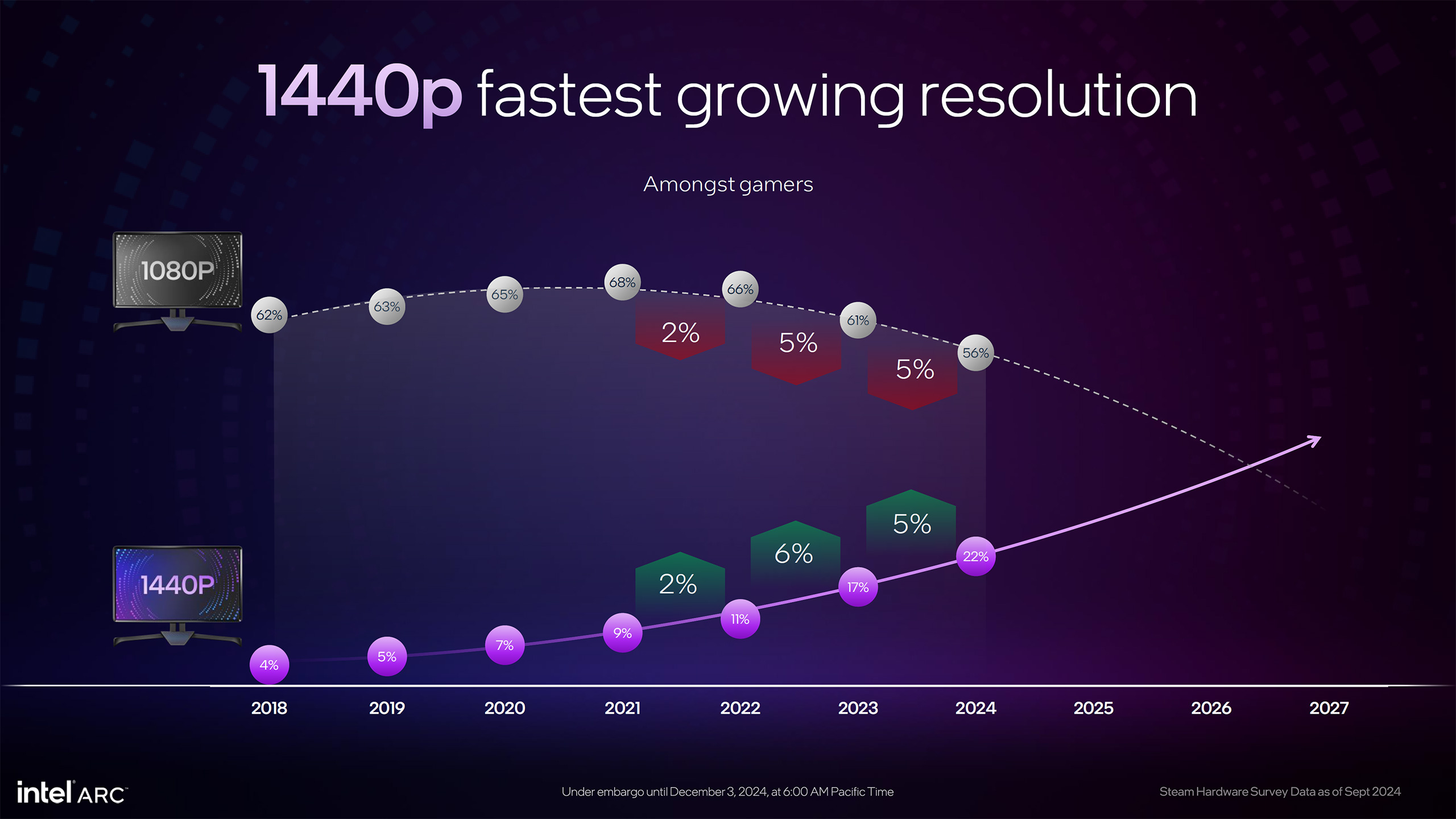

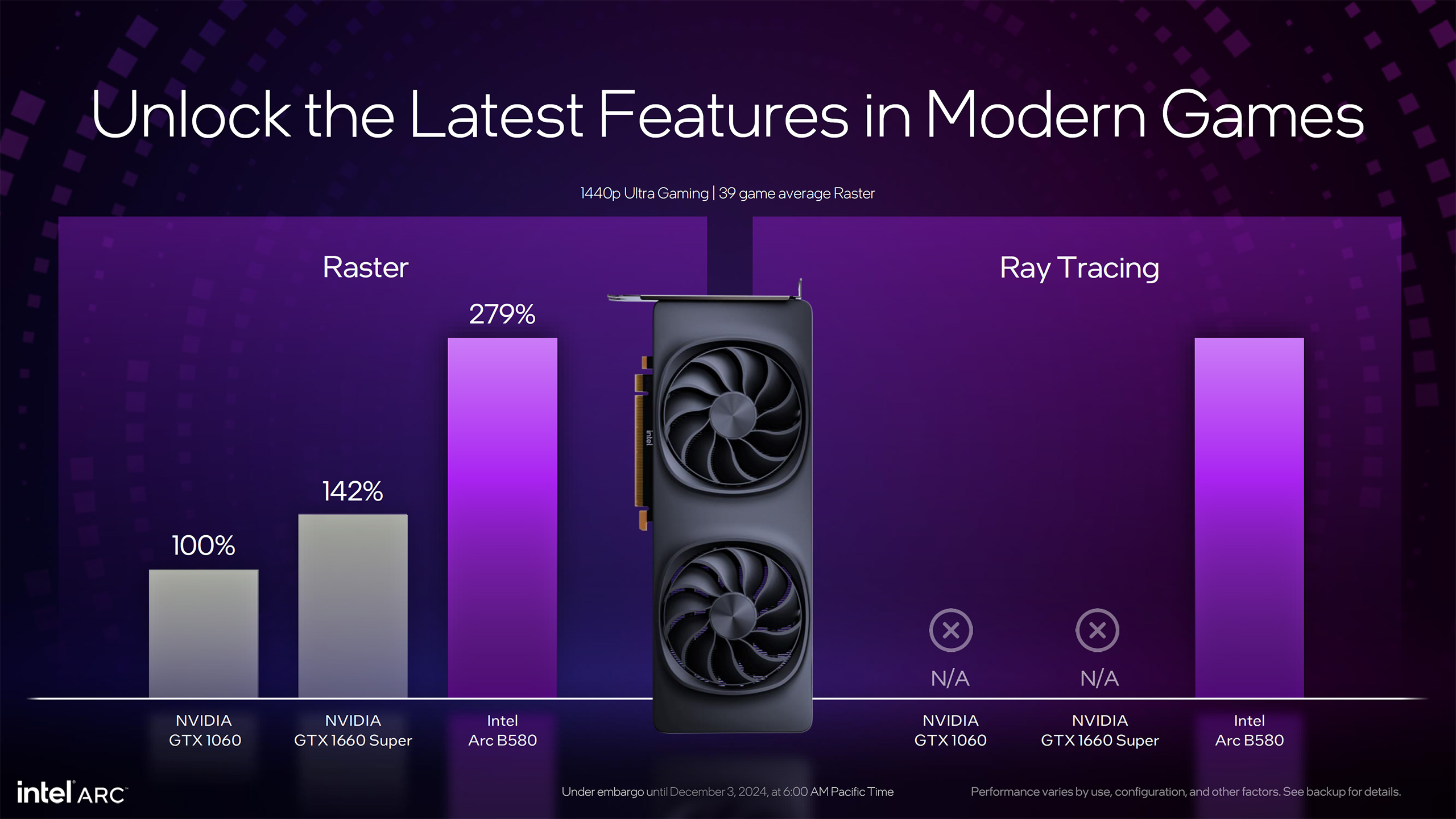

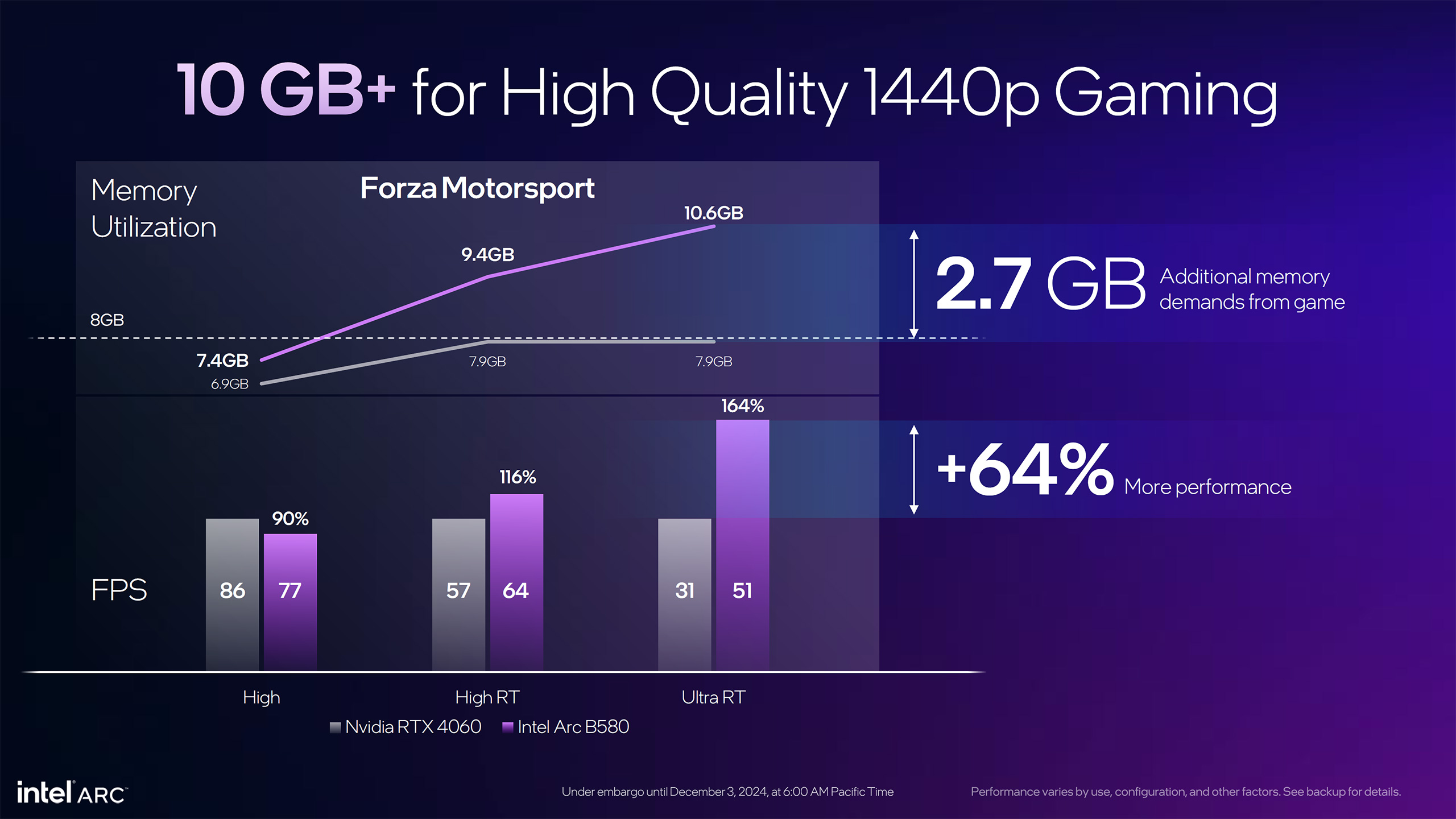

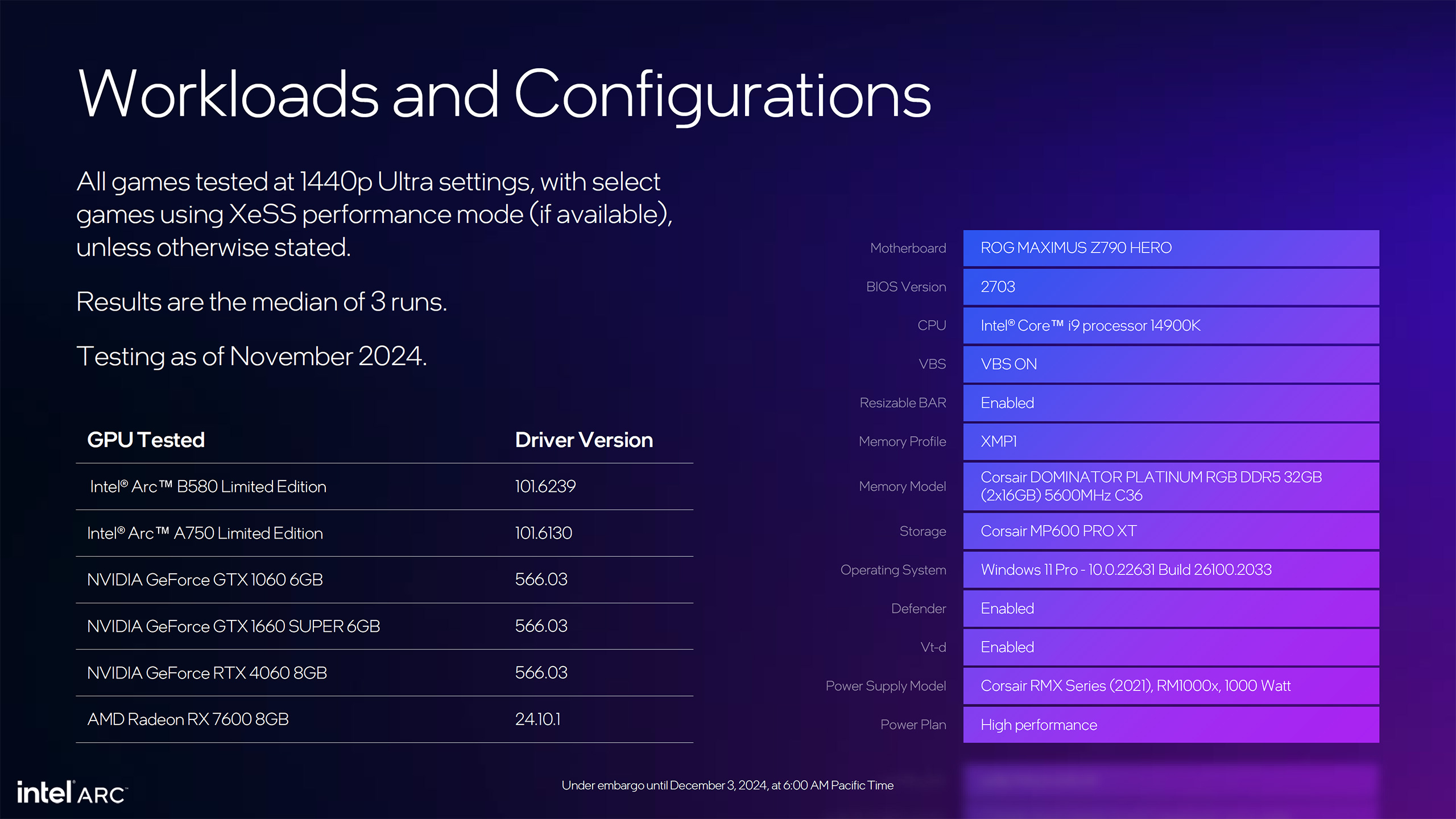

Intel made two important comparisons with the Arc B580 announcement. First is how it stacks up against the existing Arc A750, and second is how it compares with the Nvidia RTX 4060. The testing was done at 1440p, as Intel says that’s the target resolution for its new GPU. Nvidia said the RTX 4060 targeted 1080p gaming, though we’d argue that was far more about saddling the GPU with only 8GB of VRAM than it was about the raw compute available.

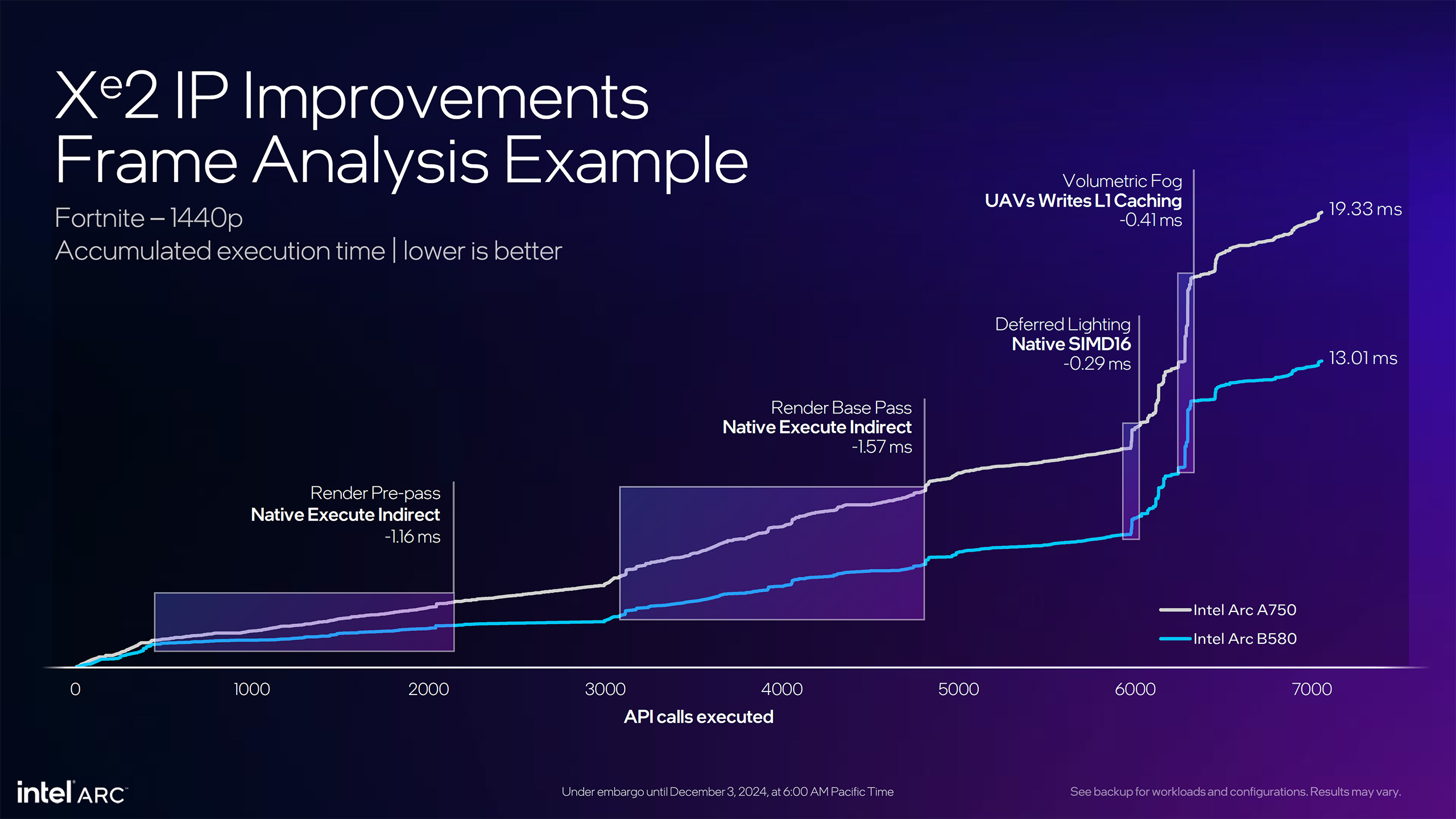

Starting with its own A750, Intel shows a performance uplift of 24% on average across an extensive 47-game test suite. Twenty of the games have XeSS support, which is enabled in the testing, but since it’s Intel vs. Intel, that shouldn’t have a major influence on the results. The performance improvement ranges from 0% — League of Legends and DOTA 2 are almost completely CPU limited — to as much as 78% in Fortnite, with 31 games showing an 18–42 percent improvement.

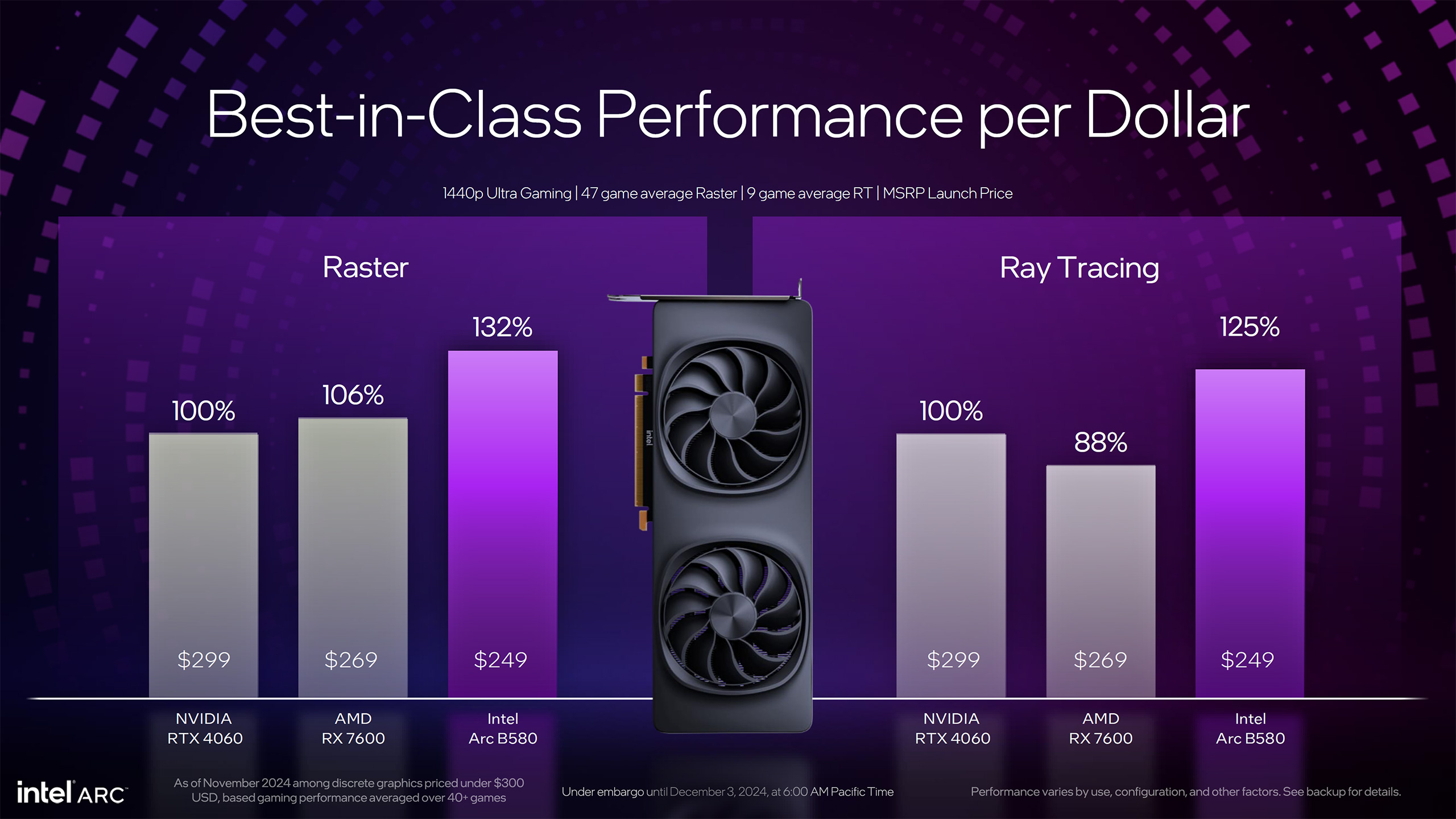

Against the RTX 4060, Intel shows a 10% performance advantage across the same 47-game test suite, but this time without enabling XeSS or DLSS upscaling. We feel that’s a fair comparison, since upscaling algorithms differ in how they work and the resulting image quality. There’s a lot more variability with the RTX 4060 comparison as well, with a delta of -17% to as much as +43%. Six of the games show a minor to modest performance loss, while ten of the games show more than a 20% improvement.

It’s worth noting here that our own GPU benchmarks hierarchy puts the RTX 4060 14% ahead of the Arc A750 (at 1440p ultra testing), with the Arc A770 16GB 11% ahead of the A750. By that metric, Intel’s numbers match up with our own. That means it’s a reasonably safe bet that the B580 will offer performance roughly on par with the RX 7600 XT (23% faster than the A750), or alternatively 2019’s Nvidia RTX 2080 Super.
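As a rough sanity check on that claim, the relative figures quoted above can be chained together. This is only a sketch: the variable names are ours, and the two sets of numbers come from different test suites, so close agreement is the most we should expect.

```python
# Relative performance figures quoted in the article.
intel_b580_vs_a750 = 1.24      # Intel: B580 is 24% faster than the A750
intel_b580_vs_rtx4060 = 1.10   # Intel: B580 is 10% faster than the RTX 4060
our_rtx4060_vs_a750 = 1.14     # our hierarchy: RTX 4060 is 14% ahead of the A750

# What Intel's two claims imply for the RTX 4060 relative to the A750.
implied_rtx4060_vs_a750 = intel_b580_vs_a750 / intel_b580_vs_rtx4060

# What our hierarchy plus Intel's RTX 4060 claim imply for the B580 vs. the A750.
implied_b580_vs_a750 = our_rtx4060_vs_a750 * intel_b580_vs_rtx4060

print(f"RTX 4060 vs A750 implied by Intel: {implied_rtx4060_vs_a750:.3f}")
print(f"B580 vs A750 implied by our data:  {implied_b580_vs_a750:.3f}")
```

Intel's numbers imply the RTX 4060 sits about 13% ahead of the A750, against the 14% we measured, and our data implies a B580 uplift of about 25% versus Intel's stated 24%.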

Earlier rumors suggested the B580 would match the RTX 4060 Ti, but our testing puts that GPU 41% ahead of the A750. Of course, the test suites do matter, and we’re presently in the process of revamping all of our GPU testing for this launch and into 2025. Quite a few of the newer games will likely show more of a performance hit on 8GB cards like the base 4060 Ti.

Perhaps just as important as the performance is the intended pricing. We’ve already spoiled that with the headline, but now, with performance data in tow, the $249 launch price looks even more impressive. That puts the Arc B580 into direct price competition with AMD’s RX 7600 8GB card, while Nvidia doesn’t have any current generation parts below the 4060 — you’d have to turn to the previous generation RTX 30-series, which doesn’t make much sense as a comparison point these days as everything besides the RTX 3050 isn’t really in stock anymore.

But again, we have to exercise at least some caution here. The RTX 4060 launched in mid-2023, about 18 months ago. It’s due for replacement in mid-2025. Whether that will happen or not remains to be seen, but beating up on what was arguably one of the more questionable 40-series GPUs isn’t exactly difficult. And that brings us to the specifications for Intel’s new Arc B580 and B570.

| Graphics Card | Arc B580 | Arc B570 |
|---|---|---|
| Architecture | BMG-G21 | BMG-G21 |
| Process Technology | TSMC N5 | TSMC N5 |
| Transistors (Billion) | 19.6 | 19.6 |
| Die size (mm^2) | 272 | 272 |
| Xe-Cores | 20 | 18 |
| GPU Cores (Shaders) | 2560 | 2304 |
| XMX Cores | 160 | 144 |
| Ray Tracing Cores | 20 | 18 |
| Graphics Clock (MHz) | 2670 | 2500 |
| Max Boost Clock (MHz) | 2850 | 2750 |
| VRAM Speed (Gbps) | 19 | 19 |
| VRAM Capacity (GiB) | 12 | 10 |
| VRAM Bus Width (bits) | 192 | 160 |
| L2 (L1) Cache Size MiB | 18 (5) | 13.5 (4.5) |
| Render Output Units | 80 | 80? |
| Texture Mapping Units | 160 | 144 |
| Peak TFLOPS FP32 | 14.6 | 12.7 |
| Peak XMX FP16 TFLOPS (INT8 TOPS) | 117 (233) | 101 (203) |
| Memory Bandwidth (GBps) | 456 | 380 |
| Interface | PCIe 4.0 x8 | PCIe 4.0 x8 |
| TBP (watts) | 190 | 150 |
| Launch Date | Dec 13, 2024 | Jan 16, 2025 |
| Launch Price | $249 | $219 |

Battlemage will have higher GPU clocks, up to 2670 MHz on the B580 and 2500 MHz on the B570. Except, as with Alchemist, these are more approximate clocks and the real-world clocks are likely to be higher. Arc A770, for example, had a stated 2100 MHz graphics clock, a conservative estimate based on running a bunch of workloads. The maximum boost clock was 2400 MHz; in our testing, we saw average clocks of 2330–2370 MHz.

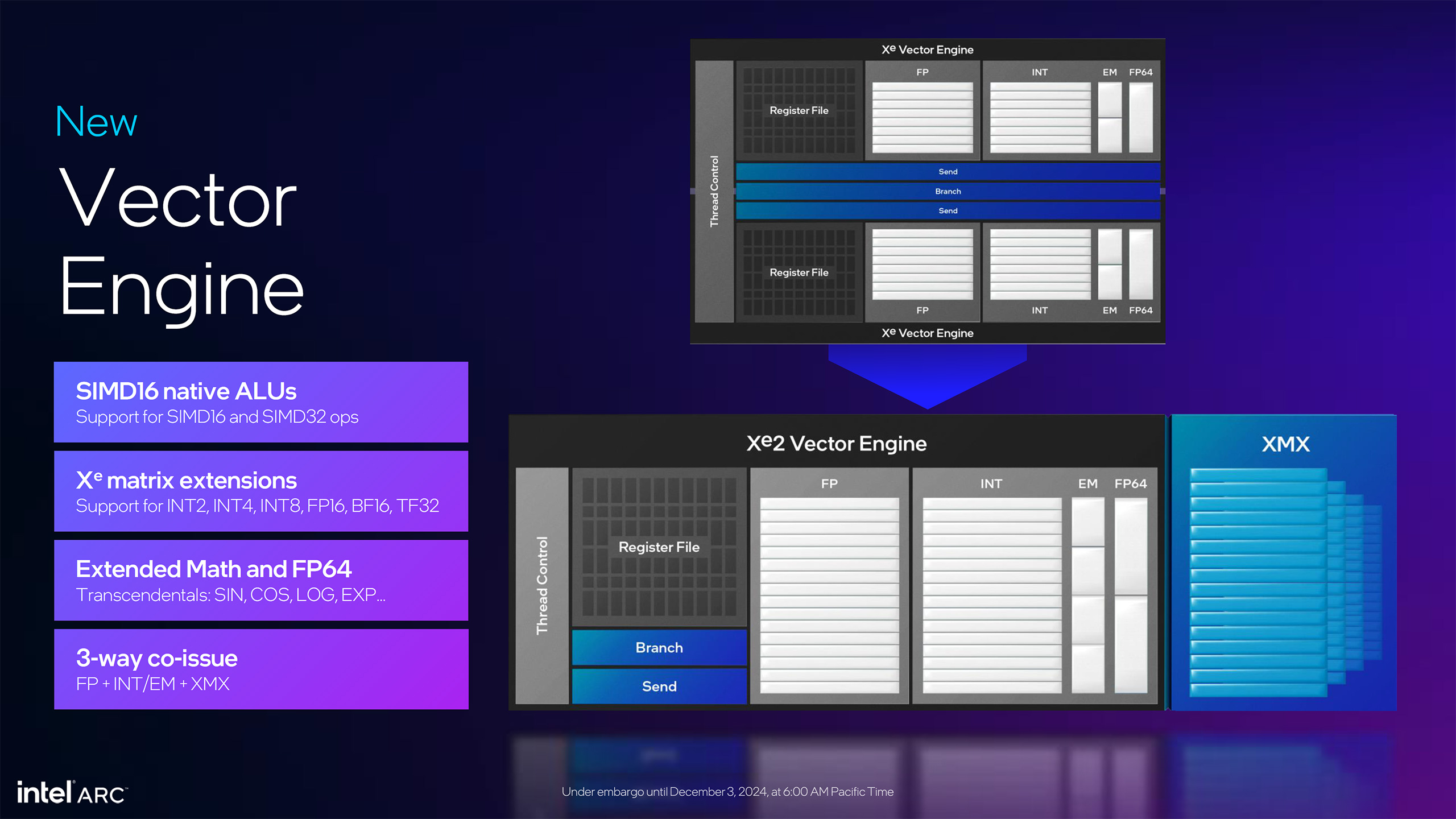

Each Xe-core has eight vector units and eight XMX units. That’s half the count of Alchemist, except each unit is twice as wide — 512 bits for the new vector engines and 2048 bits on the XMX units. In practical terms, that means the total number of vector or matrix instructions executed per Xe-core remains the same: 256 FP32 vector operations (using FMA, fused multiply add), and 2048 FP16 or 4096 INT8 operations on the XMX units.
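The per-Xe-core figures, and the peak throughput numbers in the spec table, can be reproduced with a few lines of arithmetic. The sketch below assumes each lane performs one fused multiply-add per clock, counted as two operations, for both the vector and XMX units; that assumption is ours, though it does reproduce the quoted numbers. The function names are illustrative.

```python
def ops_per_clock(units: int, width_bits: int, element_bits: int) -> int:
    """Operations per clock for one Xe-core, counting an FMA as two ops."""
    lanes = width_bits // element_bits
    return units * lanes * 2


def peak_tera_ops(xe_cores: int, ops_per_core: int, boost_mhz: int) -> float:
    """Peak throughput in tera-operations per second at the max boost clock."""
    return xe_cores * ops_per_core * boost_mhz * 1e6 / 1e12


fp32_per_core = ops_per_clock(units=8, width_bits=512, element_bits=32)
fp16_per_core = ops_per_clock(units=8, width_bits=2048, element_bits=16)
int8_per_core = ops_per_clock(units=8, width_bits=2048, element_bits=8)
print(fp32_per_core, fp16_per_core, int8_per_core)  # 256 2048 4096

# (Xe-cores, max boost clock in MHz) from the spec table.
cards = {"Arc B580": (20, 2850), "Arc B570": (18, 2750)}
for name, (xe_cores, boost_mhz) in cards.items():
    fp32 = peak_tera_ops(xe_cores, fp32_per_core, boost_mhz)
    fp16 = peak_tera_ops(xe_cores, fp16_per_core, boost_mhz)
    int8 = peak_tera_ops(xe_cores, int8_per_core, boost_mhz)
    print(f"{name}: {fp32:.1f} FP32 TFLOPS, {fp16:.0f} FP16 TFLOPS, {int8:.0f} INT8 TOPS")
```

Real-world clocks vary with the workload, so these are theoretical ceilings rather than sustained rates.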

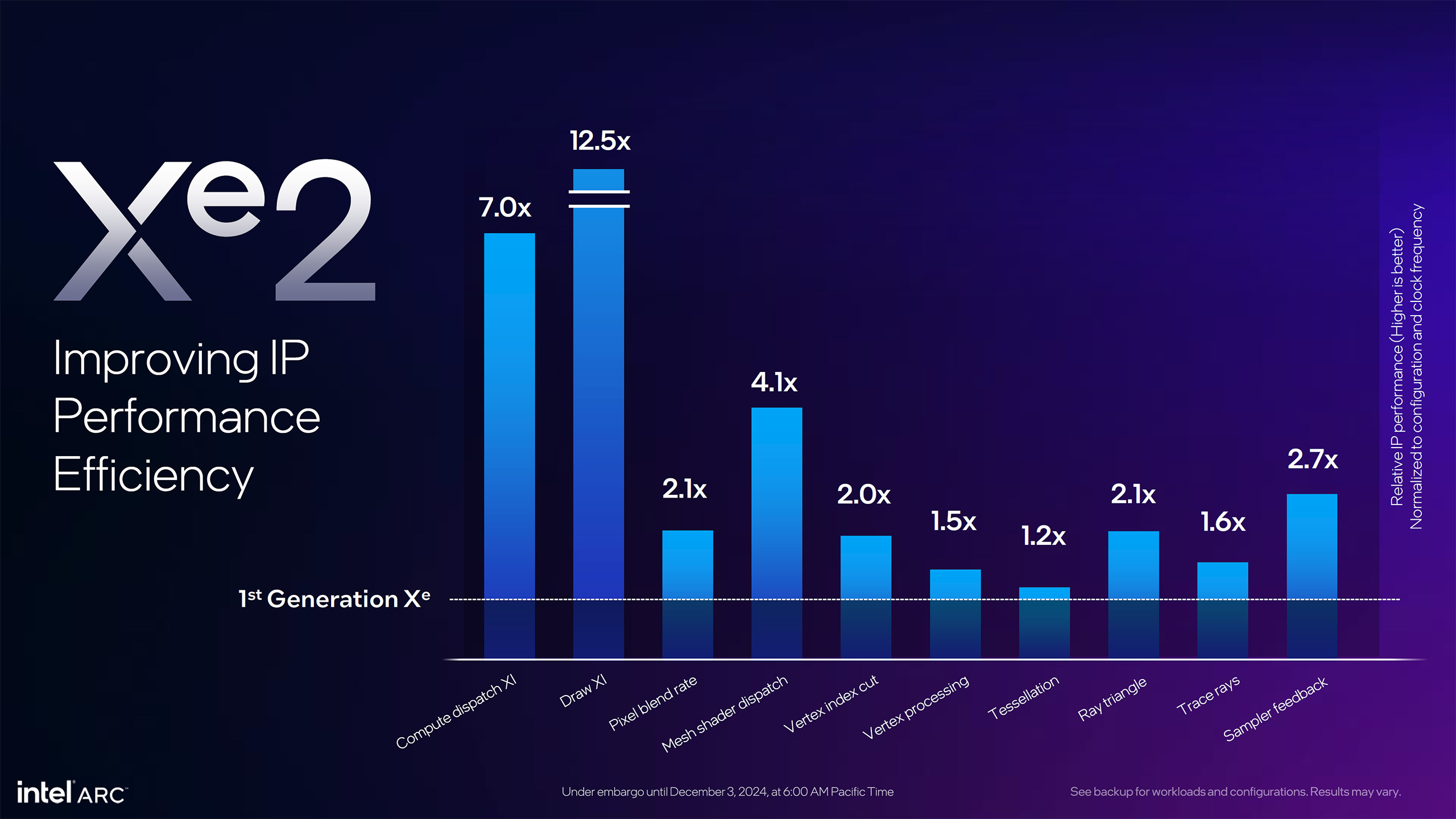

Boost clocks multiplied by core counts give us the total theoretical compute, but here’s where things get interesting. If you compare the raw specs for the new Battlemage GPUs with the raw specs for the existing Alchemist GPUs, it looks like Intel took a step back in certain aspects of the design. For example, the A750 has 17.2 TFLOPS of FP32 compute, while the new B580 ‘only’ offers 14.6 TFLOPS. This is where architectural changes play a role.

One of the big changes is a switch from native SIMD32 execution units to SIMD16 units — SIMD being “single instruction multiple data” with the number being the concurrent pieces of data that are operated on. With SIMD32 units, Alchemist had to work on chunks of 32 values (typically from pixels), while SIMD16 only needed 16 values. Intel says this improves GPU utilization, as it’s easier to fill 16 execution slots than 32. The net result is that Battlemage should deliver much better performance per theoretical TFLOPS than Alchemist.
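A toy model illustrates why narrower SIMD units are easier to fill. It is our own simplification, not Intel's scheduler: it assumes a batch of work items is issued in fixed-width groups and that any unfilled lanes in the last group are wasted. Real GPUs also deal with divergence, latency hiding, and scheduling effects that this ignores.

```python
import math


def lane_utilization(active_items: int, simd_width: int) -> float:
    """Fraction of SIMD lanes doing useful work for a batch of items."""
    issues = math.ceil(active_items / simd_width)  # groups needed to cover the batch
    return active_items / (issues * simd_width)


for items in (8, 16, 40):
    u32 = lane_utilization(items, 32)
    u16 = lane_utilization(items, 16)
    print(f"{items:>2} items: SIMD32 {u32:.1%}, SIMD16 {u16:.1%}")
```

In this model SIMD16 never does worse than SIMD32, and it does noticeably better whenever the batch size is not a multiple of 32.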

We saw this when we looked at Lunar Lake’s integrated graphics performance. The new Xe2 architecture in Lunar Lake has up to 4.0 TFLOPS of FP32 compute while the older Xe architecture in Meteor Lake has 4.6 TFLOPS, but Lunar Lake ultimately ended up being 42% faster at 720p and 32% faster at 1080p. Other factors are at play, including memory bandwidth, but given Intel’s claimed performance, it looks like Battlemage will deliver a decent generational performance uplift.
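One way to put a number on "performance per theoretical TFLOPS" is to divide the measured speedup by the ratio of peak compute. The sketch below uses only figures quoted in this article; the helper name is ours, and the B580 line relies on Intel's claimed 24% uplift rather than our own testing.

```python
def perf_per_tflops_gain(speedup: float, new_tflops: float, old_tflops: float) -> float:
    """How much more performance the new part extracts from each theoretical TFLOPS."""
    return speedup / (new_tflops / old_tflops)


# Lunar Lake (Xe2, 4.0 TFLOPS) vs. Meteor Lake (Xe, 4.6 TFLOPS).
lunar_720p = perf_per_tflops_gain(1.42, 4.0, 4.6)
lunar_1080p = perf_per_tflops_gain(1.32, 4.0, 4.6)

# Arc B580 (14.6 TFLOPS) vs. Arc A750 (17.2 TFLOPS), using Intel's claimed uplift.
b580_vs_a750 = perf_per_tflops_gain(1.24, 14.6, 17.2)

print(f"Lunar Lake 720p:  {lunar_720p:.2f}x per TFLOPS")
print(f"Lunar Lake 1080p: {lunar_1080p:.2f}x per TFLOPS")
print(f"B580 vs A750:     {b580_vs_a750:.2f}x per TFLOPS")
```

By this measure the discrete Battlemage part would deliver roughly 1.46x the performance per theoretical TFLOPS, in the same ballpark as the integrated results.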

In terms of the memory subsystem, there are some noteworthy changes. The B580 will use a 192-bit interface with 12GB of GDDR6 memory, while the B570 cuts that down to a 160-bit interface with 10GB of GDDR6 memory. In either case, the memory runs at 19 Gbps effective clocks. That results in a slight reduction in total bandwidth relative to the A580 and A750 (both 512 GB/s), with the A770 at 560 GB/s. The good news is that these new budget / mainstream GPUs will both have more than 8GB of VRAM, which has become a limiting factor on quite a few newer games.
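The bandwidth figures follow directly from bus width and per-pin data rate. A minimal sketch, with an illustrative function name:

```python
def memory_bandwidth_gbps(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Peak memory bandwidth in GB/s: bits per transfer times per-pin rate, over 8."""
    return bus_width_bits * data_rate_gbps / 8


b580_bw = memory_bandwidth_gbps(192, 19)
b570_bw = memory_bandwidth_gbps(160, 19)
a750_bw = 512  # GB/s, as quoted above for the A580 and A750

print(f"B580: {b580_bw:.0f} GB/s ({1 - b580_bw / a750_bw:.1%} below the A750)")
print(f"B570: {b570_bw:.0f} GB/s ({1 - b570_bw / a750_bw:.1%} below the A750)")
```

The B580 ends up about 11% short of the A750 on raw bandwidth, which the larger caches discussed later may partly offset.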

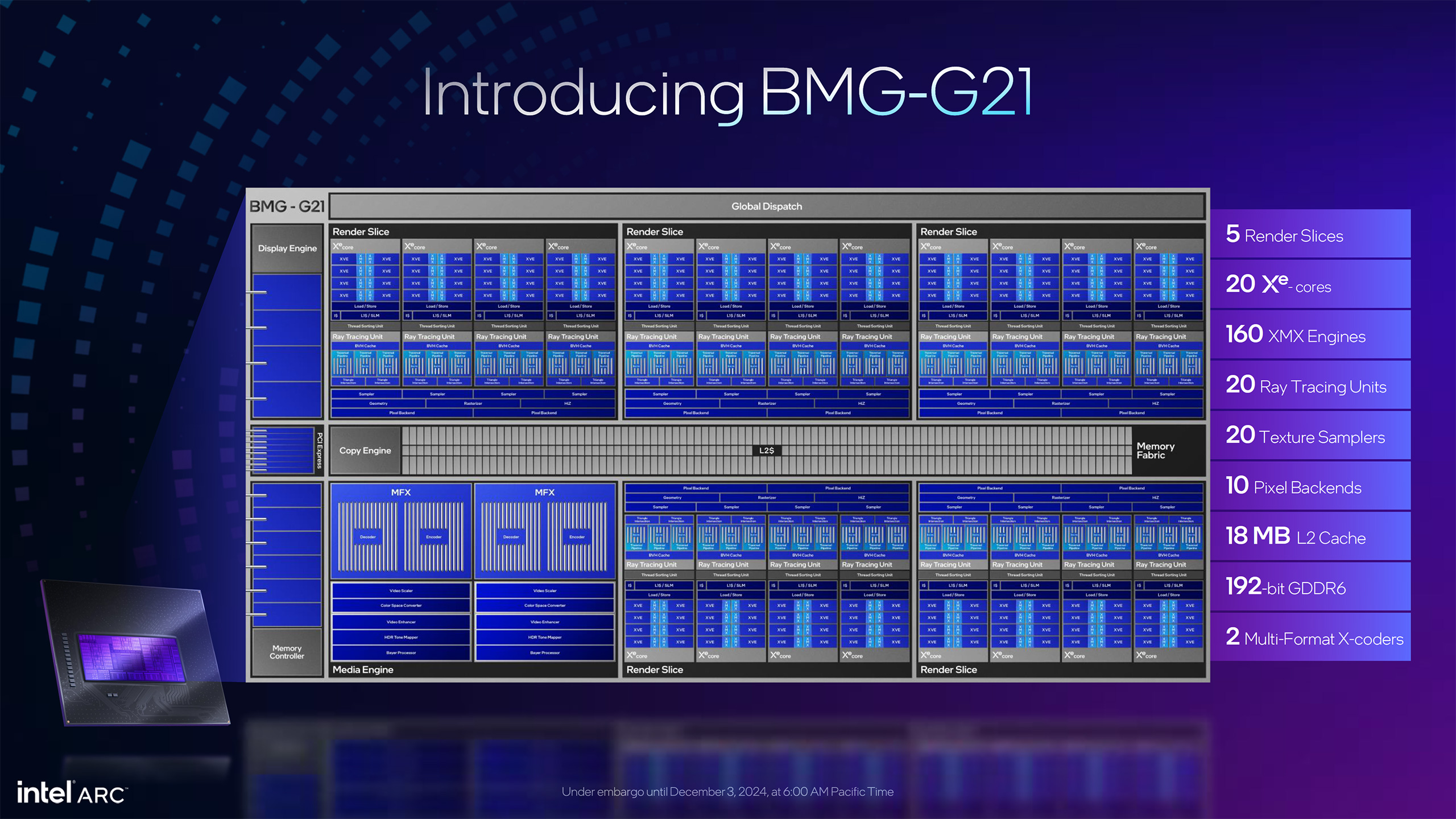

Battlemage will also be more power efficient, as the total graphics power is 190W on the B580 compared to 225W on the A750. That means Battlemage delivers 24% more performance while using 16% less power, a net potential improvement of nearly 50% (depending on real-world power use, which we haven’t measured yet). Intel also claims a 70% improvement in performance per Xe-core, based on the architectural upgrades, which we’ll discuss more in the architecture section.
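The "nearly 50%" figure comes from dividing the performance ratio by the power ratio. The sketch below assumes both cards actually draw their rated board power, which we have not yet measured.

```python
b580_tbp_watts = 190
a750_tbp_watts = 225
performance_ratio = 1.24  # Intel's claimed B580 uplift over the A750

power_ratio = b580_tbp_watts / a750_tbp_watts
perf_per_watt_gain = performance_ratio / power_ratio

print(f"Power reduction:  {1 - power_ratio:.1%}")
print(f"Perf/watt uplift: {perf_per_watt_gain - 1:.1%}")
```

That works out to roughly 16% less power and about 47% better performance per watt on paper.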

Part of the power efficiency improvement comes from the move to TSMC’s N5 node versus the N6 node used on Alchemist. N5 offers substantial density and power benefits, and that’s reflected in the total die size as well. The ACM-G10 GPU used in the A770 has 21.7 billion transistors in a 406 mm^2 die, while BMG-G21 has 19.6 billion transistors in a 272 mm^2 die. That’s an overall density of 72.1 MT/mm^2 for Battlemage compared to 53.4 MT/mm^2 on Alchemist.
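The density figures are simply transistor count divided by die area, as this short check shows (the function name is ours):

```python
def density_mt_per_mm2(transistors_billion: float, die_area_mm2: float) -> float:
    """Transistor density in millions of transistors per square millimeter."""
    return transistors_billion * 1000 / die_area_mm2


bmg_g21 = density_mt_per_mm2(19.6, 272)
acm_g10 = density_mt_per_mm2(21.7, 406)

print(f"BMG-G21: {bmg_g21:.1f} MT/mm^2")
print(f"ACM-G10: {acm_g10:.1f} MT/mm^2")
print(f"Density improvement: {bmg_g21 / acm_g10 - 1:.0%}")
```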

Note, however, that Nvidia’s various Ada Lovelace GPUs have overall transistor densities of 109–125 MT/mm^2 on TSMC’s custom 4N process. AMD’s RDNA 3 GPUs also have densities of 140–152 MT/mm^2 on the main GCD that uses TSMC’s N5 node. That means Intel still isn’t matching its competition on transistor density or die size, though AMD muddies the waters with RDNA 3 by using GPU chiplets.

And finally, Intel will use a PCIe 4.0 x8 interface on B580 and B570. Given their budget-mainstream nature, that’s probably not going to be a major issue, and AMD and Nvidia have both opted for a narrower x8 interface on lower-tier parts. Presumably, Intel determined that there was no need for a wider x16 interface, and likewise that there wasn’t enough benefit to moving to PCIe 5.0, which tends to require shorter trace lengths and more power.

With the core specifications out of the way, let’s dig deeper into the various architectural upgrades. The first Arc GPUs marked Intel’s return to the dedicated GPU space after being absent for over two decades, with the Intel DG1 with Xe Graphics as a limited release precursor to pave the way. Intel has been doing integrated graphics for a very long time, but it’s a fundamentally different way of doing things.

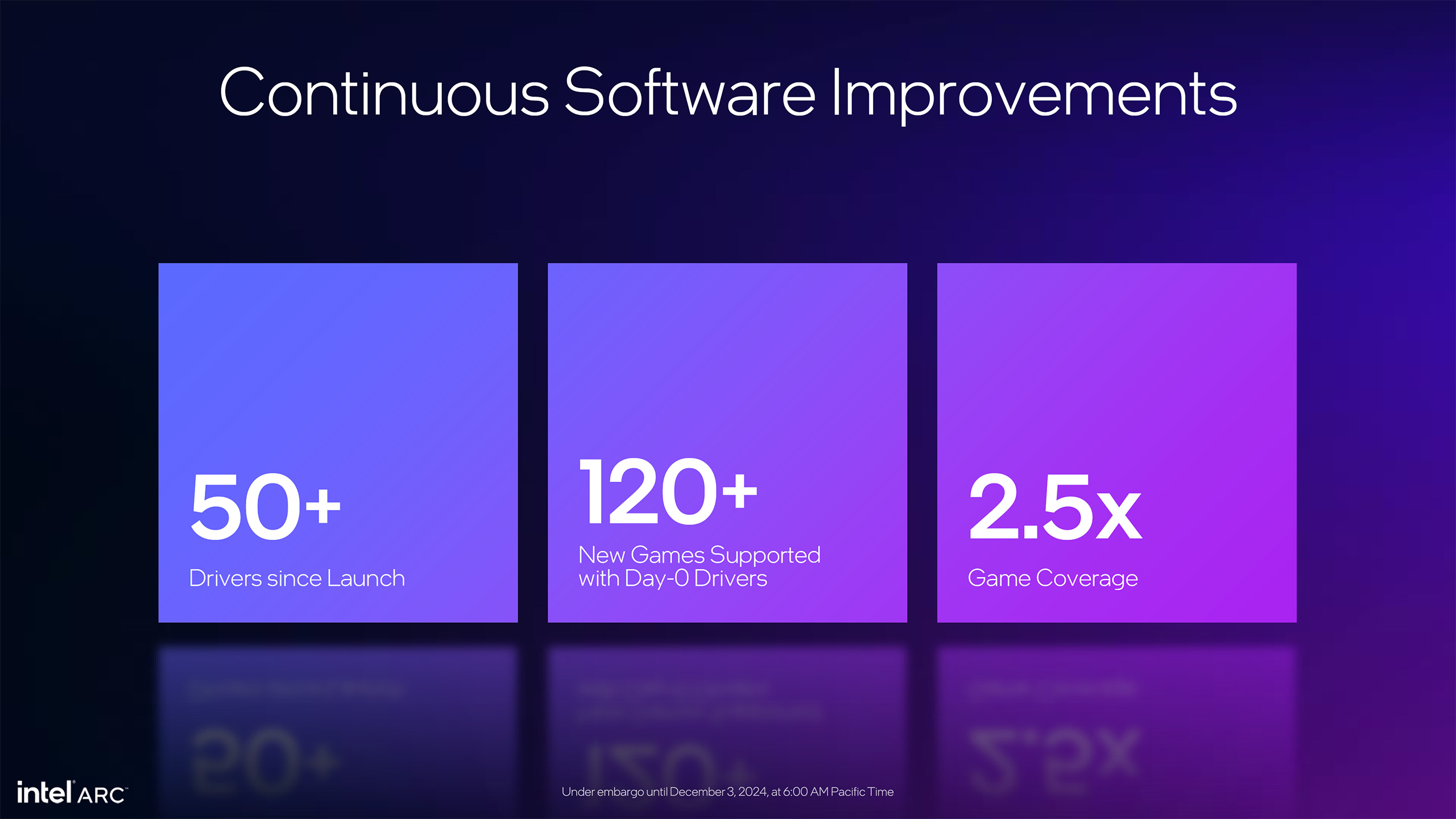

Intel Arc Alchemist was the first real attempt at scaling up the base architecture to significantly higher power and performance. And that came with a lot of growing pains, both in terms of the hardware and the software and drivers. Battlemage gets to take everything Intel learned from the prior generation, incorporating changes that can dramatically improve certain aspects of performance. Intel’s graphics team set out to increase GPU core utilization, improve the distribution of workloads, and reduce software overhead.

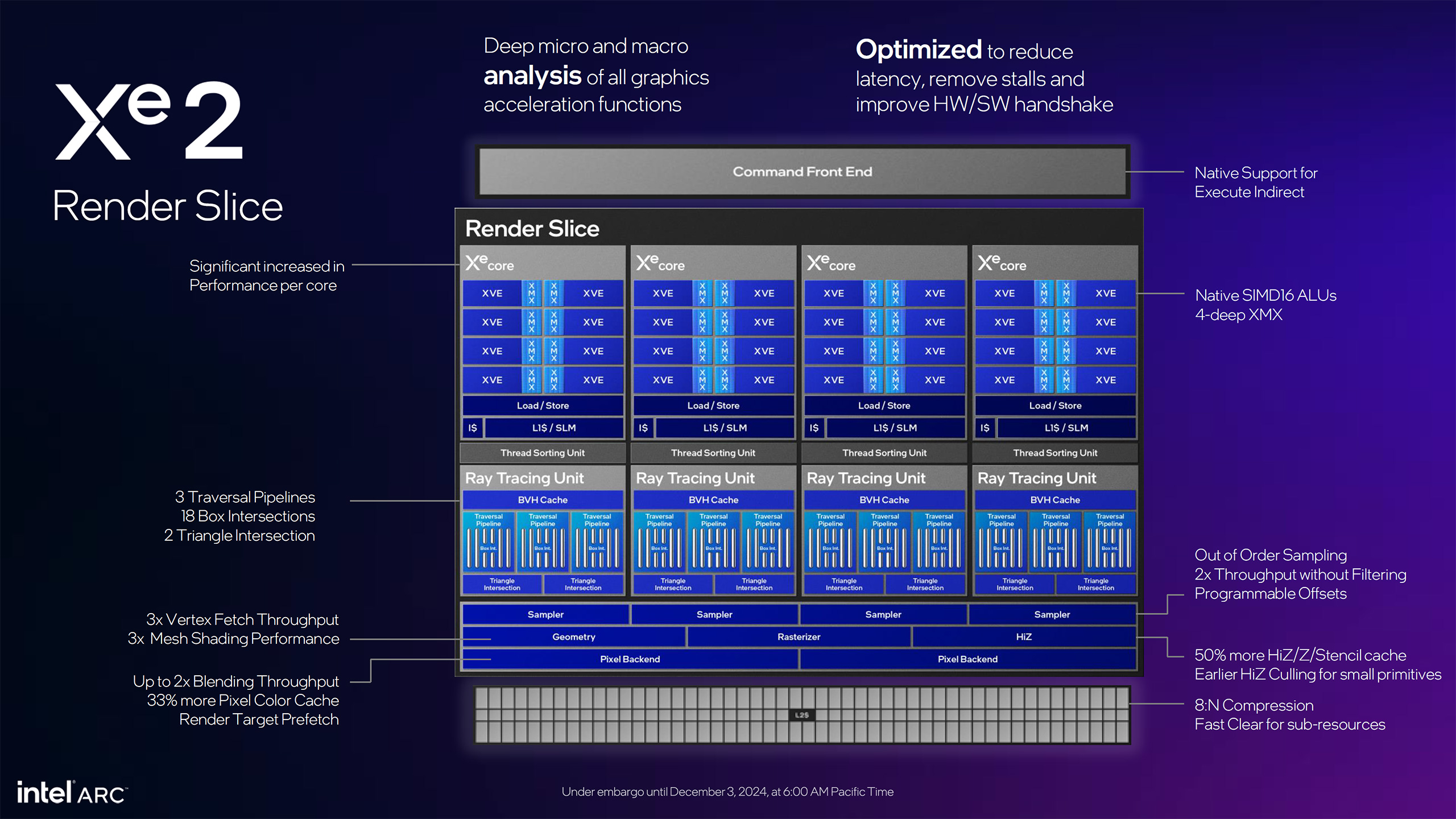

The fifth slide in the above set gives a high-level overview of all the changes. Intel added native support for Execute Indirect, significantly improving performance for certain tasks. We already mentioned the change from SIMD32 to SIMD16 ALUs (arithmetic logic units). Vertex and mesh shading performance per render slice is three times higher compared to Alchemist, and there are other improvements in the Z/stencil cache, earlier culling of primitives, and texture sampling.

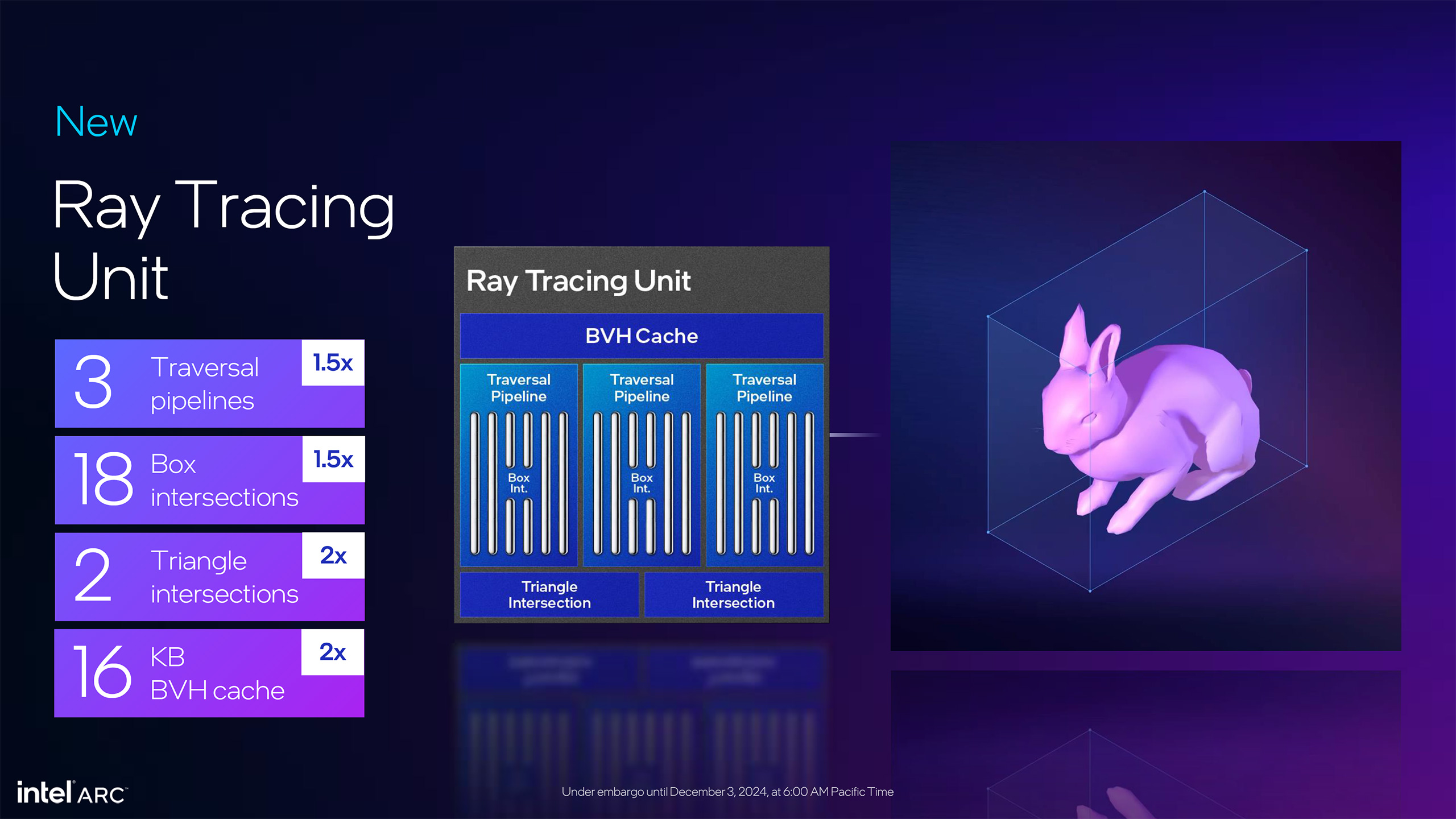

The ray tracing units also see some major upgrades, with each now having three traversal pipelines, the ability to compute 18 box intersections per cycle, and two triangle intersections. By way of reference, Alchemist had two BVH traversal pipelines and could do 12 box intersections and one triangle intersection per cycle. That means the ray tracing performance of each Battlemage RT unit is 50% higher on box intersections, with twice as many ray triangle intersections. There’s also a 16KB dedicated BVH cache in Battlemage, twice the size of the BVH cache in Alchemist.
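Tabulating the per-unit figures makes the generational ratios explicit. These are per-RT-unit, per-cycle numbers taken from the paragraph above; they say nothing about clock speeds or unit counts, so they are not a prediction of overall ray tracing performance.

```python
alchemist_rt_unit = {
    "traversal_pipelines": 2,
    "box_intersections_per_cycle": 12,
    "triangle_intersections_per_cycle": 1,
}
battlemage_rt_unit = {
    "traversal_pipelines": 3,
    "box_intersections_per_cycle": 18,
    "triangle_intersections_per_cycle": 2,
}

# Ratio of Battlemage to Alchemist for each per-unit capability.
uplift = {key: battlemage_rt_unit[key] / alchemist_rt_unit[key] for key in alchemist_rt_unit}

for key, ratio in uplift.items():
    print(f"{key}: {ratio:.1f}x")
```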

Battlemage has updates to the caching hierarchy for the memory subsystem as well. Each Xe-core comes with a shared 256KB L1/SLM cache, 33% larger than Alchemist’s 192KB shared L1/SLM. The L2 cache gets a bump as well, though how much of a bump varies by the chosen comparison point. BMG-G21 has up to 18MB of L2 cache, while ACM-G10 had up to 16MB of L2 cache. However, the A580 cut that down to 8MB, and presumably, any future GPU — like BMG-G20 for B770/B750 — would increase the amount of L2 cache. What that means in terms of effective memory bandwidth remains to be seen.

Most supported number formats remain the same as Alchemist, with INT8, INT4, FP16, and BF16 support. New to Battlemage are native INT2 and TF32 support. INT2 can double the throughput again for very small integers, while TF32 (tensor float 32) looks to provide a better option for throughput and precision relative to FP16 and FP32. It uses a 19-bit format with a sign bit, an 8-bit exponent, and a 10-bit mantissa (the fraction portion of the number). The net result is that it has the same dynamic range as FP32 with less precision, but it also needs less memory bandwidth. TF32 has proven effective for certain AI workloads.
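To get a feel for what TF32 keeps and discards, the format can be emulated in software by clearing the low 13 mantissa bits of an FP32 value, leaving the sign, the 8-bit exponent, and 10 mantissa bits. This is only an illustration: it truncates rather than rounds, and it makes no claim about how Intel's XMX hardware handles rounding. The helper name `to_tf32` is ours.

```python
import struct


def to_tf32(x: float) -> float:
    """Emulate TF32 by truncating an FP32 value to 10 mantissa bits."""
    bits = struct.unpack("<I", struct.pack("<f", x))[0]  # FP32 bit pattern
    bits &= 0xFFFFE000  # keep sign (1) + exponent (8) + top 10 mantissa bits
    return struct.unpack("<f", struct.pack("<I", bits))[0]


# Precision: steps smaller than 2**-10 (relative) are lost.
print(to_tf32(1.0 + 2**-10) == 1.0 + 2**-10)  # True: representable in 10 bits
print(to_tf32(1.0 + 2**-11) == 1.0)           # True: truncated away

# Range: values far beyond FP16's maximum of 65504 still fit.
print(to_tf32(1e38))
```

The last line shows the practical appeal: FP16-like precision without FP16's narrow range.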

Battlemage now supports 3-way instruction co-issue, so it can independently issue one floating-point, one integer/extended math, and one XMX instruction each cycle. Alchemist also had instruction co-issue support and seemed to have the same 3-way co-issue, but in our briefing, Intel indicated that Battlemage is more robust in this area.

The full BMG-G21 design has five render slices, each with four Xe-cores. That gives 160 vector and XMX engines and 20 ray tracing units and texture samplers. It also has 10 pixel backends, each capable of handling eight render outputs. Rumors are that Intel has a larger BMG-G10 GPU in the works as well, which will scale up the number of render slices and the memory interface. Will it top out at eight render slices and 32 Xe-cores like Alchemist? That seems likely, though there’s no official word on other Battlemage GPUs at present.
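The unit counts for the full chip fall out of the slice layout, as this small tally shows. The variable names are ours, and the per-Xe-core engine count is the figure given earlier in the article.

```python
render_slices = 5
xe_cores_per_slice = 4
engines_per_xe_core = 8      # eight vector engines and eight XMX engines per Xe-core
pixel_backends = 10
outputs_per_backend = 8

xe_cores = render_slices * xe_cores_per_slice
vector_engines = xe_cores * engines_per_xe_core
render_outputs = pixel_backends * outputs_per_backend

print(f"Xe-cores: {xe_cores}")
print(f"Vector (and XMX) engines: {vector_engines}")
print(f"Render outputs: {render_outputs}")
```

These match the 20 Xe-cores, 160 XMX cores, and 80 render output units listed for the B580 in the spec table.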

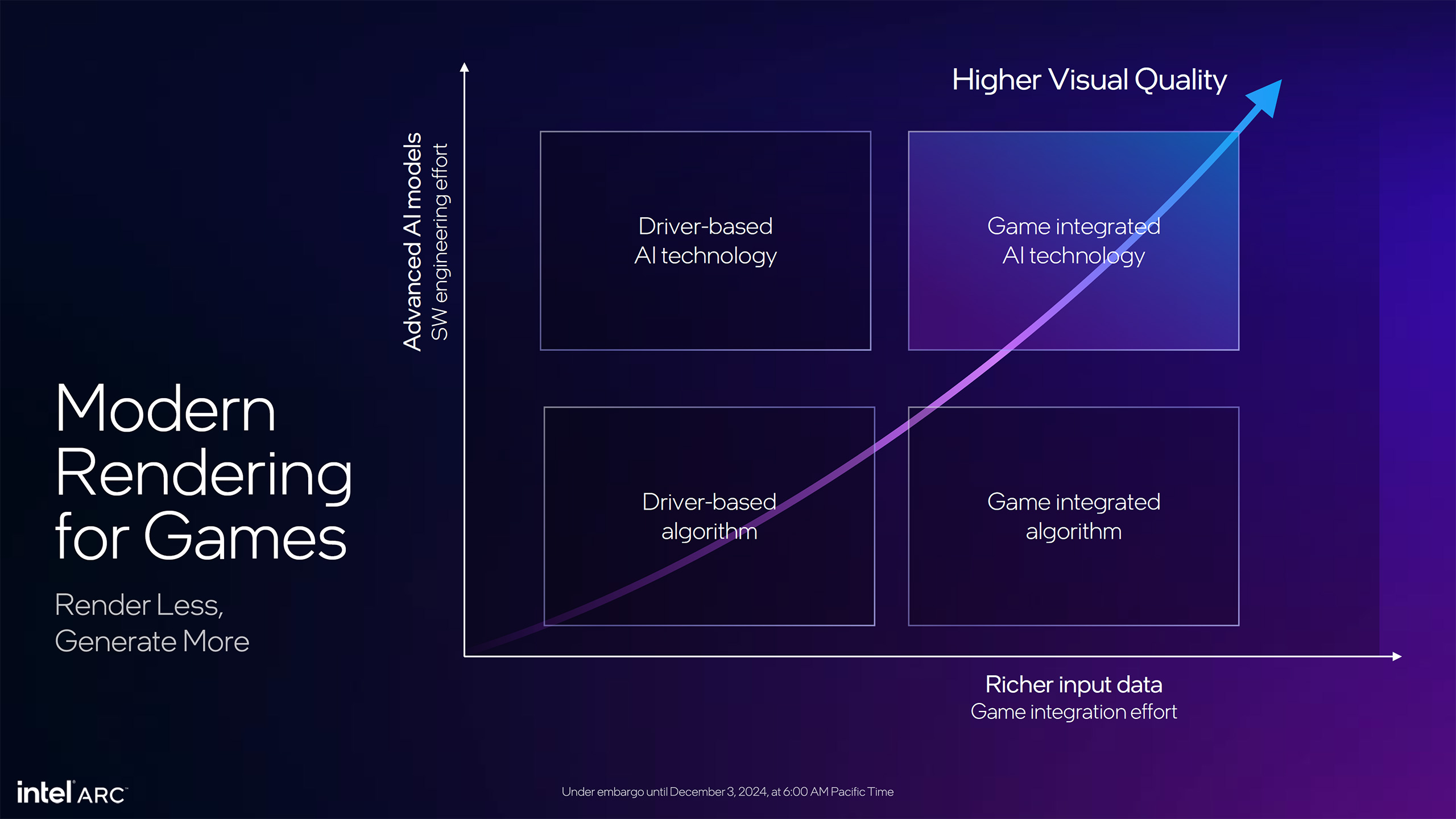

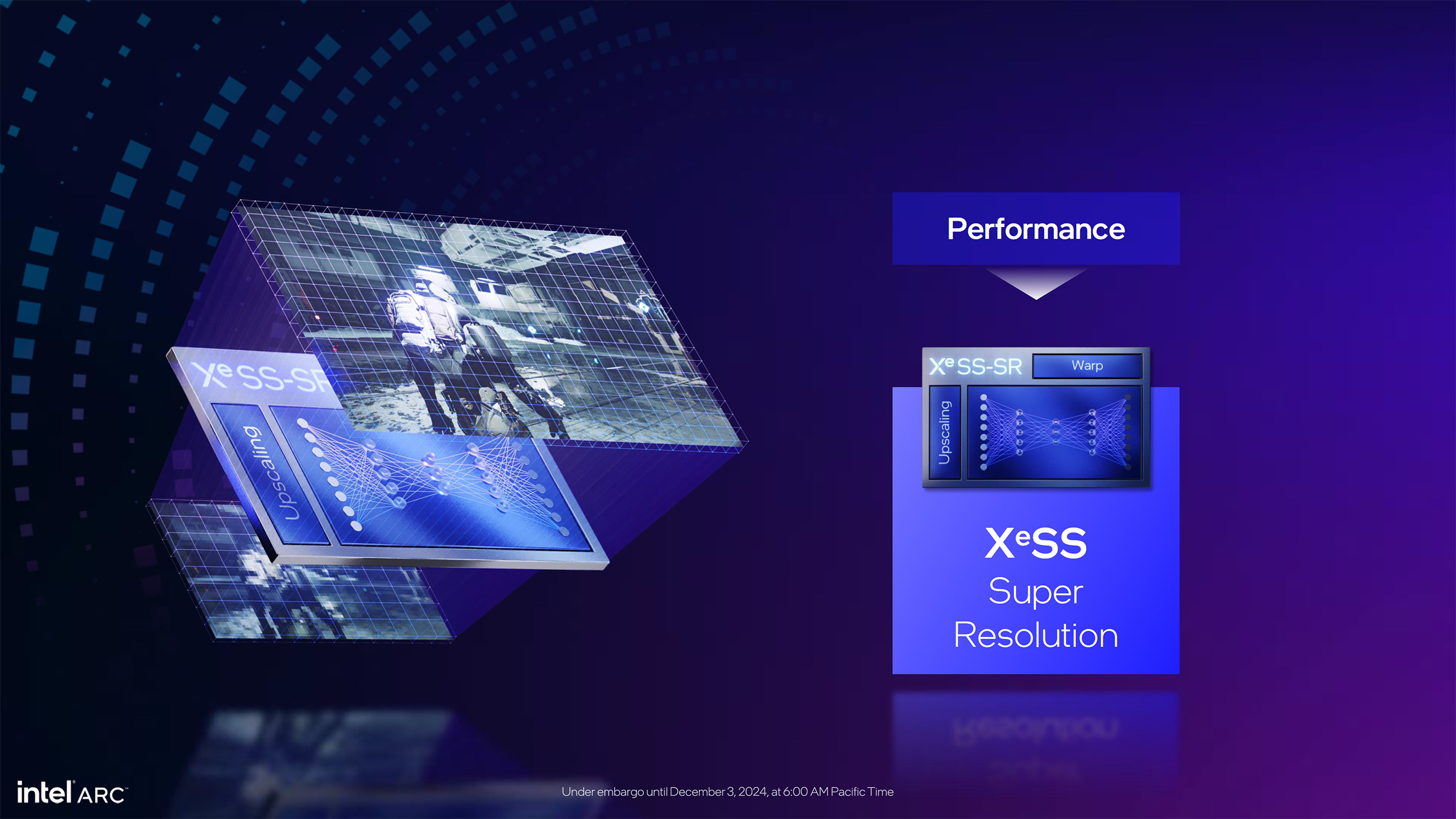

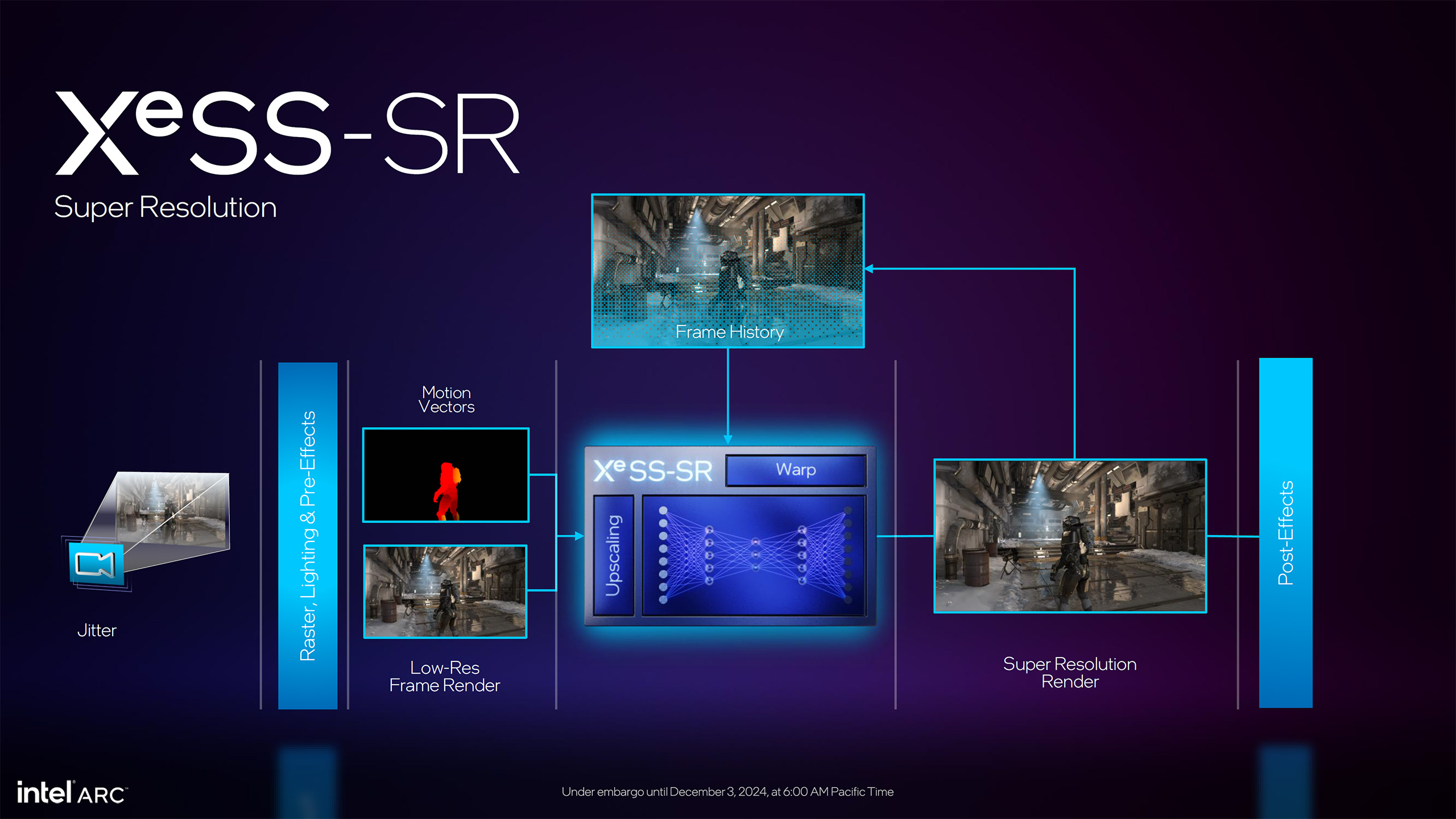

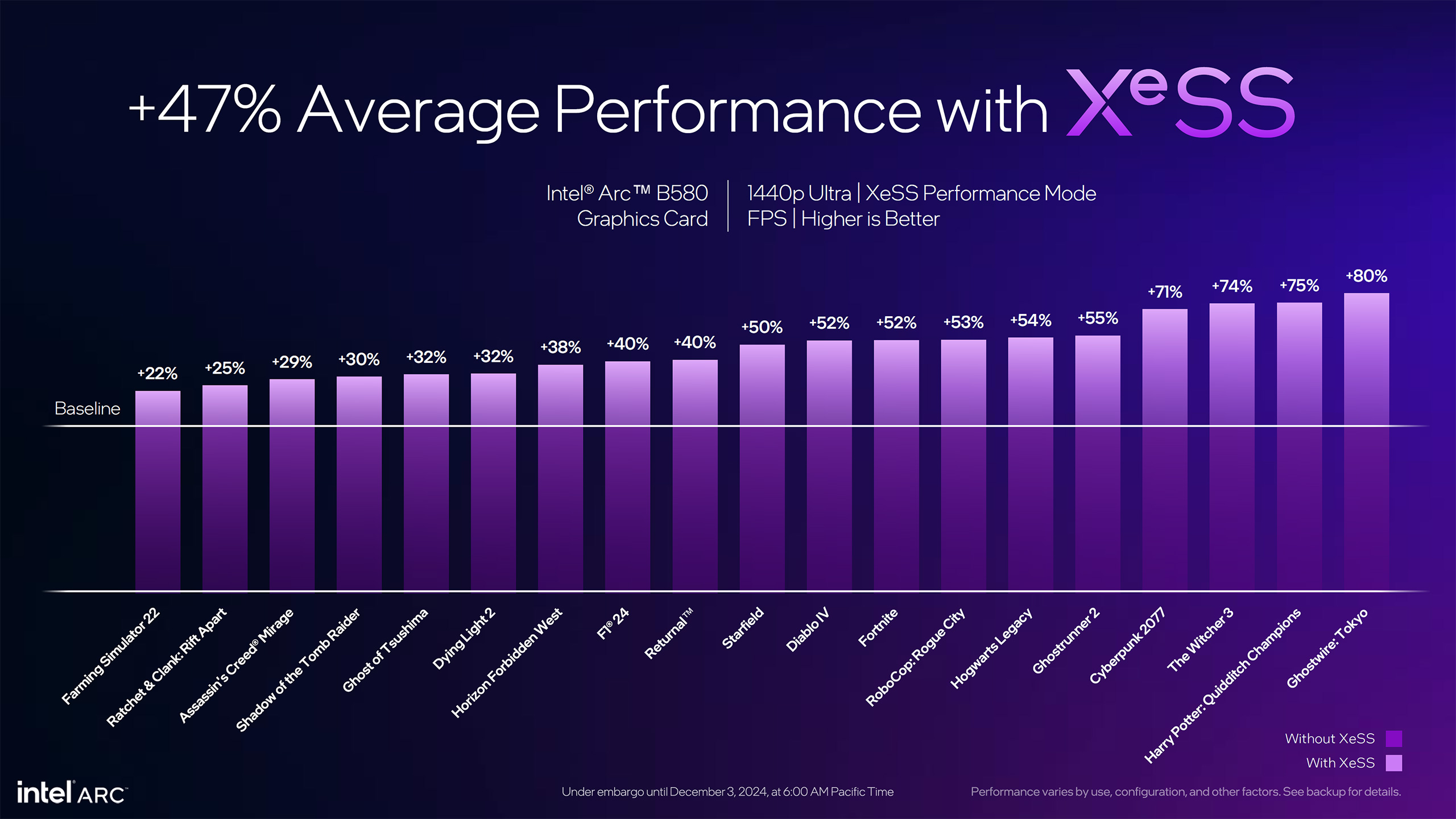

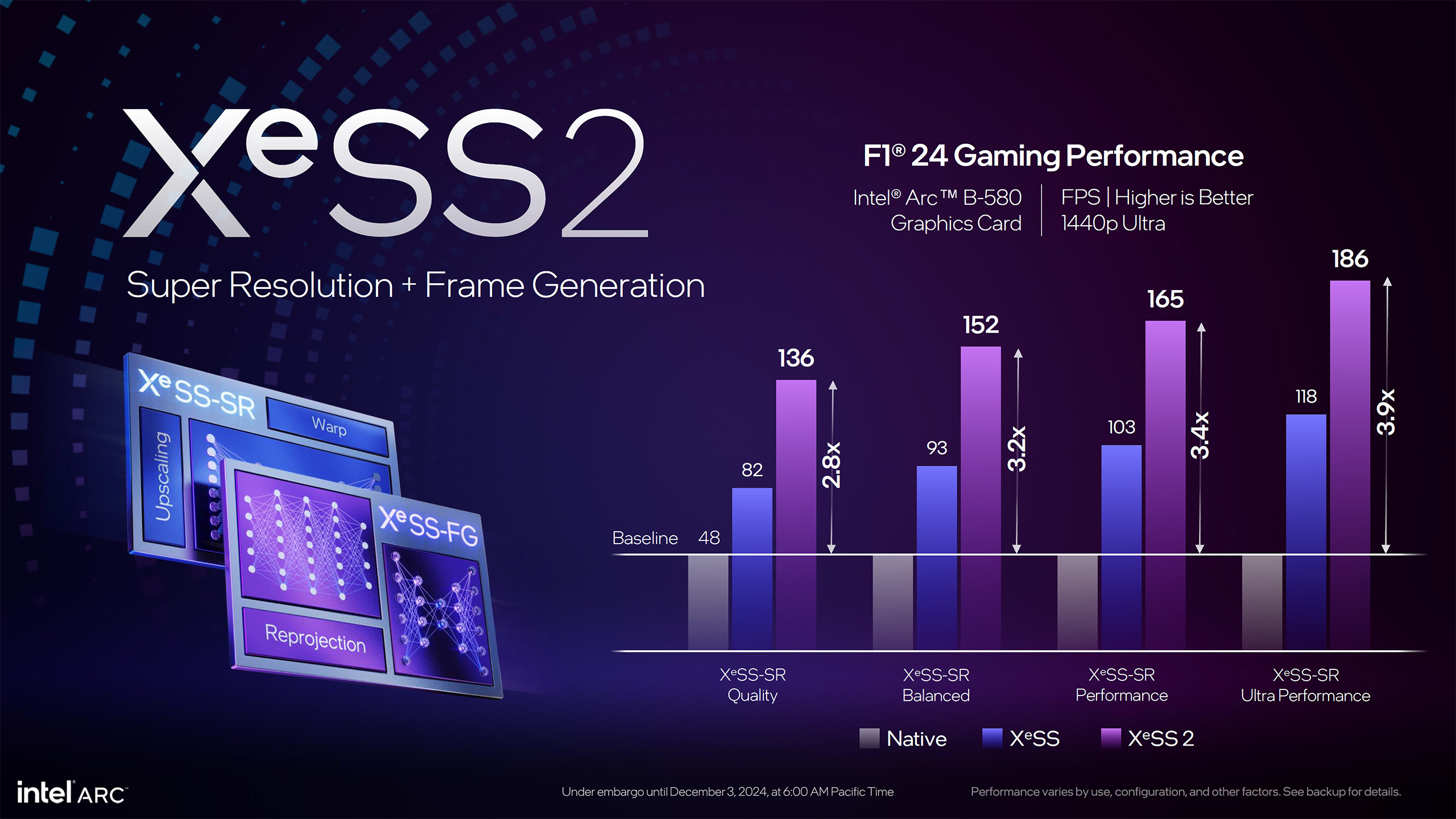

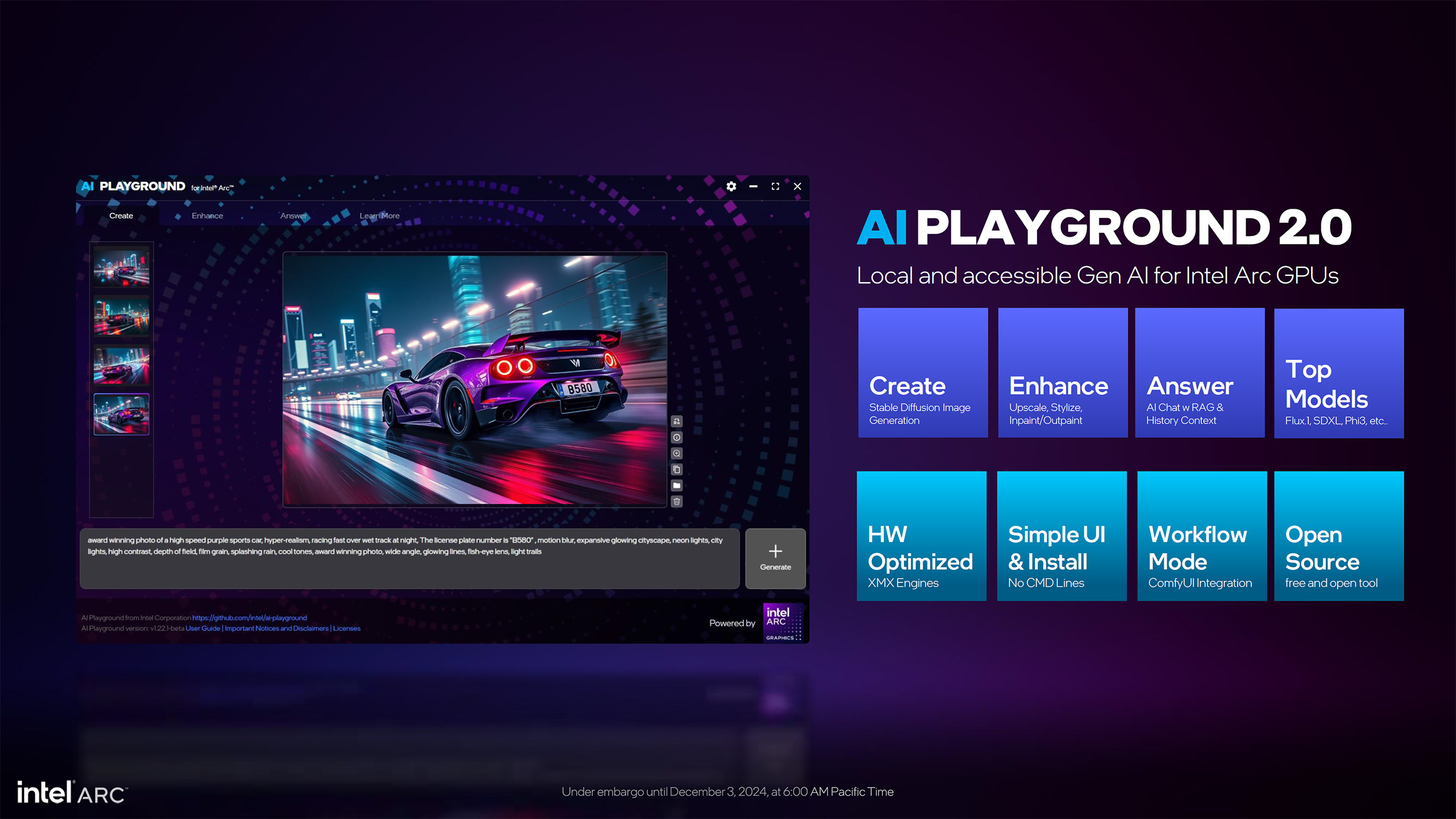

Besides the core hardware, Intel also had plenty to say about its XeSS upscaling technology. It’s not too surprising that Intel will now add frame generation and low-latency technology to XeSS. It puts all of these under the XeSS 2 brand, with XeSS-FG, XeSS-LL, and XeSS-SR sub-brands (for Frame Generation, Low Latency, and Super Resolution, respectively).

XeSS continues to follow a similar path to Nvidia’s DLSS, with some notable differences. First, XeSS-SR supports non-Intel GPUs via DP4a instructions (basically optimized INT8 shaders). However, XeSS functions differently in DP4a mode than in XMX mode, with XMX requiring an Arc GPU — basically Alchemist, Lunar Lake, or Battlemage.

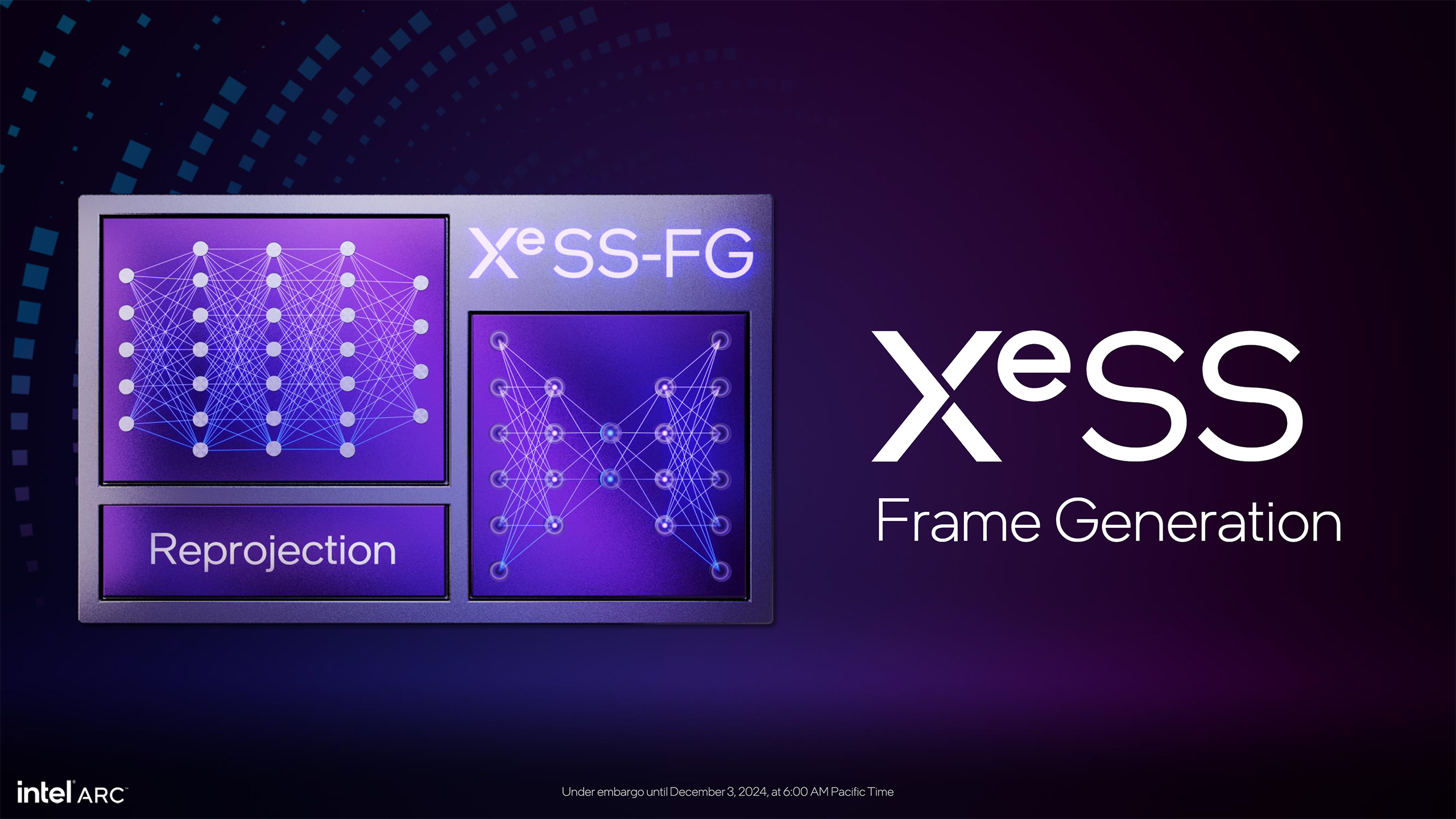

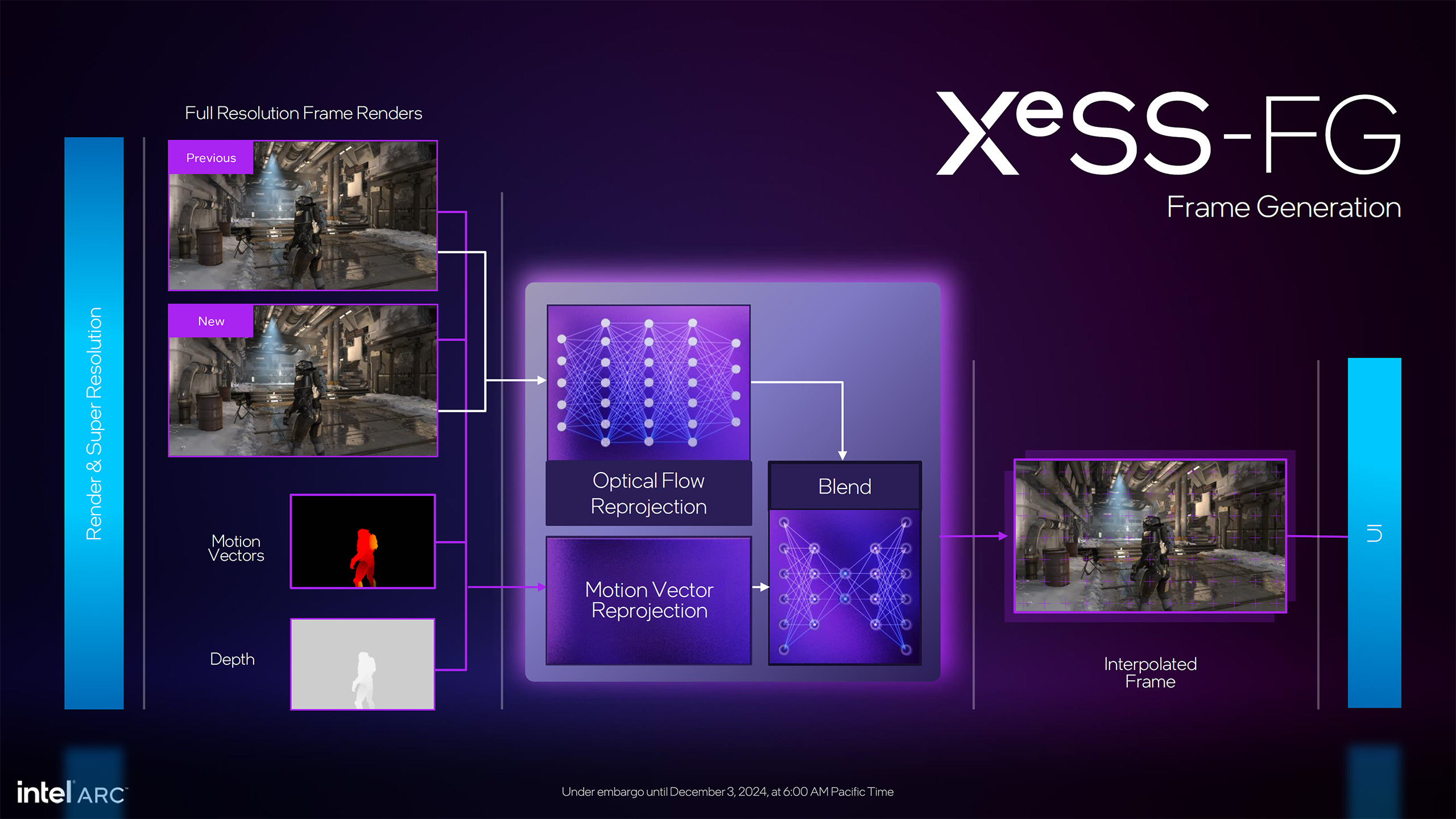

XeSS-FG frame generation interpolates an intermediate frame between two already rendered frames, in the same way that DLSS 3 and FSR 3 framegen interpolate. However, where Nvidia requires the RTX 40-series with its newer OFA (Optical Flow Accelerator) to do framegen, Intel does all the necessary optical flow reprojection via its XMX cores. It also does motion vector reprojection, and then uses another AI network to blend the two to get an ‘optimal’ output.

This means that XeSS-FG will run on all Arc GPUs — but not on Meteor Lake’s iGPU, as that lacks XMX support. It also means that XeSS-FG will not run on non-Arc GPUs, at least for the time being. It’s possible Intel could figure out a way to make it work with other GPUs, similar to what it did with XeSS-SR and DP4a mode, but we suspect that won’t ever happen due to performance requirements.

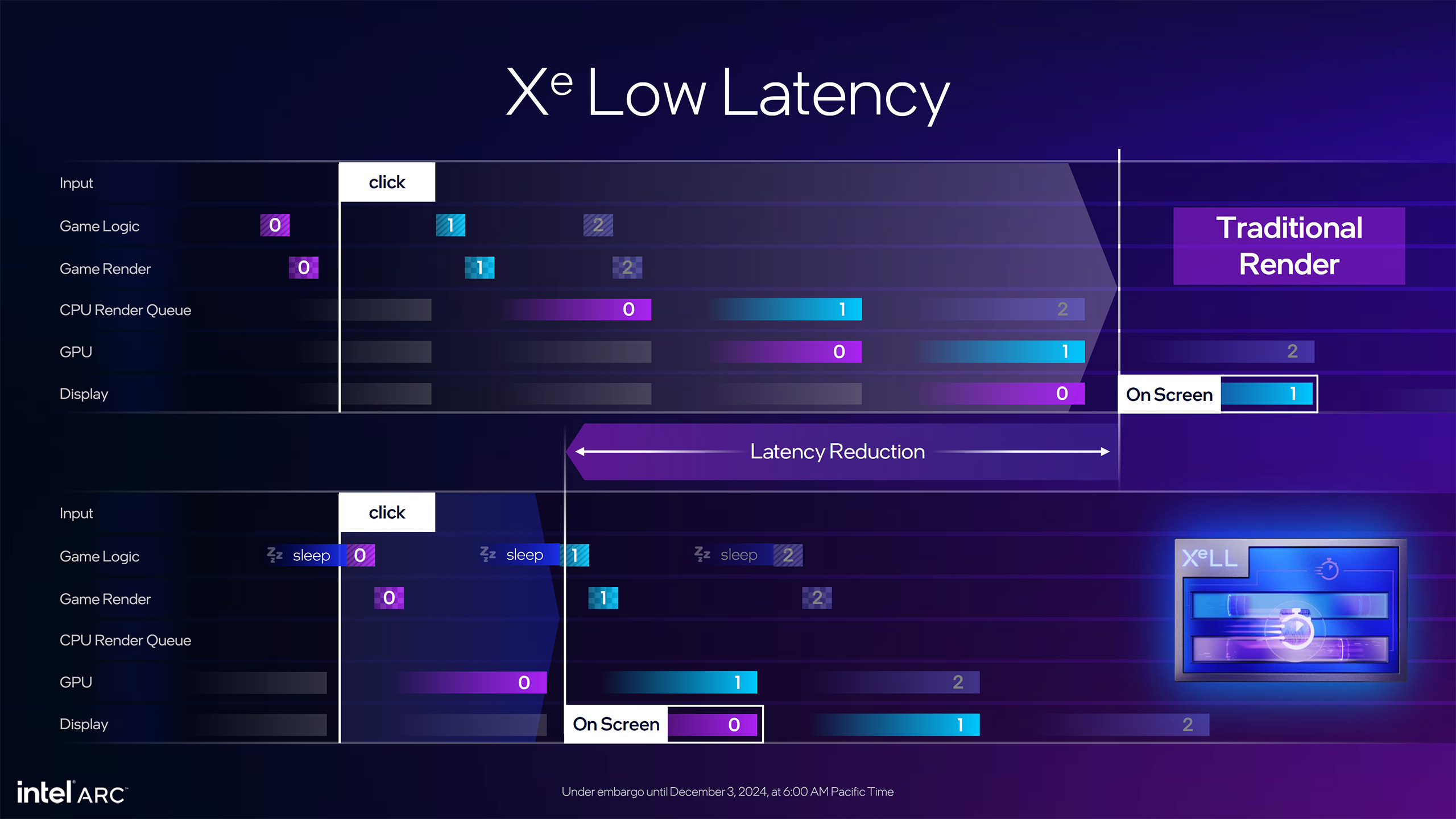

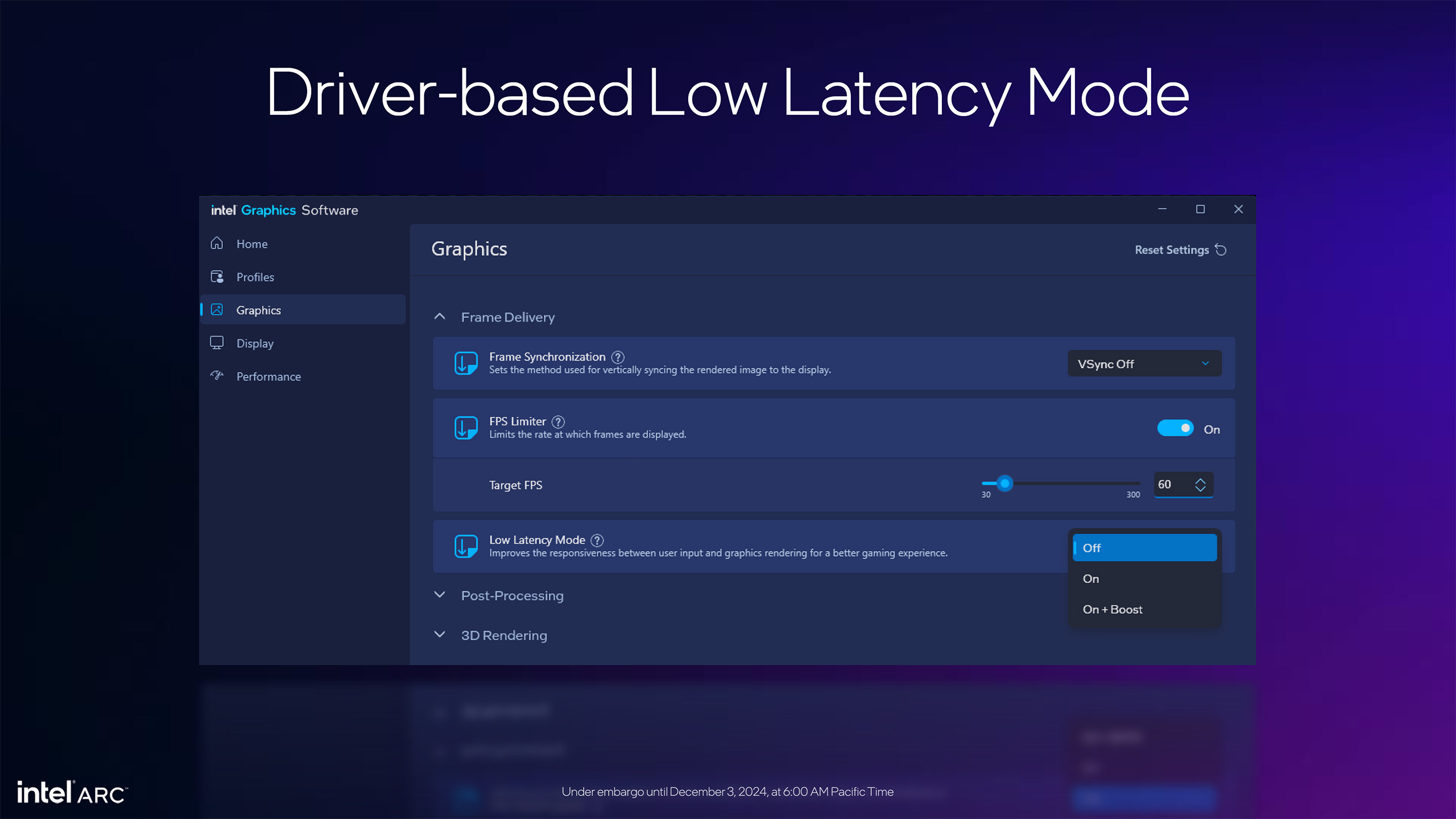

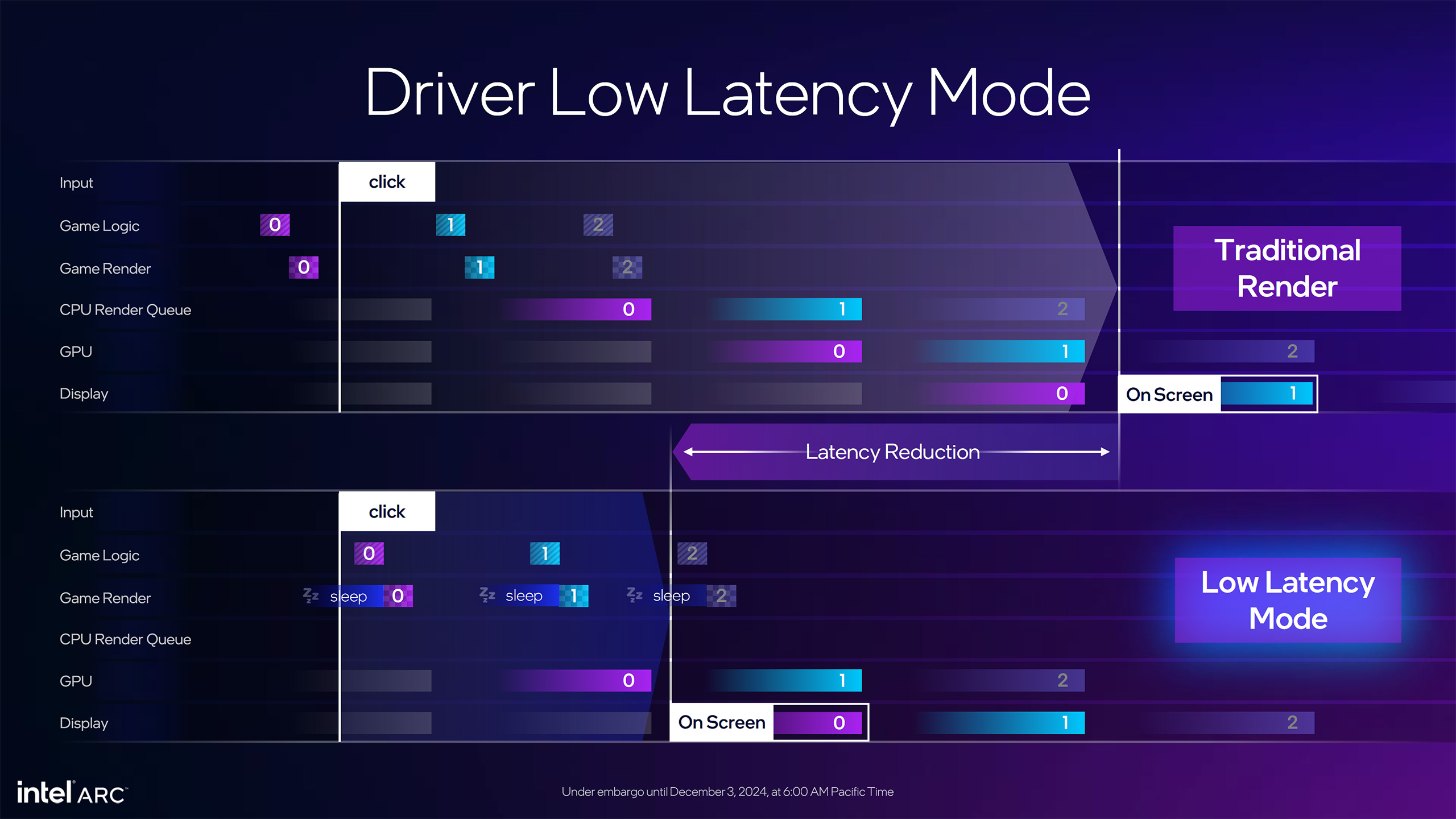

XeSS-LL pairs with framegen to help reduce the added latency created by framegen interpolation. In short, it moves certain work ahead of additional game logic calculations to reduce the latency between user input and having that input be reflected on the display. It’s roughly equivalent to Nvidia’s Reflex and AMD’s Anti-Lag 2 in principle, though the exact implementations aren’t necessarily identical.

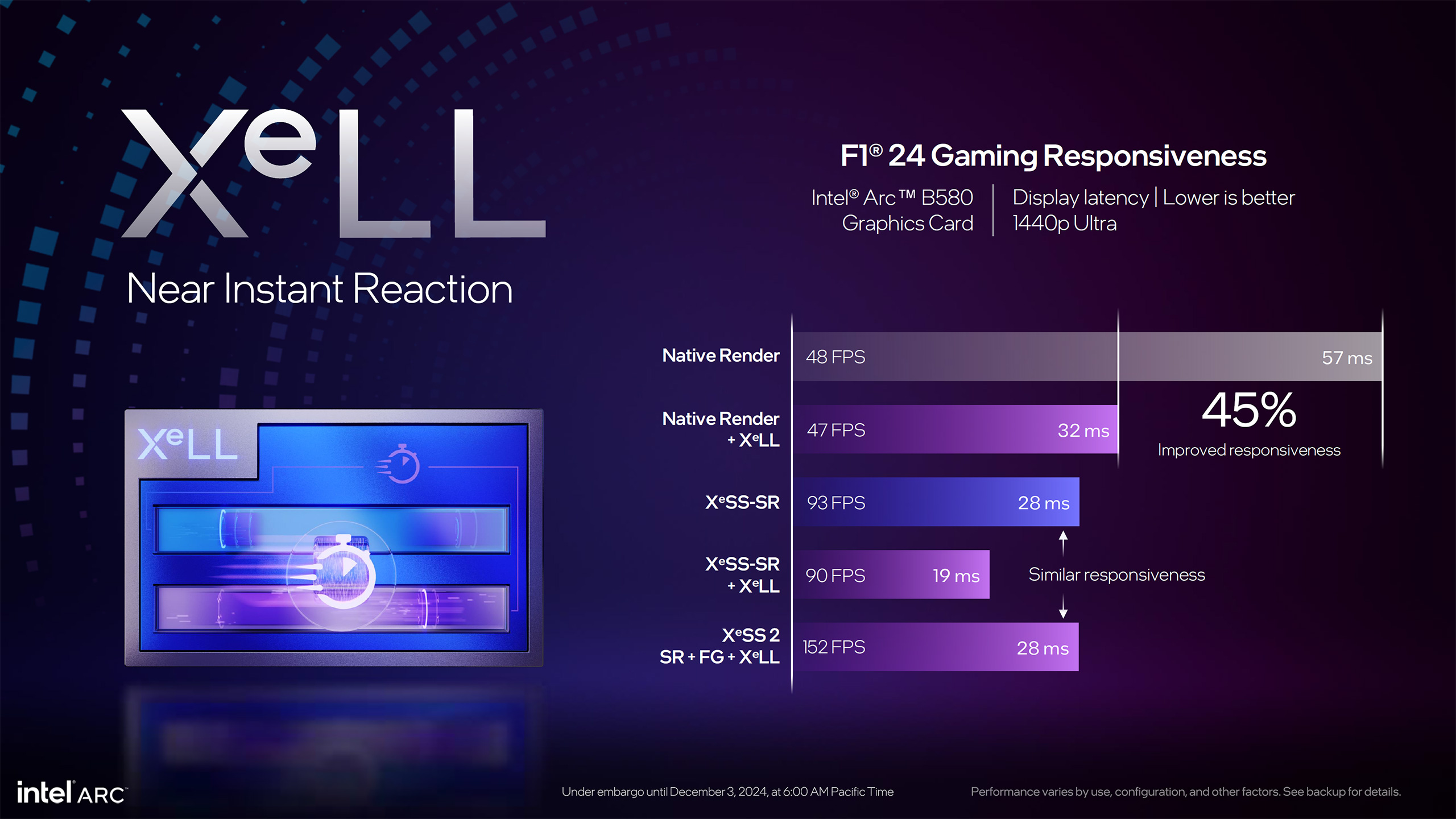

Like DLSS 3 with Reflex and FSR 3 with Anti-Lag 2, Intel says you can get the same latency with XeSS 2 running SR, FG, and LL as with standard XeSS-SR. It gave an example using F1 24 where the base latency at native rendering was 57ms, which dropped to 32ms with XeSS-LL. Turning on XeSS-SR upscaling instead dropped latency to 28ms, and SR plus LL resulted in 19ms of latency. Finally, XeSS SR + FG + LL ends up at the same 28ms of latency as just doing SR, but with 152 fps instead of 93 fps. So, you potentially get the same level of responsiveness but with higher (smoother) framerates.
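Laying out Intel's F1 24 example as data makes the trade-offs easier to compare. The numbers are Intel's, as quoted above; the dictionary layout is just our way of organizing them, and frame rates are included only for the two modes where Intel gave them.

```python
# Intel's F1 24 example: latency in milliseconds per XeSS 2 mode.
latency_ms = {
    "native": 57,
    "LL": 32,
    "SR": 28,
    "SR + LL": 19,
    "SR + FG + LL": 28,
}
# Frame rates Intel quoted for upscaling alone and for the full XeSS 2 stack.
fps = {"SR": 93, "SR + FG + LL": 152}

for mode, ms in latency_ms.items():
    change = ms / latency_ms["native"] - 1
    print(f"{mode:<13} {ms:>2} ms ({change:+.0%} vs native)")

fps_gain = fps["SR + FG + LL"] / fps["SR"]
print(f"Frame rate with FG and LL added to SR: {fps_gain:.2f}x at the same latency")
```

In other words, frame generation plus low latency buys about 63% more frames per second without giving back the latency that upscaling gained.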

XeSS has seen plenty of uptake by game developers since it first launched in 2022. There are now over 150 games that support some version of XeSS 1.x. However, as with FSR 3 and DLSS 3, developers will need to shift to XeSS 2 if they want to add framegen and low latency support. Some existing games that already support XeSS will almost certainly get upgraded, but at present Intel only named eight games that will have XeSS 2 in the coming months — with more to come.

And no, you can’t get XeSS 2 in a game with XeSS 1.x support by swapping GPUs, as there are other requirements for XeSS 2 that the game wouldn’t support. But as we’ve seen with FSR 3 and DLSS 3, it should be possible for modders to hack in support with a bit of creativity.

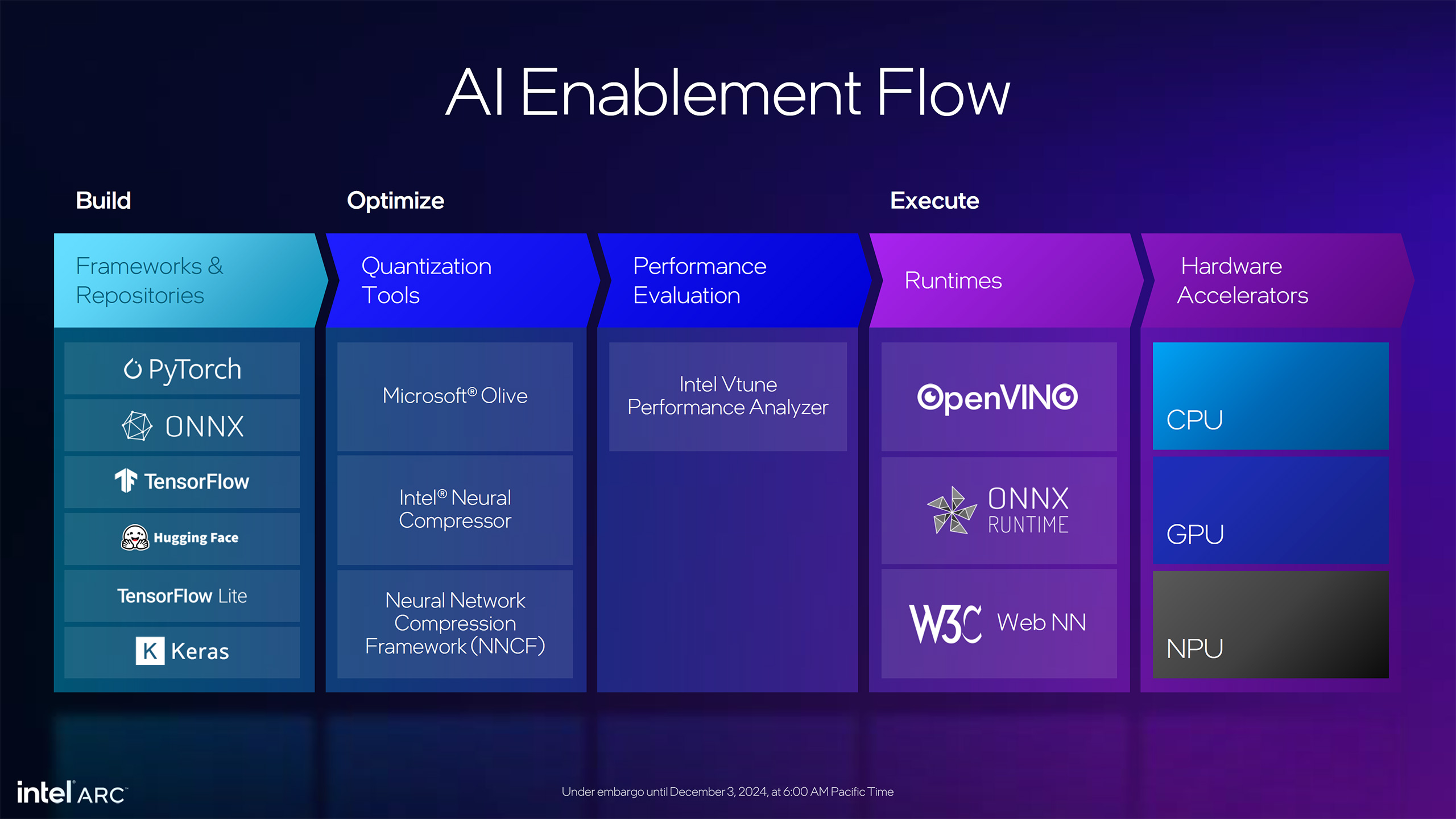

Intel also spent some time talking about its updated XMX engines, AI in general, other software changes, and overclocking. We’re not going to get into too much detail on these, as most of them are explained in the above slides, and people interested in AI should already be familiar with what’s going on in that fast-moving field.

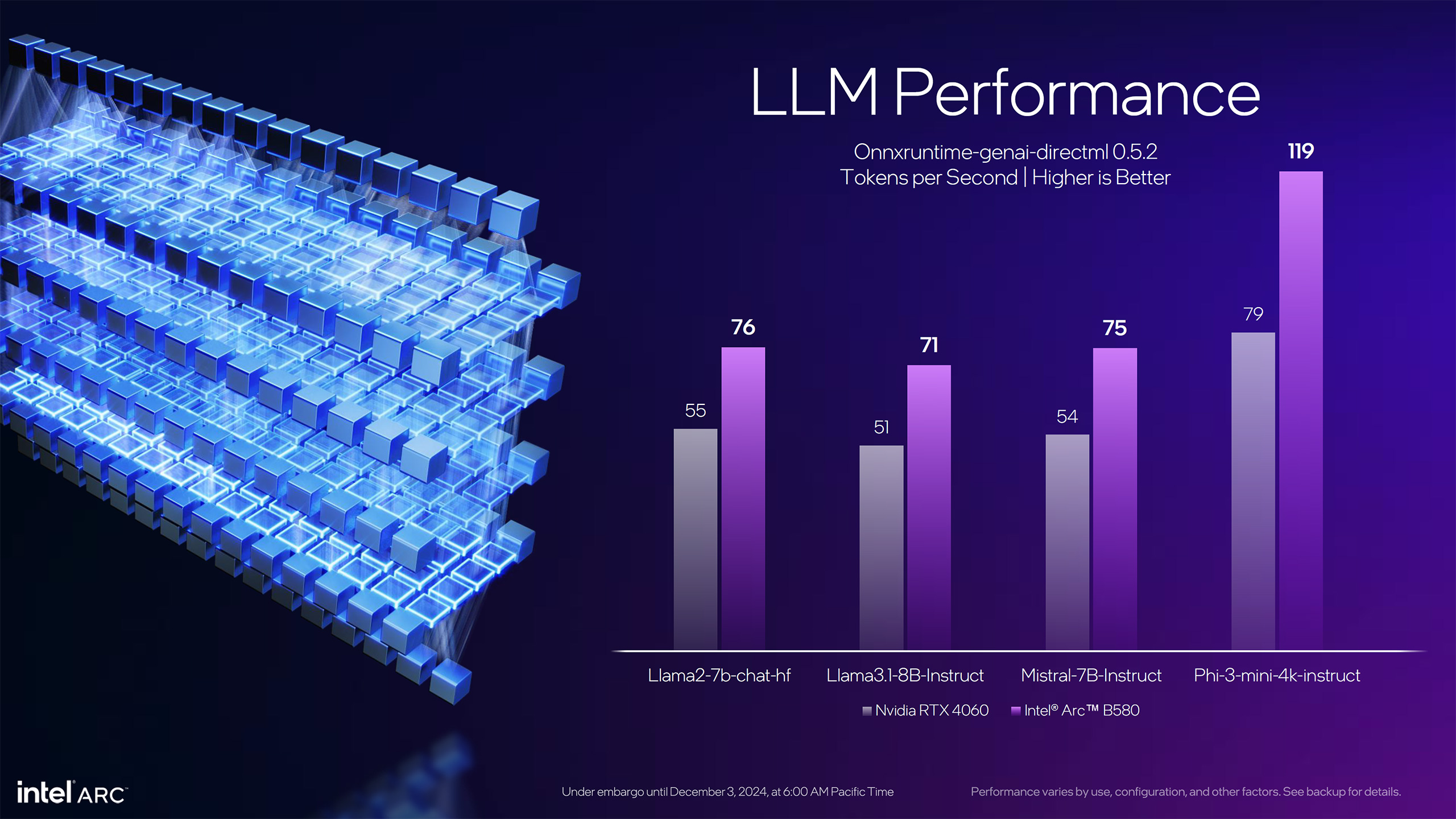

One thing Intel did show was that it’s getting better LLM performance in terms of tokens per second via several text generation models. Depending on the model, Intel says Arc B580 delivers around 40–50 percent higher AI performance than the RTX 4060. That’s going after some pretty low-hanging fruit, as the RTX 4060 isn’t exactly an AI powerhouse, though at least Battlemage should outpace AMD’s RDNA 3 offerings in the AI realm.

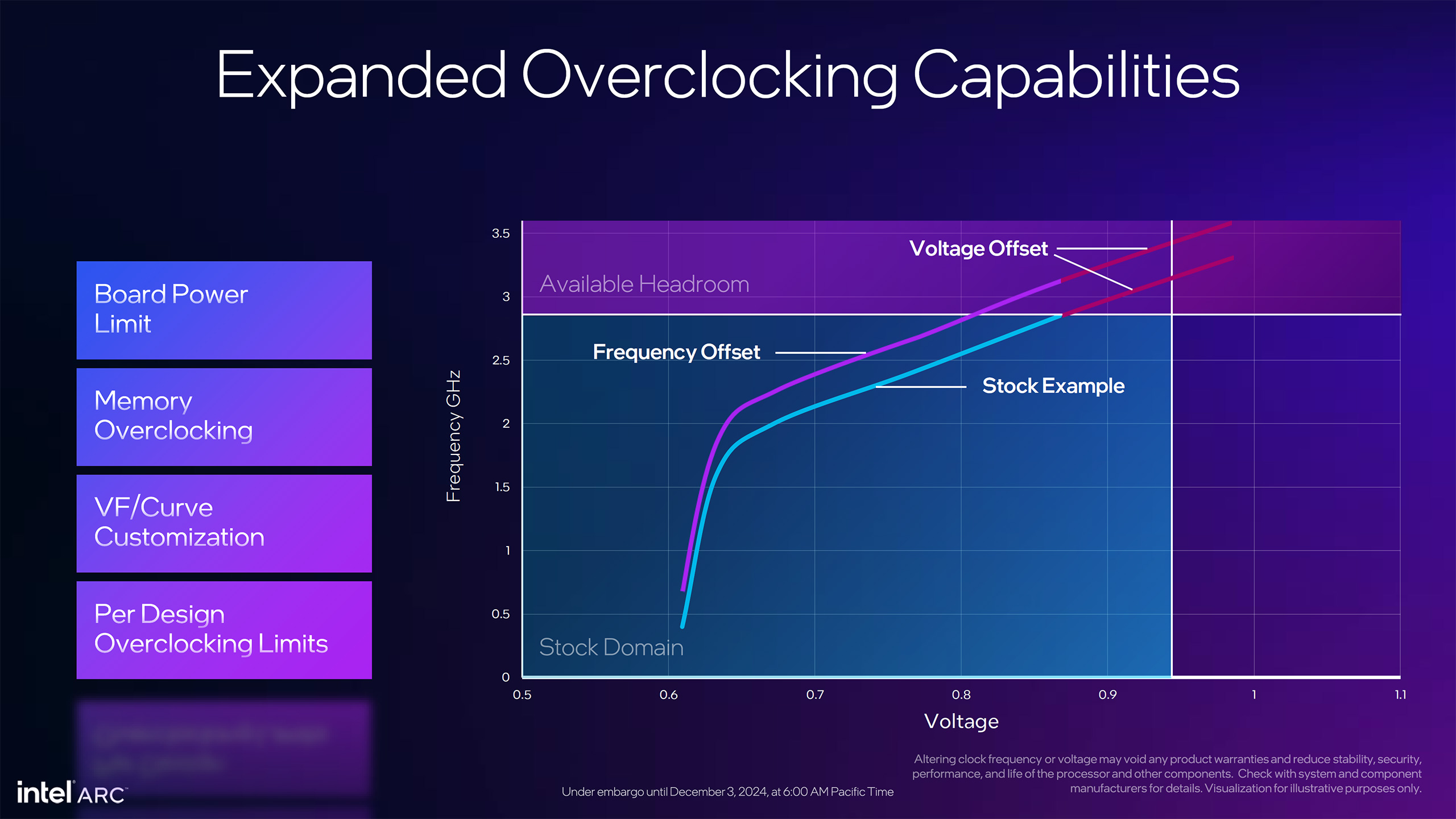

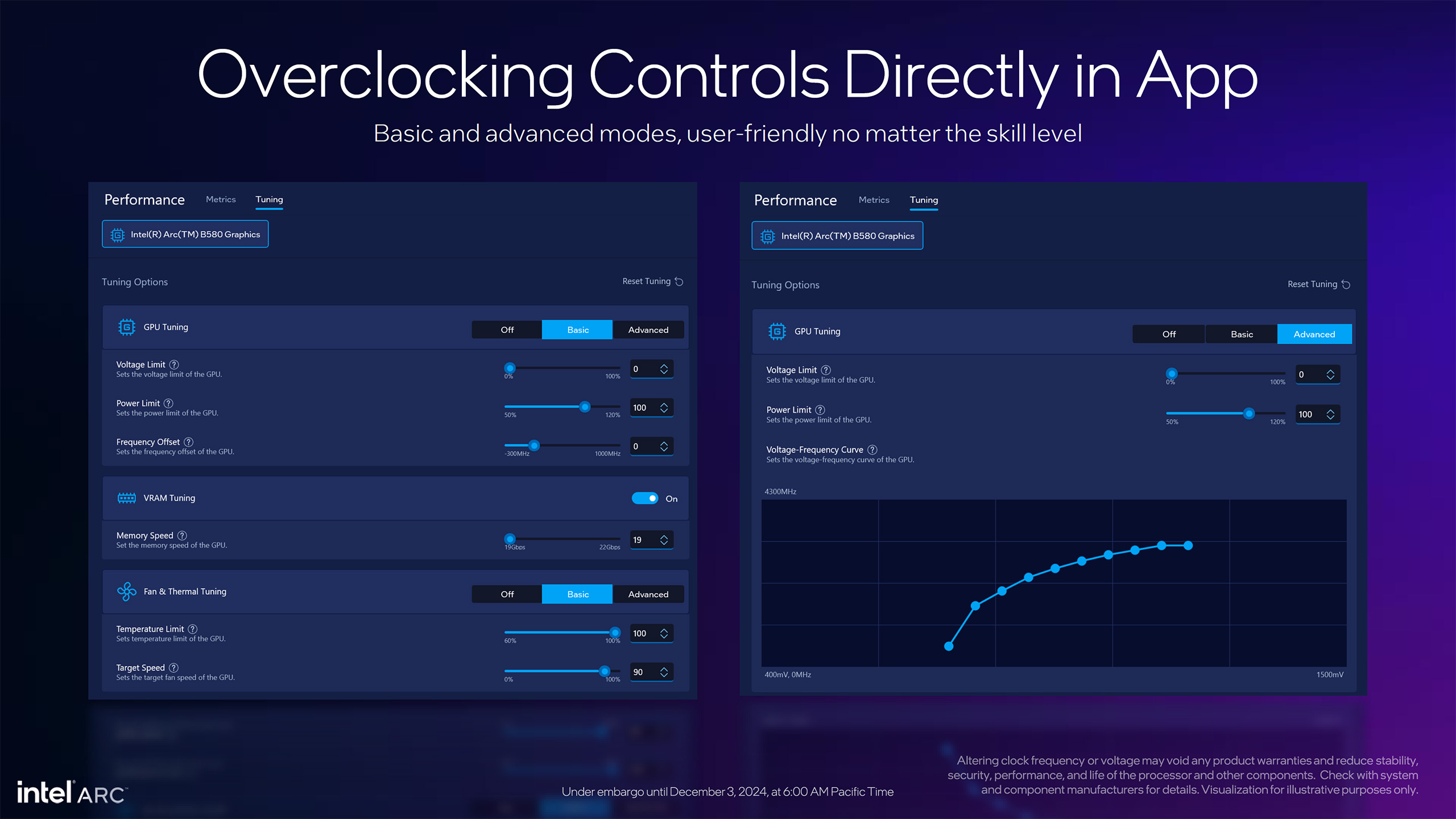

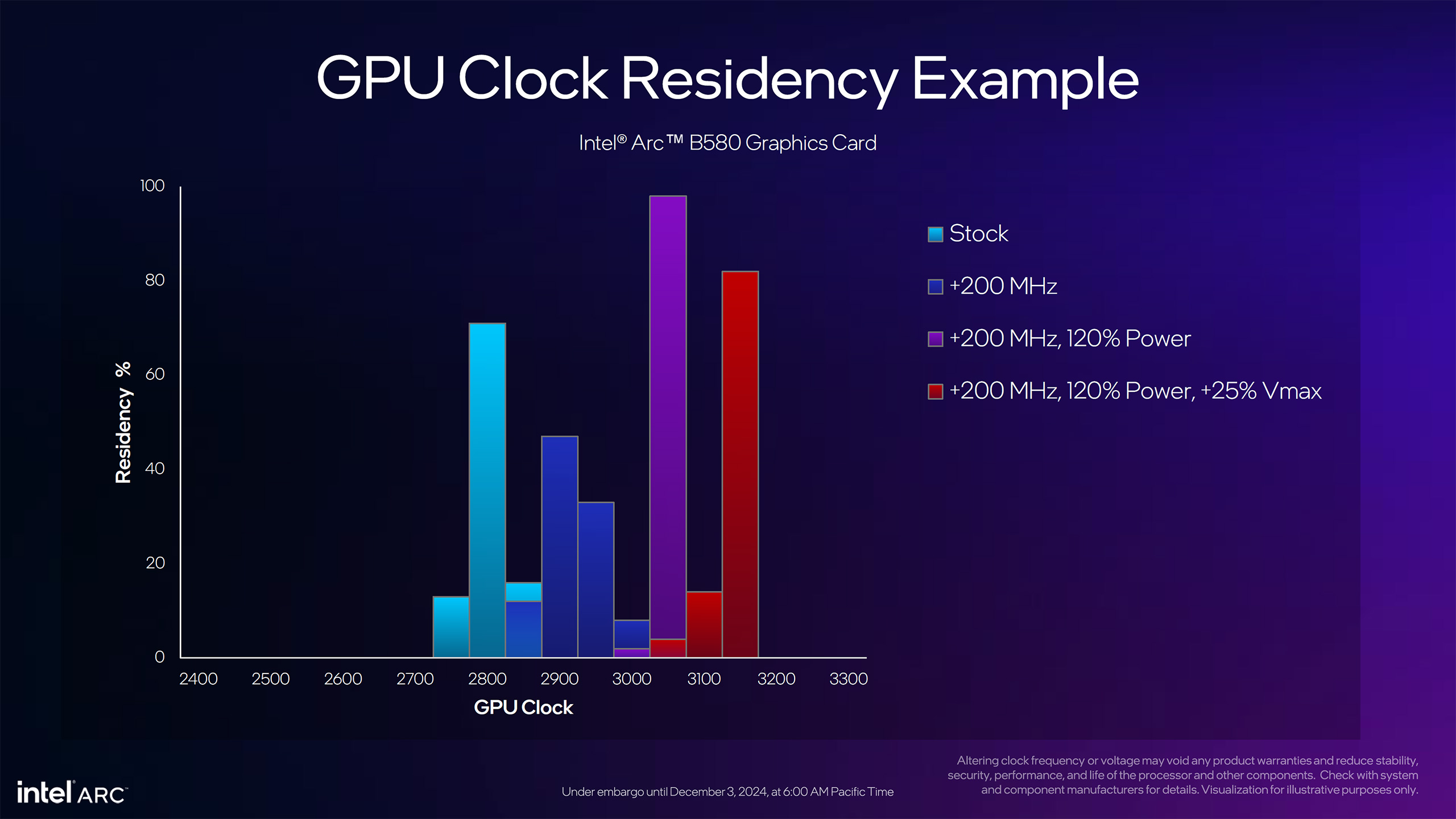

On the software front, Intel will be adding some new settings to its drivers for displays, 3D graphics profiles (per game/application), and overclocking. One interesting tidbit we can glean from the overclocking information is that the typical boost clocks while gaming look to be around 2800 MHz, or roughly 150 MHz higher than the official graphics clock. But even that ends up power constrained at times, and the overclocking controls will allow the application of clock offsets, higher power limits, and increased voltages.

Increasing just the clock offset by 200 MHz resulted in about 125 MHz higher clocks than stock. Bumping the power limit to 120% yielded an additional ~125 MHz. And finally, increasing the voltage alongside the clock offset and power limit resulted in average GPU clocks in the 3150 MHz range. Of course, as with any overclocking, stability and results aren’t guaranteed, and pushing things too hard could void your warranty.

Wrapping things up, we have Intel’s own Arc B580 Limited Edition graphics card. There are some obvious changes from the Alchemist A750/A770 design, specifically with the rear fan blowing through the cooling fins of the heatsink with no obstructions. We’ve seen similar designs on recent AMD and Nvidia GPUs, and it helps keep temperatures down while also reducing noise levels.

While Intel will make an Arc B580 graphics card, it won’t be creating a B570 model. All B570 cards will come from Intel’s AIC (add-in card) partners. And there are a couple of new names in that area. Acer, ASRock, Gunnir, and Sparkle have already created Arc Alchemist GPUs, and now Maxsun and Onix will join the Arc club. This is the first time I’ve heard of Onix. Maxsun tends to focus on the Asian market, though you can sometimes find Maxsun GPUs on places like Amazon.

We’re looking forward to testing the Arc B580 over the coming weeks, and we’ll be debuting a new GPU test suite and test system for this round of testing. Battlemage so far doesn’t look to reach new levels of performance, but the value proposition looks very promising.

Getting 12GB of VRAM in a $250 graphics card hasn’t really been an option previously, at least not on brand-new hardware. AMD’s RX 6700 XT/6750 XT bottomed out at around $300, and Nvidia’s RTX 3060 12GB mostly stayed above $300 as well. Performance from the Arc B580 should easily beat Nvidia’s older 3060, though it looks like it will generally fall short of the RTX 4060 Ti — but again, at a significantly lower price.

Overall, including ray tracing and rasterization games, the B580 should bring some much-needed competition to the GPU space. Check back in about ten days for our full review. The full Intel slide deck is below for those who’d like to see additional details we may have glossed over.
