
Nvidia rings in the holidays with a cheaper AI dev board

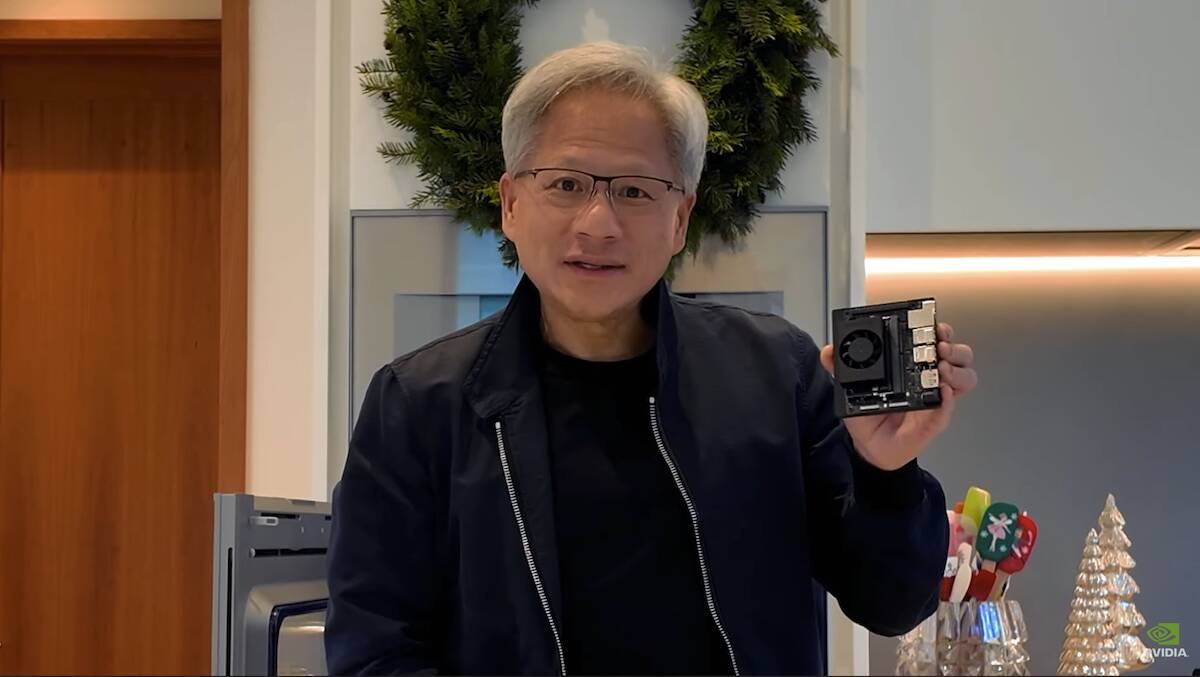

Nvidia is bringing the AI hype home for the holidays with the launch of a tiny new dev board called the Jetson Orin Nano Super.

Nvidia cheekily bills the board as the “most affordable generative AI supercomputer,” though that might be stretching the term quite a bit.

The dev kit consists of a system on module, similar to a Raspberry Pi Compute Module, that sits atop a reference carrier board for I/O and power. And, just like a Raspberry Pi, Nvidia’s diminutive dev kit is aimed at developers and hobbyists looking to experiment with generative AI.

Similar to a Raspberry Pi Compute Module, Nvidia’s Jetson Orin Nano development kit consists of a compute module containing the SoC that attaches to a carrier board for I/O and power.

Under the hood, the Orin Nano features six Arm Cortex-A78AE cores along with an Nvidia GPU based on its older Ampere architecture with 1024 CUDA cores and 32 tensor cores.

The design appears to be identical to the original Jetson Orin Nano. However, Nvidia says the “Super” edition of the board is both faster and less expensive, coming in at $249 versus the original $499 price tag.

In terms of performance, the Jetson Orin Nano Super packs 67 TOPS at INT8, which is faster than the NPUs in any of Intel, AMD, or Qualcomm’s latest AI PCs. That compute is backed by 8GB of LPDDR5 memory capable of 102GB/s of bandwidth. According to Nvidia, these specs represent a 70 percent uplift in performance and 50 percent more memory bandwidth than its predecessor.
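Nvidia quotes the improvements as percentages. As a rough sanity check, the snippet below backs out the predecessor figures those percentages imply. The helper name `implied_predecessor` is ours, and the results are only as precise as Nvidia's rounded percentages.

```python
def implied_predecessor(current: float, uplift_pct: float) -> float:
    """Back out the older figure from an 'X percent more than before' claim."""
    return current / (1 + uplift_pct / 100)

# Nvidia's figures: 67 INT8 TOPS (+70 percent), 102GB/s (+50 percent)
prev_tops = implied_predecessor(67, 70)        # roughly 39.4 TOPS
prev_bandwidth = implied_predecessor(102, 50)  # 68.0 GB/s

print(f"Implied predecessor: {prev_tops:.1f} TOPS, {prev_bandwidth:.1f} GB/s")
```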

The bandwidth boost is particularly important for those looking to play with the kind of large language models (LLMs) that power modern AI chatbots at home. At 102GB/s, we estimate the dev kit should be able to generate words at around 18-20 tokens a second when running a 4-bit quantized version of Meta’s 8-billion-parameter Llama 3.1 model.
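Here's a back-of-the-envelope version of that estimate. It assumes token generation is memory-bandwidth bound, meaning every weight is streamed from memory once per generated token. The 4 bits per weight and the 75 percent bandwidth efficiency factor are our assumptions: real 4-bit formats carry some extra scaling data, and no system sustains its peak bandwidth. The KV cache and activations are ignored.

```python
def model_bytes(params: float, bits_per_weight: float) -> float:
    """Approximate weight footprint in bytes (ignores KV cache and activations)."""
    return params * bits_per_weight / 8

def decode_tokens_per_sec(bandwidth_gbs: float, params: float,
                          bits_per_weight: float, efficiency: float = 1.0) -> float:
    """Bandwidth-bound estimate: each generated token reads every weight once.

    efficiency is an assumed fraction of peak bandwidth actually achieved.
    """
    bytes_per_token = model_bytes(params, bits_per_weight)
    return bandwidth_gbs * 1e9 * efficiency / bytes_per_token

# Llama 3.1 8B quantized to 4 bits on the Orin Nano Super's 102GB/s
ceiling = decode_tokens_per_sec(102, 8e9, 4)           # theoretical ceiling, 25.5 tok/s
realistic = decode_tokens_per_sec(102, 8e9, 4, 0.75)   # assumed 75% efficiency, ~19 tok/s

print(f"ceiling: {ceiling:.1f} tok/s, realistic: {realistic:.1f} tok/s")
```

The same arithmetic shows why bandwidth, rather than TOPS, tends to cap chatbot speed on a board like this: doubling compute leaves the result unchanged, while doubling bandwidth doubles it.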

If you’re curious about how TOPS, memory capacity, and bandwidth relate to model performance, you can check out our guide here.

For I/O, the dev kit’s carrier board features the usual fare of connectivity for an SBC, including gigabit Ethernet, DisplayPort, four USB 3.2 Gen 2 type-A ports, USB-C, dual M.2 slots with M and E keys, along with a variety of expansion headers.

In terms of software support, you might expect those Arm cores to be problematic; however, that really isn’t the case. Nvidia has supported GPUs on Arm processors for years, with its most sophisticated designs – the GH200 and GB200 – using its custom Arm-based Grace CPU. This means you can expect broad support for the GPU giant’s software suite, including Nvidia Isaac, Metropolis, and Holoscan, to name a few.

Beyond the variety of open AI models available through Nvidia’s Jetson AI Lab, the dev kit supports up to four cameras for robotics or vision-processing workloads.

Alongside the new dev kit, Nvidia is also rolling out a software update to its older Jetson Orin NX and Nano system on modules, which it says should boost GenAI performance by 1.7x, so if you already picked up an Orin Nano, you shouldn’t be missing out on too much. ®
