FT

OpenAI has been showcasing Sora, its artificial intelligence video-generation model, to media industry executives in recent weeks to drum up enthusiasm and ease concerns about the potential for the technology to disrupt specific sectors.

The Financial Times wanted to put Sora to the test, alongside the systems of rival AI video-generation companies Runway and Pika.

We asked executives in advertising, animation, and real estate to write prompts to generate videos they might use in their work. We then asked them their views on how such technology may transform their jobs in the future.

Sora has yet to be released to the public, so OpenAI tweaked some of the prompts before sending the resulting clips, a change it said produced better-quality videos.

On Runway and Pika, the initial and tweaked prompts were entered using both companies’ most advanced models. Here are the results.

Charlotte Bunyan, co-founder of Arq, a brand advertising consultancy

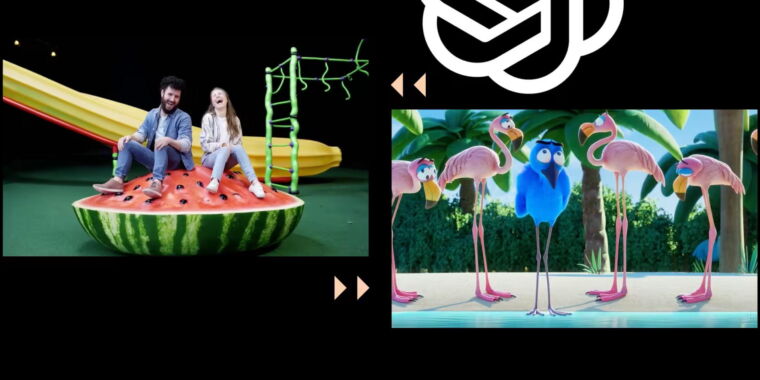

OpenAI’s revised version of Bunyan’s prompt to create a campaign for a “well-known high street supermarket”:

Pika and Runway’s videos based on Bunyan’s original prompt:

“Sora’s presentation of people was consistent, while the actual visualization of the fantastical playground was faithfully rendered in terms of the descriptions of the different elements, which others failed to generate.

“It is interesting that OpenAI changed ‘children’ to ‘people’, and I would love to know why. Is it a safeguarding question? Is it harder to represent children because they haven’t been trained on as many? They opted for ‘people’ rather than a Caucasian man with a beard and brown hair, which is what Sora actually generated, which raises questions about bias.
