AMD’s fifth-gen Epycs arrive with up to 192 cores

Intel’s 128-core Granite Rapids Xeons are barely two weeks old and AMD has already fired back with a family of fifth-gen Epycs that boast double-digit IPC gains with up to 192 cores or clock speeds as high as 5 GHz.

We got the details at the Advancing AI event in San Francisco on Thursday, and a close look at the House of Zen’s fifth-generation Epyc datacenter chips, codenamed Turin, which come in two flavors:

Turin is available in a performance-tuned scale-up variant based on the Zen 5 core and a throughput-optimized scale-out version built on the Zen 5C compact core

The first of these is a performance-tuned part with between eight and 128 of the chip designer’s full-fat Zen 5 cores, while the second is a throughput-optimized chip, which can be had with 96 to 192 of its skinny Zen 5C cores.

If the latter sounds at all familiar, that’s because AMD employed compact Zen cores in its 128-core Bergamo line of cloud-focused Epycs last year. The 192-core Epyc launching today is for all intents and purposes its spiritual successor.

However, unlike the E-cores in Intel’s 144-core 6700E Xeon 6 processors, AMD didn’t strip out functionality like AVX-512 support, which could lead to incompatibility when migrating workloads from one box to another. Instead, AMD traded peak clock speeds and per-core cache for an overall smaller core and therefore greater density.

In other words, the cores themselves are functionally identical, which appears to be why AMD stuck with the Turin naming convention for both parts this time around.

The core wars heat up

Due to the timing of the launch, AMD has the luxury of comparing its latest Epycs against last year’s Emerald Rapids Xeons as opposed to Intel’s shiny new Granite Rapids 6900P-series processors.

Against Intel’s 64-core Xeon 8592+, the House of Zen says its 192-core Epyc 9965 commands a 2.7x lead in the SPECrate 2017 Integer benchmark. That lead extends considerably in real-world workloads like MySQL and video encoding, where the 192-core part commands a 3.9x and 4x lead.

The House of Zen’s Turin platform claims up to 4x higher performance than Intel’s barely year-old Emerald Rapids Xeons

That puts AMD’s Zen 5C cores roughly on par with, if not ahead of, the Raptor Cove cores in Intel’s Emerald Rapids, depending on the workload. Of course, as with any vendor-supplied benchmarks, arm yourself with a grain of salt.
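The per-core comparison follows from dividing each quoted whole-chip speedup by the 3:1 core-count ratio. Here is a minimal sketch of that arithmetic using only the vendor-supplied figures above; the function and variable names are ours, and it assumes every core is fully loaded in each benchmark, which may not hold.

```python
# Per-core comparison derived from the vendor-quoted whole-chip speedups.
EPYC_CORES = 192   # Epyc 9965 (Zen 5C)
XEON_CORES = 64    # Xeon 8592+ (Raptor Cove)

# Speedups AMD claims for the Epyc over the Xeon, per the text.
claimed_speedups = {
    "SPECrate 2017 Integer": 2.7,
    "MySQL": 3.9,
    "video encoding": 4.0,
}


def per_core_ratio(chip_speedup, cores_a=EPYC_CORES, cores_b=XEON_CORES):
    """Return chip A's per-core throughput relative to chip B's.

    Assumes throughput is spread evenly across all cores on both chips.
    """
    return chip_speedup / (cores_a / cores_b)


per_core = {name: per_core_ratio(s) for name, s in claimed_speedups.items()}

for name, ratio in per_core.items():
    print(f"{name}: {ratio:.2f}x per core")
```

A ratio just under 1.0 means the Zen 5C core is slightly behind on that test, while anything above 1.0 means it is ahead, which is where the "roughly on par, depending on the workload" reading comes from.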

Meanwhile, if single-core perf is what you’re after, the fatter Zen 5 core-based Epycs pull even further ahead, with AMD claiming a 40 percent lead when pitting its 32-core Epyc 9355 against a similarly equipped Emerald Rapids Xeon.

In a variety of HPC workloads, AMD says Turin’s Zen 5 cores are as much as 60 percent faster than the Raptor Cove cores in Intel’s fifth-gen Xeons

For HPC-centric workloads, AMD says the Zen 5 cores in its 64-core Epyc 9575F can provide a 60 percent performance advantage in HPC modeling and sim applications like Altair Radioss, Acusolve, Ansys Fluent, and LS-DYNA compared to Intel’s own 64-core CPU.

That particular Epyc stands out as it appears to mark the sweet spot between core count and clock speeds this generation, with max frequencies capable of hitting 5 GHz.

AMD is positioning this balance as an AI win in that it’s capable of keeping up with all the pre- and post-processing, data prep, and other processes necessary to keep the GPUs chugging along.

As you may recall, when AMD launched its MI300X accelerators last December, many of the benchmarks were conducted using Intel Sapphire Rapids-based systems.

While the decision demonstrated that AMD was willing to overlook rivalries to push its Instinct parts, it was a bit embarrassing for a company that prides itself on its CPUs. With Turin, AMD appears confident its Epyc CPUs are now the right match for its AI accelerators and even its competitors’.

Trading blows

Turin’s debut sees AMD regain its core count advantage over Intel, which won’t launch its monster 288-core Sierra Forest Xeons until early next year. Then again, AMD’s approach to core-heavy CPUs is quite different.

As we mentioned earlier, Intel’s Sierra Forest Xeons achieve their massive core counts using stripped-down efficiency cores, which not only don’t clock as high as their P-core counterparts, maxing out at between 2.6 and 3.2 GHz depending on the SKU, but also lack support for several core features like AVX-512 and simultaneous multithreading. AMD simply traded clocks and cache for die area.

Because of this, comparing a Zen 5C-based Epyc against Sierra Forest is tricky. We expect that within a few months, Intel and AMD will have found workloads where their chips excel and competitors fall flat. Not all cores are created equal and simply having more doesn’t always translate into higher performance or throughput.

One area where Intel’s latest Xeons do appear to have a sizable advantage is memory bandwidth, which is often a major bottleneck to application performance in HPC and AI workloads. That’s because Intel’s Xeons support speedy MRDIMMs while AMD’s Turin does not.

With 12 channels of 8,800 MT/s MRDIMMs, a single 6900P commands 825 GBps of memory bandwidth or 6.4 GBps per core. By comparison, Turin lacks MRDIMM support this generation and the I/O die’s memory controller tops out at 6,000 MT/s. Well, technically, after initially claiming 6,000 MT/s support, AMD says that it will allow 6,400 MT/s support for customers who ask really nicely.

Assuming you aren’t a hyperscaler or cloud provider and are stuck with 6,000 MT/s DDR5, that still works out to 562 GBps on Turin or 4.4 GBps per core on the 128-core Zen 5 platform and 2.9 GBps per core on the 192-core Zen 5C platform. Obviously, per-core memory bandwidth falls as core count rises.
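For readers who want to check the sums, here is a rough sketch of the peak-bandwidth arithmetic. It assumes eight bytes per transfer per channel and 12 channels per socket on both platforms. The raw decimal results come out about 2.4 percent above the figures quoted here; dividing by 1.024 reproduces them, though that scaling is our guess at the convention used rather than anything AMD or Intel has stated.

```python
# Peak theoretical DRAM bandwidth for the configurations quoted in the text.
BYTES_PER_TRANSFER = 8   # assumption: 64 data bits per channel per transfer
CHANNELS = 12            # stated for the 6900P; assumed for Turin as well
ARTICLE_SCALE = 1.024    # assumption: scaling that matches the quoted figures


def peak_bandwidth(mt_per_s, channels=CHANNELS):
    """Peak bandwidth in decimal GB/s (10**9 bytes per second)."""
    return channels * mt_per_s * BYTES_PER_TRANSFER / 1000


def quoted_style(bandwidth):
    """Rescale a decimal GB/s figure to line up with the quoted numbers."""
    return bandwidth / ARTICLE_SCALE


# (transfer rate in MT/s, core count) for each configuration discussed.
configs = {
    "Xeon 6900P, 8,800 MT/s MRDIMM, 128 cores": (8800, 128),
    "Turin Zen 5, 6,000 MT/s DDR5, 128 cores": (6000, 128),
    "Turin Zen 5C, 6,000 MT/s DDR5, 192 cores": (6000, 192),
    "Turin Zen 5C, 6,400 MT/s DDR5, 192 cores": (6400, 192),
}

for name, (rate, cores) in configs.items():
    total = quoted_style(peak_bandwidth(rate))
    print(f"{name}: {total:.0f} GBps total, {total / cores:.1f} GBps per core")
```

These are ceilings, not measurements. Sustained bandwidth on real workloads will land lower on both vendors' parts.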

Even if you can manage to get your hands on a 6,400 MT/s system, Intel’s MRDIMM support still gives it a bandwidth advantage over AMD, which could mean greater competition from the Xeon builder among HPC vendors like HPE Cray and Eviden as a result.

A trip through Turin’s underbelly

So where did all this performance come from? The simple answer is a potent combination of faster clocks, more cores, and a hefty 17 percent IPC uplift for server apps. Turin also benefits from a process shrink.

We’ve already explored AMD’s latest core architecture in some detail this summer, but to recap, Zen 5 now features a widened front-end to allow for more branch predictions per cycle and implements a dual-decode pipeline along with i-cache and op-cache improvements aimed at curbing latency and boosting bandwidth.

AVX-512 support has also been reworked this generation with support for a full 512-bit data path as opposed to the “double-pumped” 256-bit approach we saw in Zen 4.

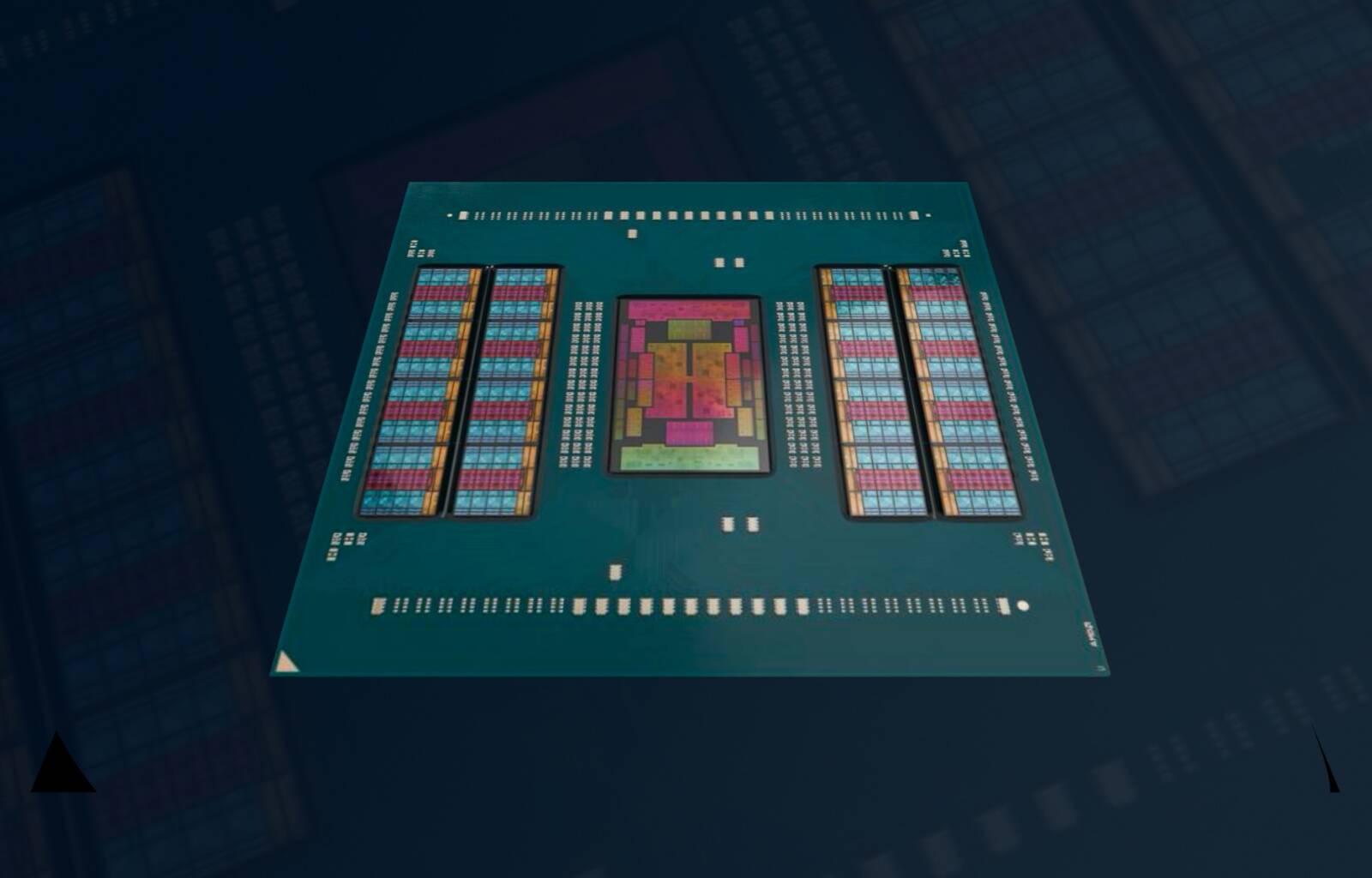

While these gains are architectural, Turin’s higher core count comes in the form of silicon – a lot more silicon. While the last-gen Genoa and Bergamo Epycs featured up to 12 and eight core complex dies (CCDs), respectively, Turin and its compact counterpart bump this up by four to 16 and 12.

These dies are also fabbed on TSMC’s more modern process tech with the Zen 5-based chips using the foundry giant’s 4nm node, while the core-heavy Zen 5C-based chips opt for its 3nm tech.

These dies are arranged in a familiar pattern with two clusters flanking either side of the I/O die. In fact, Turin shares the same SP5 socket as its predecessors, making it, with a few exceptions, a drop-in replacement – with a BIOS update, of course.

All of this silicon is necessary because AMD has kept the core density of its chiplets unchanged from past generations.

Turin’s CCDs are equipped with the same eight cores and 32 MB of L3 cache as Genoa’s. Turin-C’s CCDs, meanwhile, follow a similar pattern to Bergamo’s with 16 cores and 32 MB of L3, but consolidate the CCD into a single compute complex, which should help with latency in certain multithreaded workloads.
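To see how the chiplet counts add up to the headline core counts, and where the per-core cache trade-off comes from, here is a small illustrative sketch. The class and field names are ours; the CCD counts, cores per CCD, and 32 MB of L3 per CCD are the figures given above, describing the top-end configuration of each family only.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class EpycPackage:
    """Top-end chiplet layout for one Epyc family (illustrative model)."""
    name: str
    ccds: int
    cores_per_ccd: int
    l3_per_ccd_mb: int = 32

    @property
    def cores(self):
        # Total cores is simply CCD count times cores per CCD.
        return self.ccds * self.cores_per_ccd

    @property
    def l3_total_mb(self):
        return self.ccds * self.l3_per_ccd_mb

    @property
    def l3_per_core_mb(self):
        return self.l3_per_ccd_mb / self.cores_per_ccd


packages = [
    EpycPackage("Genoa (Zen 4)", ccds=12, cores_per_ccd=8),
    EpycPackage("Bergamo (Zen 4C)", ccds=8, cores_per_ccd=16),
    EpycPackage("Turin (Zen 5)", ccds=16, cores_per_ccd=8),
    EpycPackage("Turin (Zen 5C)", ccds=12, cores_per_ccd=16),
]

for p in packages:
    print(f"{p.name}: {p.cores} cores, {p.l3_total_mb} MB L3, "
          f"{p.l3_per_core_mb:.0f} MB L3 per core")
```

The compact-core parts pack twice as many cores behind the same 32 MB slice of L3, which is the per-core cache AMD gave up in exchange for density.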

This approach has the benefit of allowing AMD to scale up its core count modularly. Need more cores? Add eight at a time. And unlike Intel’s 6900P-series processors announced late last month, Epyc’s memory controller is located on the I/O die. This means that memory bandwidth scales independently from core count, which can be beneficial for HPC customers trying to tune for per-core memory bandwidth.

One potential downside to this approach is higher latency for workloads that have to traverse the multiple CCDs. This is one of the reasons that Intel has favored fewer higher-core-count chiplets over AMD’s more modular approach. However, the actual impact of this will be highly workload dependent.

Another side effect of all that extra silicon is increased power draw. Turin is rated to pull up to 500 W, at least on its densest and highest clocking parts – an increase of 100 W over Genoa. And therein lies the caveat to SP5 platform support. Those 500 W parts will need an all-new motherboard to keep up with power demand.

While 500 W for a CPU might sound like a lot, many of Intel’s Granite Rapids Xeons are rated for similar power consumption.

AMD makes consolidation play

As core counts inch ever higher, AMD continues to beat the server consolidation drum as it sets its sights on displacing all those Intel Cascade Lake servers due for replacement over the next few years.

With 192 cores, AMD says just one dual-socket Turin platform can replace seven aging Cascade Lake Xeon boxes

AMD now says a pair of 192-core Epycs can replace seven dual-socket Cascade Lake systems, allowing administrators to consolidate 1,000 aging Xeon systems into just 131 core-dense systems while consuming 68 percent less power.

That’s just based on core count and doesn’t take into account the five years of IPC and frequency gains. Not only will the Turin box have roughly the same number of cores as the 14 Xeons it replaces, it’ll be substantially faster.
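As a sanity check on the consolidation figures, the sketch below works the ratio both ways: how many boxes a flat 7:1 swap would need for 1,000 legacy systems, and what ratio the quoted 131-system figure implies. The helper names are ours, and the numbers are AMD's claims as reported here rather than anything independently measured.

```python
import math

LEGACY_SERVERS = 1000        # aging dual-socket Cascade Lake systems
QUOTED_RATIO = 7             # legacy systems replaced per dual-socket Turin box
QUOTED_TURIN_SERVERS = 131   # replacement count AMD cites for 1,000 systems


def servers_needed(legacy, ratio):
    """Whole replacement servers required at a given consolidation ratio."""
    return math.ceil(legacy / ratio)


def implied_ratio(legacy, replacements):
    """Consolidation ratio implied by a legacy/replacement server count."""
    return legacy / replacements


needed_at_7_to_1 = servers_needed(LEGACY_SERVERS, QUOTED_RATIO)
ratio_behind_131 = implied_ratio(LEGACY_SERVERS, QUOTED_TURIN_SERVERS)

print(f"Flat 7:1 swap: {needed_at_7_to_1} Turin systems")
print(f"131 systems implies roughly {ratio_behind_131:.2f}:1")
```

The gap between the two suggests the 131-system figure assumes a slightly better ratio than a flat 7:1, though AMD's exact methodology isn't spelled out here.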

As it stands, AMD estimates it controls 33.7 percent of the datacenter CPU market by revenue today. If Lisa Su and company can convince owners of aging Xeons to kick Intel to the side, it could be a huge boon to their market share.

But as attractive as a 7:1 consolidation might sound to CTOs looking to cut costs, it’s not a slam dunk for AMD, which has no monopoly on core-dense x86 processors. Intel is making similar consolidation claims around its Granite Rapids P-core and Sierra Forest E-core Xeons.

This also has implications for failover and resilience as now the blast radius for a single box going offline has the potential to take out seven nodes’ worth of workloads – a risk a fellow vulture highlighted in a recent opinion piece following Granite Rapids’ debut.

However, we’ll note this isn’t entirely on AMD to solve. A lot of these challenges can be mitigated by modern high availability approaches and/or container orchestration.

That doesn’t necessarily help folks running large numbers of bare metal servers or VMs, which often rely on failover mechanisms rather than the fully redundant ones you might see in a Kubernetes cluster. As such, realizing Intel and AMD’s consolidation promises may require IT teams to modernize their application stacks, something that may be a bigger pain than sticking with lower-core-count parts.

The good news is AMD isn’t forcing customers to adopt these high-core-count parts if they’re not ready. The chip biz offers Turin processors ranging from a mere eight cores all the way up to 192.

Competition intensifies

While AMD’s Epycs continue to win share in the datacenter, the CPU space is only getting more competitive.

Intel has multiple CPUs incoming including its lower-core-count 6700P Granite Rapids Xeons, which support up to eight sockets, as well as its 288 E-core monster due out early next year.

Ampere Computing, meanwhile, continues to push its own Arm-based CPU architecture with next-gen chips pushing 256 and even 512 cores.

Speaking of Arm, all three of these companies face competition from a growing number of custom RISC-based CPUs. The three major cloud providers – Amazon, Microsoft, and Google – are also in the CPU game, with AWS’s Graviton now in its fourth generation.

That number could grow to four in a roundabout way given enough time as we recently learned Oracle owns nearly a third of Ampere with the option to buy controlling interest in the chip startup as early as 2027. Whether that will happen, we’ll have to wait and see.

In the midst of this, we could see the Turin family grow. Historically, AMD’s X-class Epycs have trailed their mainstream siblings. Genoa-X with its 1.1 GB of L3 cache arrived a little over six months after Genoa.

For the moment, Turin spins of those parts remain unconfirmed, and speaking with press ahead of Thursday’s keynote, Dan McNamara, VP of AMD’s server division, suggested that, if they are refreshed, it may not happen on the same timetable as Epyc 4. ®