BBC complains about incorrect Apple Intelligence notification summaries

The UK’s BBC has complained about Apple’s notification summarization feature in iOS 18 completely fabricating the gist of an article. Here’s what happened, and why.

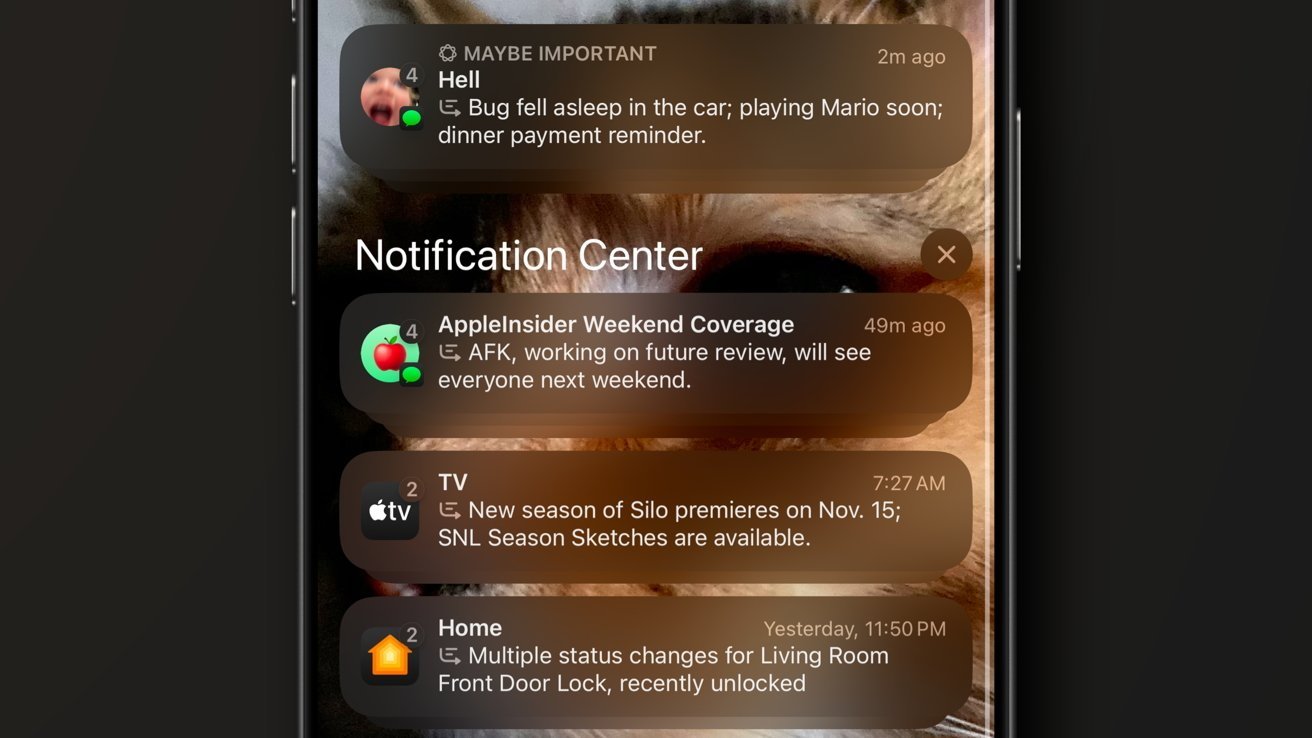

The introduction of Apple Intelligence included summarization features, saving users time by offering key points of a document or a collection of notifications. On Friday, the summarization of notifications was a big problem for one major news outlet.

The BBC has complained to Apple about how the summarization misinterprets news headlines and comes up with the wrong conclusion when producing summaries. A spokesperson said Apple was contacted to “raise this concern and fix the problem.”

In an example offered in its public complaint, a notification summarizing BBC News states “Luigi Mangione shoots himself,” referring to the man arrested for the murder of UnitedHealthcare CEO Brian Thompson. Mangione, who is in custody, is very much alive.

“It is essential to us that our audiences can trust any information or journalism published in our name and that includes notifications,” said the spokesperson.

Incorrect summarizations aren’t just an issue for the BBC, as the New York Times has also fallen victim. According to a Bluesky post about a November 21 summary, the notification claimed “Netanyahu arrested,” but the story was really about the International Criminal Court issuing an arrest warrant for the Israeli prime minister.

Apple declined to comment to the BBC.

Hallucinating the news

The instances of incorrect summaries are referred to as “hallucinations.” The term describes an AI model coming up with not quite factual responses, even in the face of extremely clear sets of data, such as a news story.

Hallucinations can be a big problem for AI services, especially in cases where consumers rely on getting a straightforward and simple answer to a query. It’s also something that companies other than Apple have to deal with.

For example, early versions of Google’s Bard AI, now Gemini, somehow combined Malcolm Owen, the AppleInsider writer, with the late singer of the same name from the band The Ruts.

Hallucinations can happen in models for a variety of reasons, such as issues with the training data or the training process itself, or a misapplication of learned patterns to new data. The model may also lack enough context in its data and prompt to offer a fully correct response, or it may make an incorrect assumption about the source data.

It is unknown what exactly is causing the headline summarization issues in this instance. The source article was clear about the shooter, and said nothing about an attack on the man.

Apple CEO Tim Cook understood this was a potential issue at the time of announcing Apple Intelligence. In June, he acknowledged that it would be “short of 100%,” but that it would still be “very high quality.”

In August, it was revealed that Apple Intelligence had instructions specifically to counter hallucinations, including the phrases “Do not hallucinate. Do not make up factual information.”

It is also unclear whether Apple will want to or be able to do much about the hallucinations, because it has chosen not to monitor what users are actively seeing on their devices. Apple Intelligence prioritizes on-device processing where possible, a security measure that also means Apple won’t get much feedback on actual summarization results.