Intel formally introduced its Gaudi 3 accelerator for AI workloads today. The new processors are slower than Nvidia’s popular H100 and H200 GPUs for AI and HPC, so Intel is betting the success of its Gaudi 3 on its lower price and lower total cost of ownership (TCO).

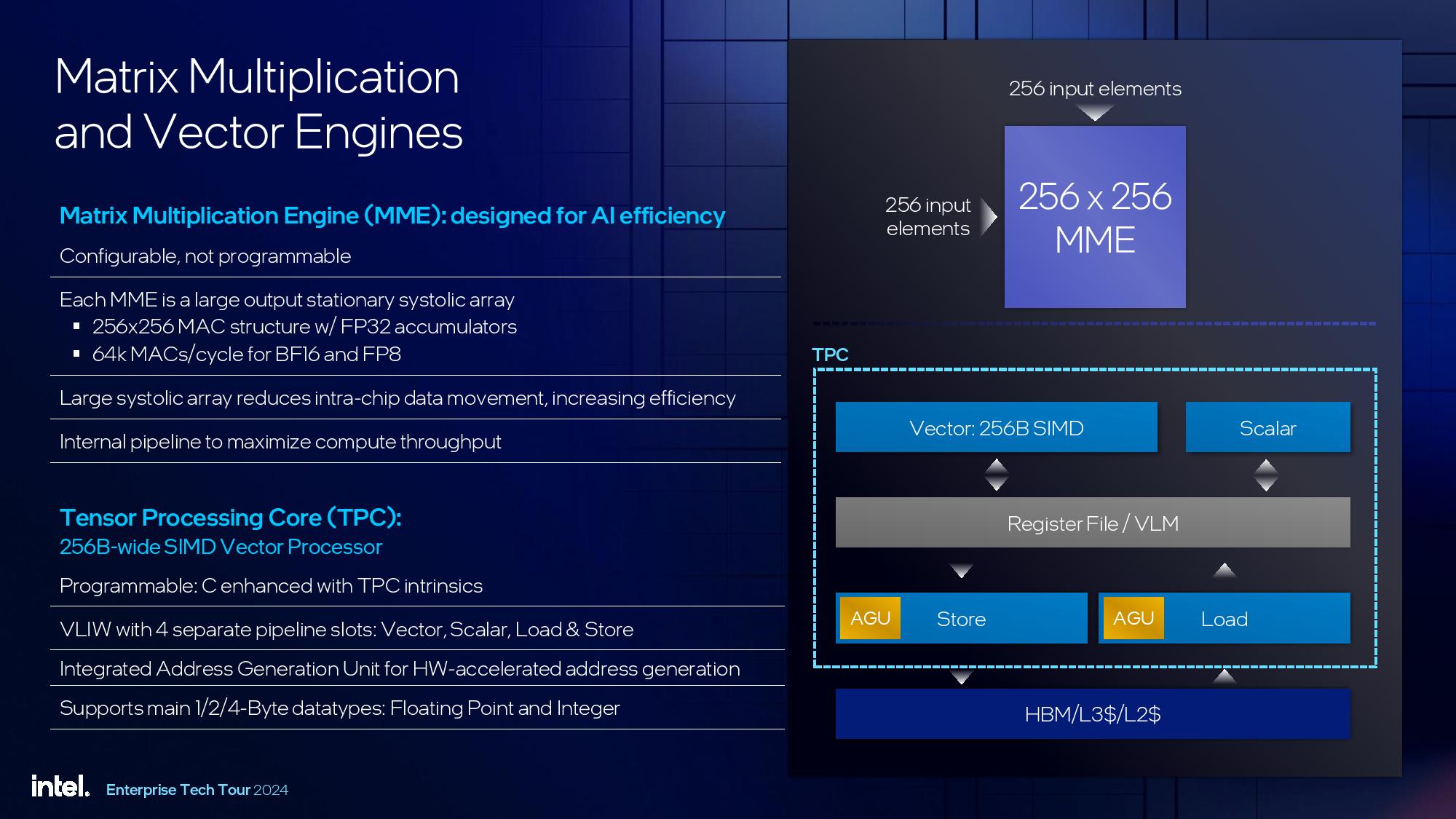

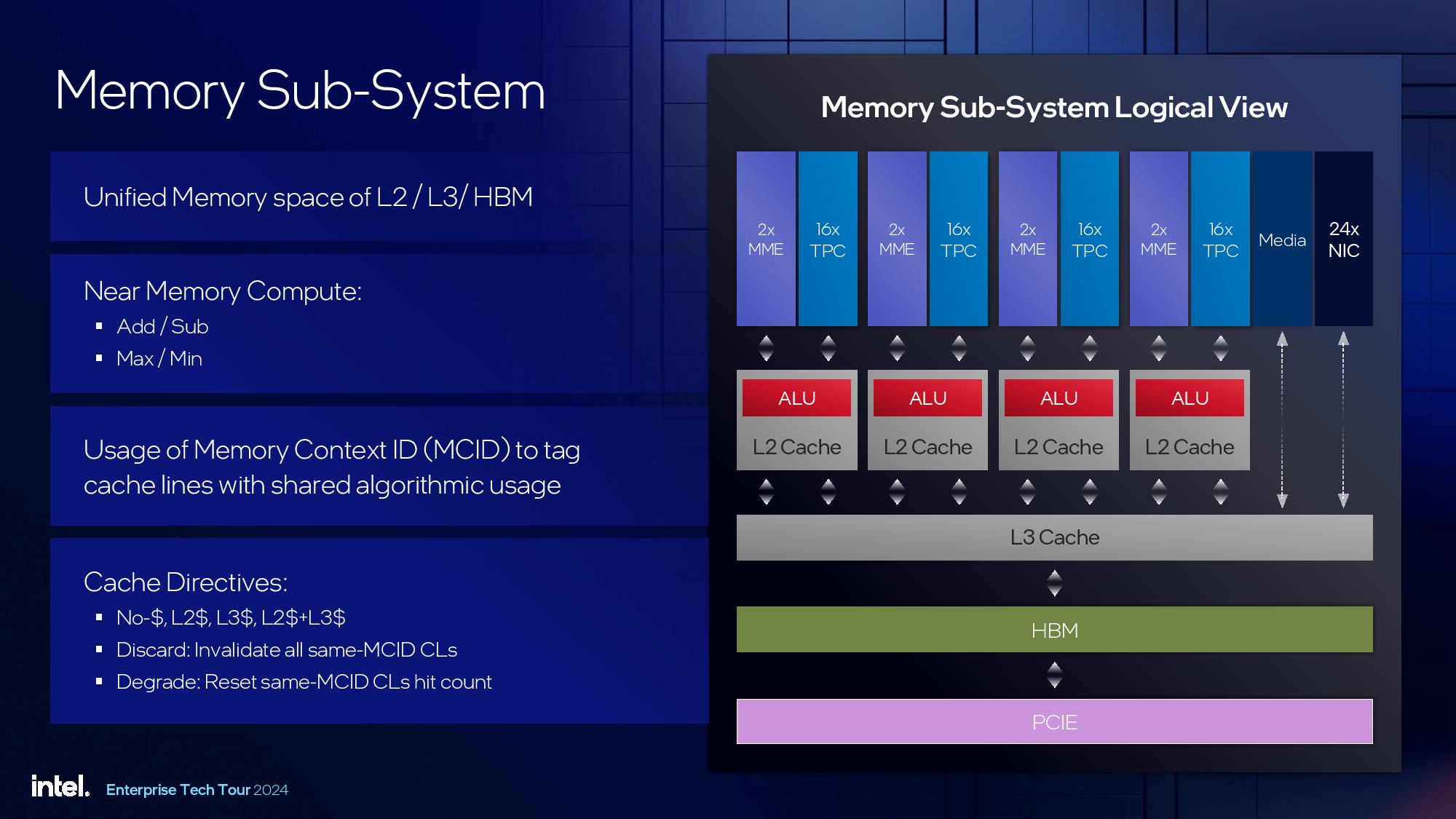

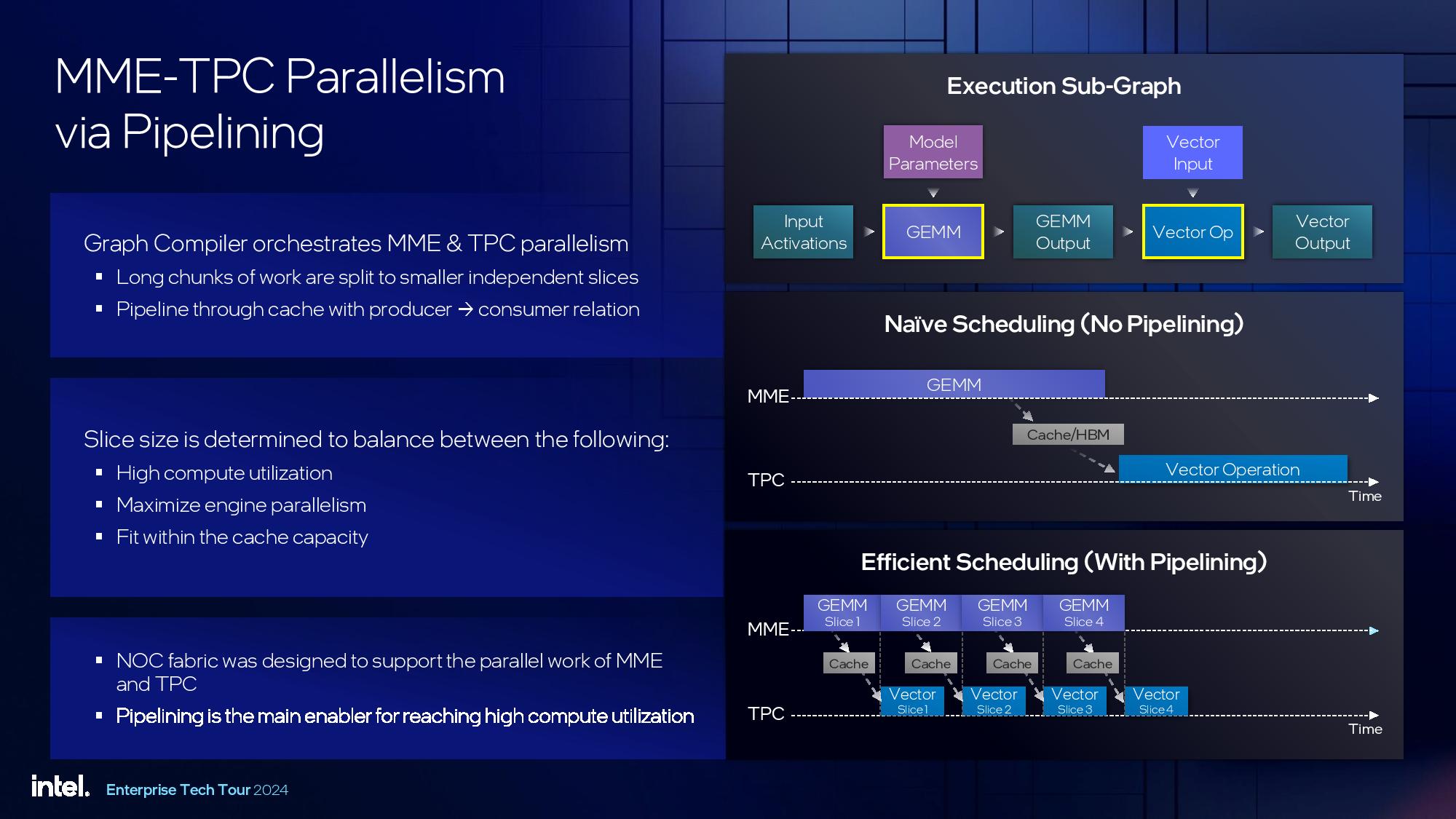

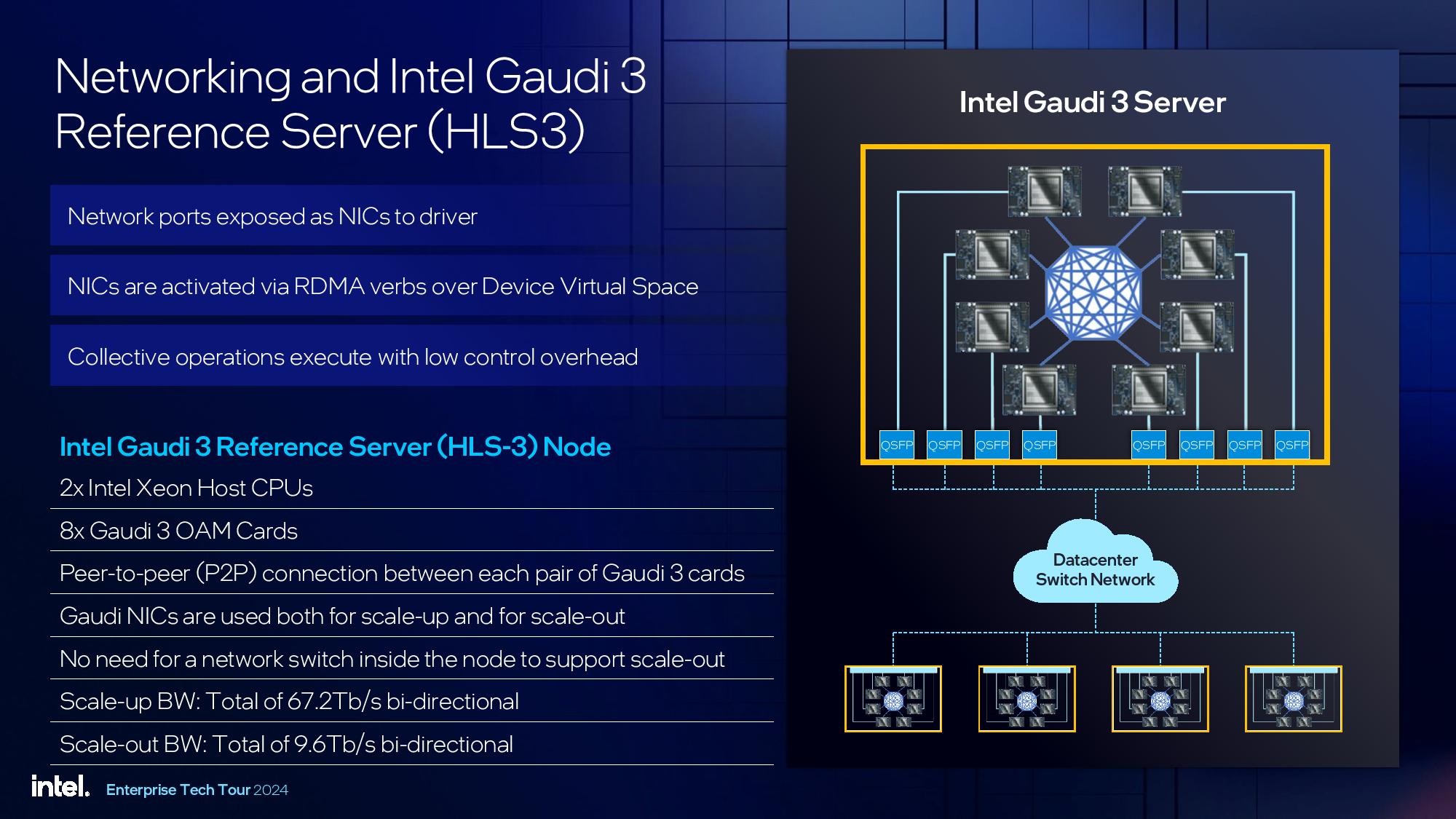

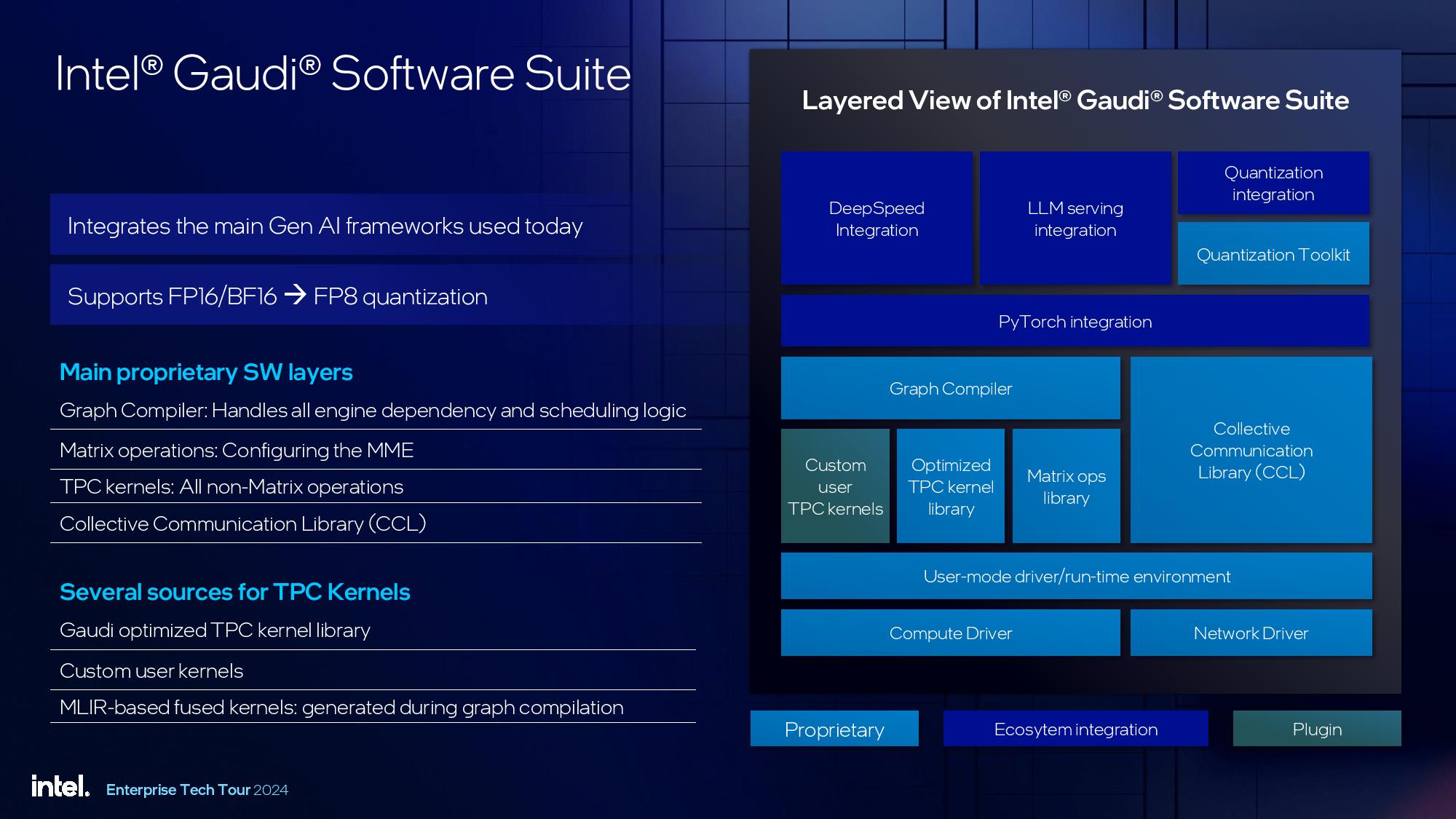

Intel’s Gaudi 3 processor uses two chiplets that pack 64 tensor processor cores (TPCs, 256-bit wide vector processors), eight matrix multiplication engines (MMEs, 256×256 MAC structure with FP32 accumulators), and 96MB of on-die SRAM cache with 19.2 TB/s of bandwidth. Gaudi 3 also integrates twenty-four 200 GbE networking interfaces and 14 media engines, the latter capable of handling H.265, H.264, JPEG, and VP9 to support vision processing. The processor is accompanied by 128GB of HBM2E memory in eight stacks, offering a massive 3.67 TB/s of bandwidth.

Intel’s Gaudi 3 represents a massive improvement over Gaudi 2, which has 24 TPCs and two MMEs and carries 96GB of HBM2E memory. However, it appears that Intel simplified both the TPCs and the MMEs, as Gaudi 3 only supports FP8 matrix operations and BFloat16 matrix and vector operations (i.e., no more FP32, TF32, or FP16).

When it comes to performance, Intel says that Gaudi 3 can offer up to 1,856 BF16/FP8 matrix TFLOPS and up to 28.7 BF16 vector TFLOPS at a TDP of around 600W. Compared to Nvidia’s H100, at least on paper, Gaudi 3 offers slightly lower BF16 matrix performance (1,856 vs 1,979 TFLOPS), roughly half the FP8 matrix performance (1,856 vs 3,958 TFLOPS), and significantly lower BF16 vector performance (28.7 vs 1,979 TFLOPS).
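To make the on-paper gap easier to read, the short Python sketch below divides each Gaudi 3 peak figure by the corresponding H100 figure quoted above. It only restates vendor peak numbers as ratios; the dictionary keys and the `spec_ratios` helper are illustrative names, not anything from Intel or Nvidia, and the results say nothing about measured throughput.

```python
# Peak figures quoted in the article, in TFLOPS (vendor on-paper numbers,
# not measured performance). Keys are illustrative labels.
GAUDI3 = {"bf16_matrix": 1856.0, "fp8_matrix": 1856.0, "bf16_vector": 28.7}
H100 = {"bf16_matrix": 1979.0, "fp8_matrix": 3958.0, "bf16_vector": 1979.0}


def spec_ratios(a: dict[str, float], b: dict[str, float]) -> dict[str, float]:
    """Return a[k] / b[k] for every metric that both accelerators report."""
    return {k: a[k] / b[k] for k in a if k in b}


if __name__ == "__main__":
    for metric, ratio in spec_ratios(GAUDI3, H100).items():
        print(f"{metric}: Gaudi 3 delivers {ratio:.1%} of the H100 figure")
```

With the quoted numbers, Gaudi 3 lands at roughly 94% of H100 in BF16 matrix, about 47% in FP8 matrix, and around 1.5% in the BF16 vector comparison.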

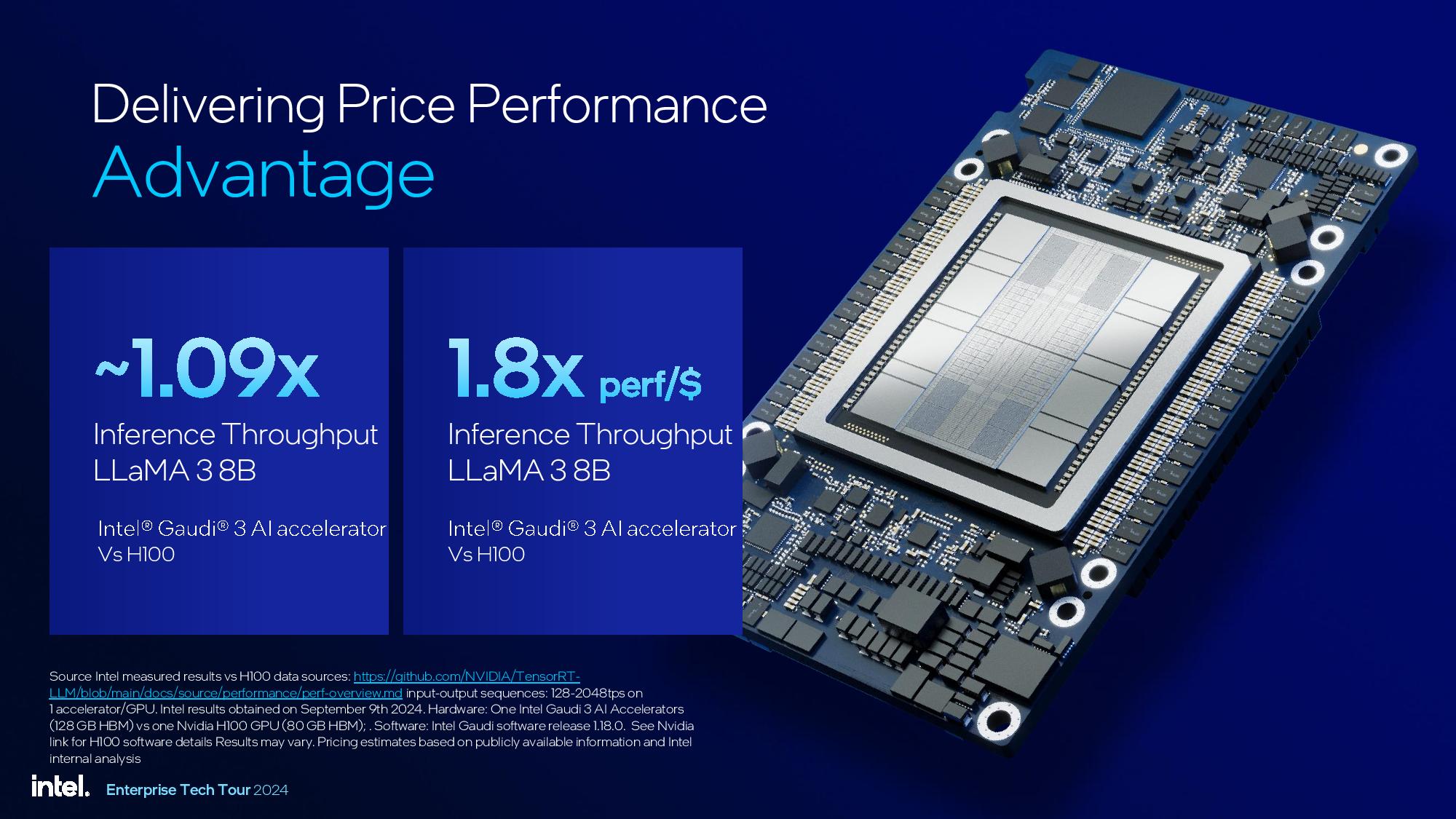

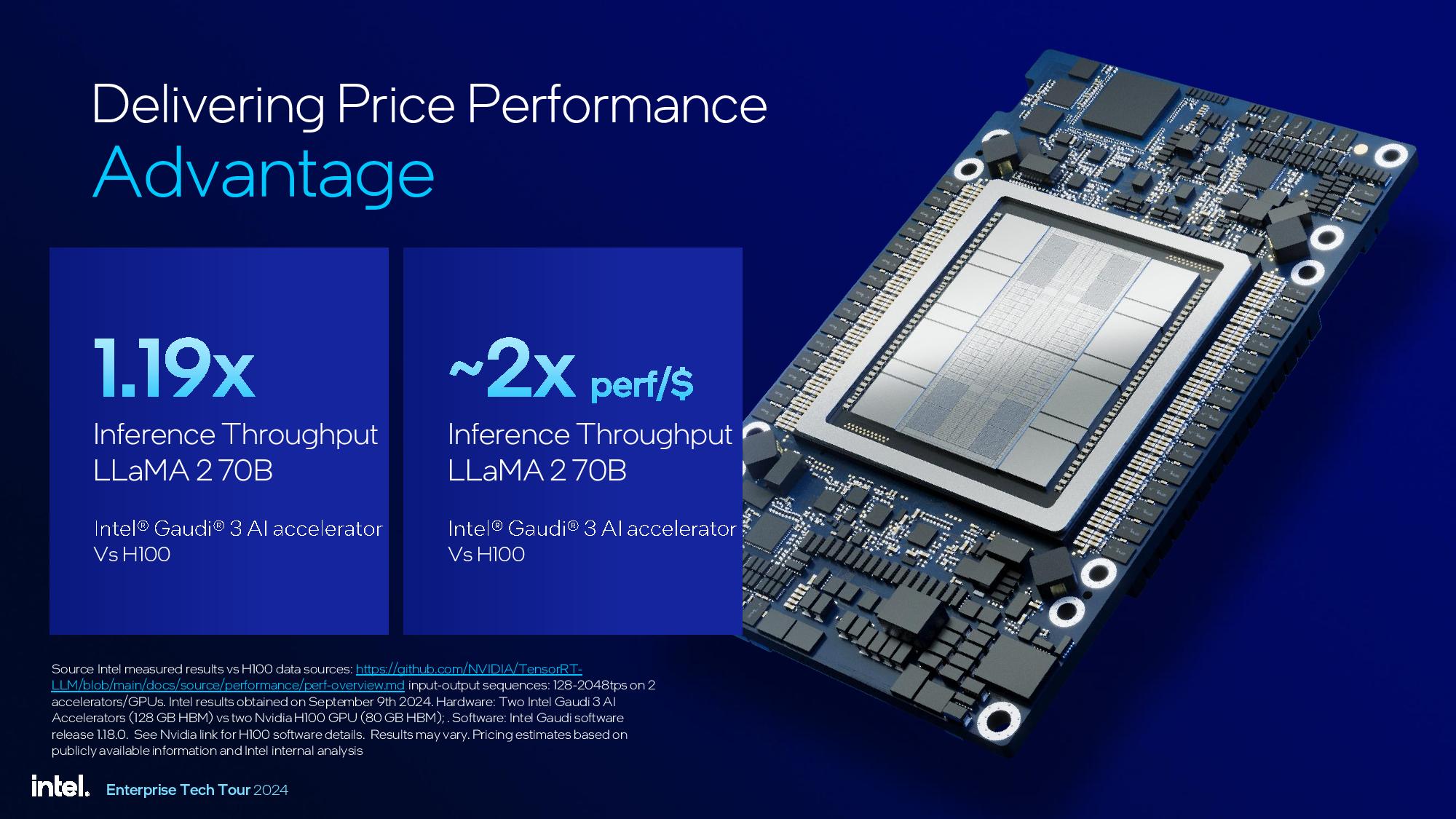

More important than the raw specs will be Gaudi 3's real-world performance, since it needs to compete with AMD’s Instinct MI300-series as well as Nvidia’s H100 and B100/B200 processors. That remains to be seen, as a lot depends on software and other factors. For now, Intel has shown slides claiming that Gaudi 3 offers a significant price-performance advantage over Nvidia’s H100.

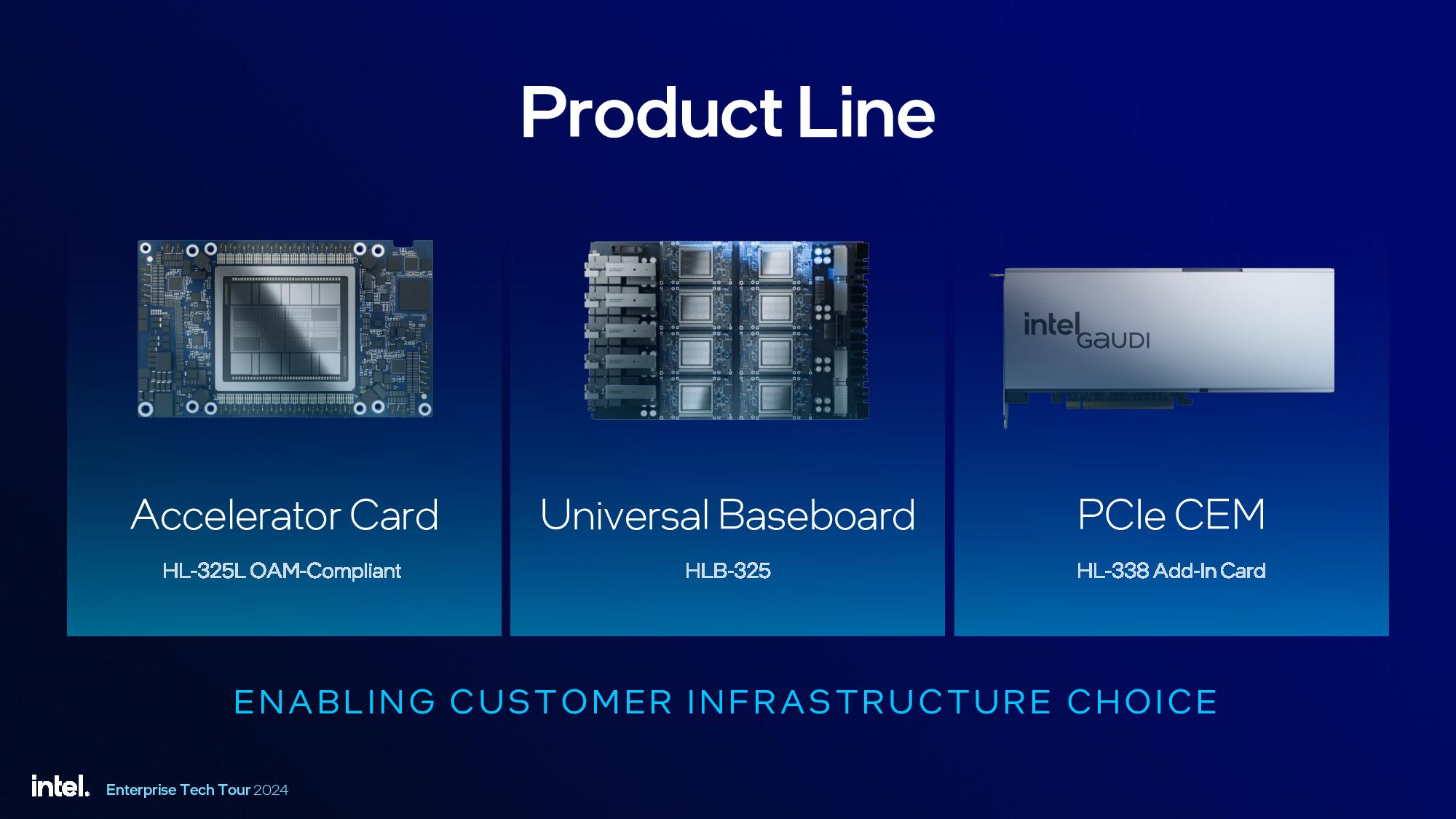

Earlier this year, Intel indicated that an accelerator kit with eight Gaudi 3 processors on a baseboard will cost $125,000, or around $15,625 per processor. By contrast, an Nvidia H100 card is currently available for $30,678, so Intel indeed plans to have a big price advantage over its competitor. Yet, given the potentially massive performance advantages of Blackwell-based B100/B200 GPUs, it remains to be seen whether the blue company can maintain its advantage over its rival.
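The pricing arithmetic above can be combined with the peak BF16 matrix figures into a rough, on-paper price-performance number. The Python sketch below assumes the kit price is split evenly across its eight accelerators and uses a hypothetical "peak TFLOPS per $1,000" metric; it ignores system, networking, power, and software costs, so it is a back-of-the-envelope illustration rather than the TCO comparison Intel is pitching.

```python
# Figures quoted in the article; prices are list/street prices, not TCO.
KIT_PRICE_USD = 125_000  # eight-Gaudi 3 baseboard kit (Intel guidance)
CHIPS_PER_KIT = 8
H100_PRICE_USD = 30_678  # H100 card price cited in the article

# On-paper peak BF16 matrix throughput, in TFLOPS.
GAUDI3_BF16_TFLOPS = 1856.0
H100_BF16_TFLOPS = 1979.0


def per_chip_price(kit_price: float, chips: int) -> float:
    """Assumption: the kit price is spread evenly across its accelerators."""
    return kit_price / chips


def tflops_per_kilodollar(tflops: float, price_usd: float) -> float:
    """Hypothetical metric: peak TFLOPS per $1,000 of accelerator price."""
    return tflops / (price_usd / 1000)


if __name__ == "__main__":
    gaudi3_price = per_chip_price(KIT_PRICE_USD, CHIPS_PER_KIT)
    gaudi3 = tflops_per_kilodollar(GAUDI3_BF16_TFLOPS, gaudi3_price)
    h100 = tflops_per_kilodollar(H100_BF16_TFLOPS, H100_PRICE_USD)
    print(f"Gaudi 3 per chip: ${gaudi3_price:,.0f}")
    print(f"Gaudi 3: {gaudi3:.1f} TFLOPS per $1k; H100: {h100:.1f} TFLOPS per $1k")
    print(f"On-paper BF16 advantage: {gaudi3 / h100:.2f}x")
```

On these assumptions Gaudi 3 works out to $15,625 per chip and roughly 1.8 times the peak BF16 TFLOPS per dollar of an H100, an advantage that would shrink or vanish for FP8 workloads, where the H100's quoted peak is about twice as high.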

“Demand for AI is leading to a massive transformation in the datacenter, and the industry is asking for choice in hardware, software, and developer tools,” said Justin Hotard, Intel executive vice president and general manager of the Data Center and Artificial Intelligence Group. “With our launch of Xeon 6 with P-cores and Gaudi 3 AI accelerators, Intel is enabling an open ecosystem that allows our customers to implement all of their workloads with greater performance, efficiency, and security.”

Intel’s Gaudi 3 AI accelerators will be available from IBM Cloud and Intel Tiber Developer Cloud. Systems based on Intel’s Xeon 6 and Gaudi 3 will also be generally available from Dell, HPE, and Supermicro in the fourth quarter, with systems from Dell and Supermicro shipping in October and machines from HPE shipping in December.