
Johns Hopkins and Stanford robots learn surgery by watching videos – SiliconANGLE

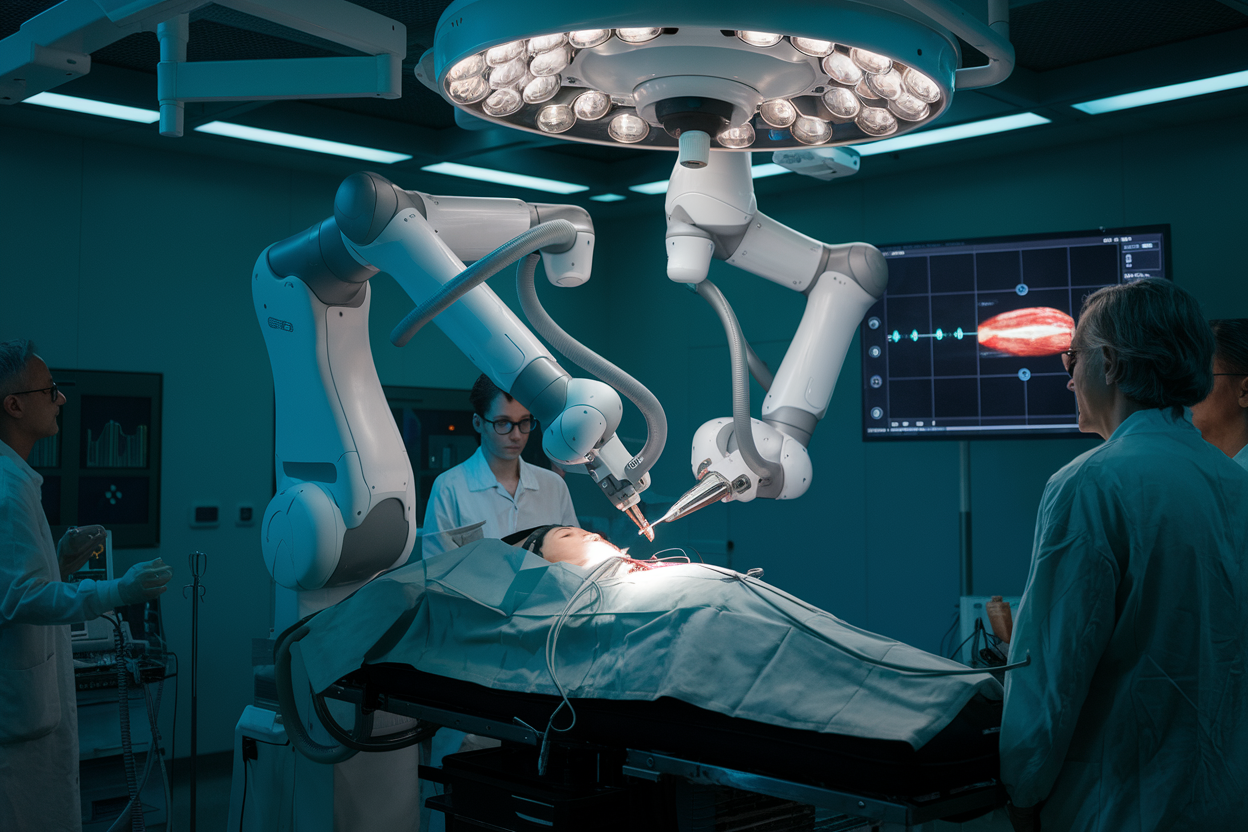

Researchers from Johns Hopkins University and Stanford University have revealed details of how they are using videos to train robots to perform surgical tasks with the skill of human doctors, in what could be a significant step forward in medical robotics.

Robotics in surgery is not new; robots have assisted in a range of procedures for years. What makes the new work from Johns Hopkins and Stanford interesting is its use of imitation learning, which trains robots through observation rather than explicit programming.

The researchers equipped their existing da Vinci Surgical System with a machine-learning model capable of analyzing surgical procedures recorded by cameras mounted on the robot’s instruments. The videos, captured during real surgeries, provide a detailed visual and kinematic representation of the tasks performed by human surgeons.

To train the robots, the team used a deep learning architecture similar to those found in advanced artificial intelligence language models but adapted it to process surgical data. The adapted system analyzes video inputs alongside motion data to learn the precise movements required to complete tasks such as needle manipulation, tissue handling and suturing.
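The article does not detail the model beyond this, but the core idea of imitation learning can be sketched in its simplest form, behavior cloning: treat recorded demonstrations as pairs of observations and expert actions, then fit a policy that reproduces the actions by supervised learning. The Python/NumPy example below is a hypothetical illustration, not the researchers' code. It replaces their language-model-style deep network with a plain linear least-squares fit, and the feature sizes, synthetic data and function names (`make_demonstrations`, `behavior_clone`, `act`) are all invented for the example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical sizes: an 8-dim video embedding (stand-in for an image encoder's
# output), a 3-dim kinematic state, and a 3-dim motion command.
N_SAMPLES, VIDEO_DIM, KIN_DIM, ACT_DIM = 200, 8, 3, 3
OBS_DIM = VIDEO_DIM + KIN_DIM


def make_demonstrations(expert_weights, noise=0.01):
    """Toy 'recorded surgeries': observations paired with the expert's actions."""
    video = rng.normal(size=(N_SAMPLES, VIDEO_DIM))
    kinematics = rng.normal(size=(N_SAMPLES, KIN_DIM))
    obs = np.hstack([video, kinematics])  # video and motion data side by side
    noise_term = rng.normal(scale=noise, size=(N_SAMPLES, ACT_DIM))
    actions = obs @ expert_weights + noise_term
    return obs, actions


def behavior_clone(obs, actions):
    """Fit a linear policy by least squares (a stand-in for the deep network)."""
    weights, *_ = np.linalg.lstsq(obs, actions, rcond=None)
    return weights


def act(weights, observation):
    """Map one observation to a predicted motion command."""
    return observation @ weights


expert = rng.normal(size=(OBS_DIM, ACT_DIM))  # hidden "surgeon" behavior
obs, actions = make_demonstrations(expert)
policy = behavior_clone(obs, actions)

new_obs = rng.normal(size=OBS_DIM)
print("policy:", act(policy, new_obs))
print("expert:", act(expert, new_obs))
```

With enough clean demonstrations, the fitted weights land close to the hidden expert's, so the two printed commands nearly match. A real surgical policy must learn a far more complex, nonlinear mapping from raw video, which is where a large neural network like the one described above comes in.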

The key idea is that by focusing on relative movements (adjusting based on the robot’s current position rather than following rigid, predefined paths), the model works around the limited accuracy of the da Vinci system’s kinematic data.
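To see why relative movements help, consider a toy model in which every arm's kinematic reading is off by a constant, arm-specific bias. This error model and all the numbers below are assumptions made for illustration; real kinematic errors on the da Vinci are more complex. The Python sketch compares replaying a demonstration as absolute target positions with replaying it as per-step deltas applied to the tool's current position.

```python
import numpy as np

# Hypothetical ground-truth tool-tip path for a short motion (millimeters).
TRUE_PATH = np.array([[0.0, 0.0, 0.0],
                      [1.0, 0.5, 0.0],
                      [2.0, 1.5, 0.2],
                      [3.0, 2.0, 0.4]])


def reported(path, bias):
    """Assumed error model: kinematic reading = true position + constant bias."""
    return path + bias


def to_relative_actions(readings):
    """Per-step deltas between readings; a constant bias cancels in the subtraction."""
    return np.diff(readings, axis=0)


def replay_absolute(demo_readings, robot_bias):
    """Drive until the robot's own reading equals each recorded reading.

    Since reading = true + robot_bias, the true position reached is the
    recorded reading minus robot_bias.
    """
    return demo_readings - robot_bias


def replay_relative(start, deltas):
    """Apply each delta to wherever the tool currently is, starting at `start`."""
    return start + np.vstack([np.zeros(3), np.cumsum(deltas, axis=0)])


demo_bias = np.array([0.8, -0.3, 0.1])   # hypothetical error on the recording arm
robot_bias = np.array([-0.4, 0.6, 0.0])  # hypothetical error on the executing arm

demo = reported(TRUE_PATH, demo_bias)
absolute = replay_absolute(demo, robot_bias)
relative = replay_relative(TRUE_PATH[0], to_relative_actions(demo))

print("absolute replay max error:", np.abs(absolute - TRUE_PATH).max())
print("relative replay max error:", np.abs(relative - TRUE_PATH).max())
```

The absolute replay lands off target by the difference between the two biases (about 1.2 mm on the worst axis), while the relative replay retraces the path to within floating-point rounding, because a constant bias cancels out when consecutive readings are subtracted.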

Mimicry is one thing, but the model goes further by including a feedback mechanism that lets the robot evaluate its own performance. In simulated environments, the system compares its actions against the ideal trajectories demonstrated in the training videos. This allows the robot to refine its technique and achieve levels of precision and dexterity comparable to highly experienced surgeons, all without the need for constant human oversight during training.
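The article does not say how the feedback mechanism scores or corrects the robot's motion. One simple way to picture it: measure how far an executed path strays from the demonstrated one, then nudge the controller to shrink that gap and try again. The Python sketch below does this in a toy simulator; the root-mean-square error metric, the constant-offset correction, the `execute` callback and the drift values are all hypothetical choices, not the researchers' method.

```python
import numpy as np


def resample(path, n):
    """Linearly resample a (T, D) path to n points on a normalized time axis.

    Assumes the original samples are evenly spaced in time.
    """
    path = np.asarray(path, dtype=float)
    old_t = np.linspace(0.0, 1.0, len(path))
    new_t = np.linspace(0.0, 1.0, n)
    return np.column_stack([np.interp(new_t, old_t, path[:, d])
                            for d in range(path.shape[1])])


def trajectory_error(executed, reference, n=50):
    """Root-mean-square distance between two paths after resampling both to n points."""
    a, b = resample(executed, n), resample(reference, n)
    return float(np.sqrt(np.mean(np.sum((a - b) ** 2, axis=1))))


def refine_offset(execute, reference, iterations=10, gain=0.5):
    """Toy self-correction loop: learn a constant offset that shrinks the error.

    `execute(offset)` is a hypothetical simulator call returning the executed path.
    """
    reference = np.asarray(reference, dtype=float)
    offset = np.zeros(reference.shape[1])
    history = []
    for _ in range(iterations):
        executed = execute(offset)
        history.append(trajectory_error(executed, reference))
        # Move the offset against the average deviation from the demonstration.
        deviation = executed - resample(reference, len(executed))
        offset -= gain * deviation.mean(axis=0)
    return offset, history


reference = np.array([[0.0, 0.0, 0.0], [1.0, 0.5, 0.0],
                      [2.0, 1.5, 0.2], [3.0, 2.0, 0.4]])
drift = np.array([0.5, -0.2, 0.0])  # hypothetical systematic error in simulation


def simulated_execute(offset):
    """Hypothetical simulator: the robot follows the reference plus drift and offset."""
    return reference + drift + offset


offset, history = refine_offset(simulated_execute, reference)
print([round(e, 4) for e in history])
```

Because the simulated drift is constant and the gain is 0.5, the error halves on every pass. A real system faces noisy, state-dependent errors and would need corrections far richer than a single offset.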

To ensure that the robots could generalize their skills, the model was also exposed to a diverse range of surgical styles, environments and tasks. According to the researchers, the approach enhances the system’s adaptability by allowing it to handle the nuances and unpredictability of real-world surgical procedures, which can be highly variable depending on the patient and surgeon.

“In our work, we’re not trying to replace the surgeon. We just want to make things easier for the surgeon,” Axel Krieger, an associate professor at Johns Hopkins Whiting School of Engineering who supervised the research, told the Washington Post. “Imagine, do you want a tired surgeon, where you’re the last patient of the day and the surgeon is super-exhausted? Or do you want a robot that is doing a part of that surgery and really helping out the surgeon?”

Image: SiliconANGLE/Ideogram
