Tech

Nvidia GB200 packs 4 GPUs, 2 CPUs in to 5.4 kW board

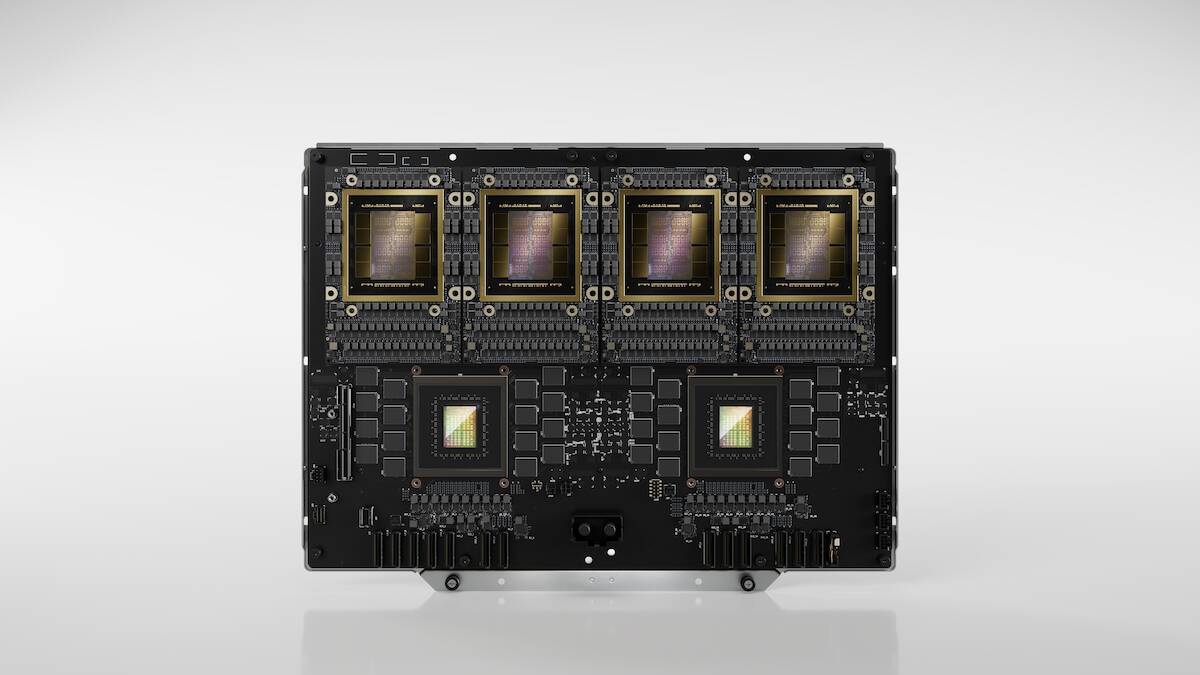

SC24 Nvidia’s latest HPC and AI chip is a massive single board computer packing four Blackwell GPUs, 144 Arm Neoverse cores, up to 1.3 terabytes of HBM, and a scorching 5.4 kilowatt TDP.

In many respects, the all-new GB200 NVL4 form factor, detailed at Supercomputing in Atlanta this week, looks like two of Nvidia’s upcoming Grace-Blackwell Superchips that have been stitched together.

However, unlike the 2.7 kilowatt GB200 boards we’ve looked at previously, the speedy NVLink communications found on Nvidia’s NVL4 configuration are limited to the four Blackwell GPUs and two Grace CPUs on the board. All I/O going on or off the board will be handled by your typical Ethernet or InfiniBand NICs.

The GB200 NVL4 is essentially two GB200 Superchips glued together minus the off-board NVLink – Click to enlarge

While this might seem like an odd choice, it actually aligns pretty neatly with how many HPC systems have been constructed. For example, the Cray EX Blades found in Frontier featured one third-gen Epyc CPU alongside four MI250X accelerators.

This also means that the major HPC system builders such as HPE, Eviden, and Lenovo aren’t limited to Nvidia’s proprietary interconnects for scaling up and out. Both HPE and Eviden have their own interconnect tech.

In fact, HPE has already teased new EX systems, due out in late 2025, that’ll make use of Nvidia’s GB200 NVL4 boards. The EX154n, announced last week, will pack up to 56 of the extra-wide Superchips – one per blade – into its massive liquid-cooled HPC cabinets.

In this configuration, a single EX cabinet can churn out over 10 petaFLOPS of FP64 vector or matrix compute. That may sound like a lot, but if highly precise scientific computing is all you care about, HPE’s AMD-based systems do offer higher floating point performance.

The MI300A APUs found in Cray’s EX255a blades each boast 61.3 teraFLOPS of vector FP64 or 122.6 teraFLOPS of matrix FP64 compared to the 45 teraFLOPS of double-precision vector/matrix performance seen in each Blackwell GPU.

For AI-centric workloads, the performance gap is much narrower as each MI300A can output 3.9 petaFLOPS of sparse FP8 performance. So, for a fully packed EX cabinet, you’d be looking at roughly 2 exaFLOPS of FP8 grunt compared to about 2.2 exaFLOPS from the Blackwell system using less than half the GPUs – double that if you can take advantage of FP4 datatypes not supported by the MI300A.

While HPE Cray is among the first to announce support for Nvidia’s NVL4 form factor, we don’t expect it’ll be long before Eviden, Lenovo, and others start rolling out compute blades and servers of their own based on the design.

H200 PCIe cards get an NVL upgrade

Alongside Nvidia’s double-wide GB200 NVL4, Nvidia has also announced general availability for its PCIe-based H200 NVL config.

But before you get too excited, similar to the H100 NVL we got in early 2023, the H200 NVL is essentially just a bunch of double-width PCIe cards – up to four this time around – which have been glued together with an NVLink bridge.

As with Nvidia’s larger SXM-based DGX and HGX platforms, this NVLink bridge allows the GPUs to pool compute and memory resources to handle larger tasks without bottlenecking on slower PCIe 5.0 x16 interfaces that cap out at roughly 128 GBps of bidirectional bandwidth compared to 900 GBps for NVLink.

Maxed out, the H200 NVL can support up to 564 GB of HBM3e memory and 13.3 petaFLOPS of peak FP8 performance with sparsity. Again, this is because it’s just four H200 PCIe cards stuck together with a really fast interconnect bridge.

However, all of that perf comes at the expense of power and thermals. Each H200 card in the four-stack is rated for up to 600 W of power or 2.4 kilowatts in total.

With that said, the approach does have its advantages. For one, these cards can be deployed in just about any 19-inch rack server with sufficient space, power, and airflow to keep them cool. ®