Surgical robot learns to automate basic tasks via imitation learning

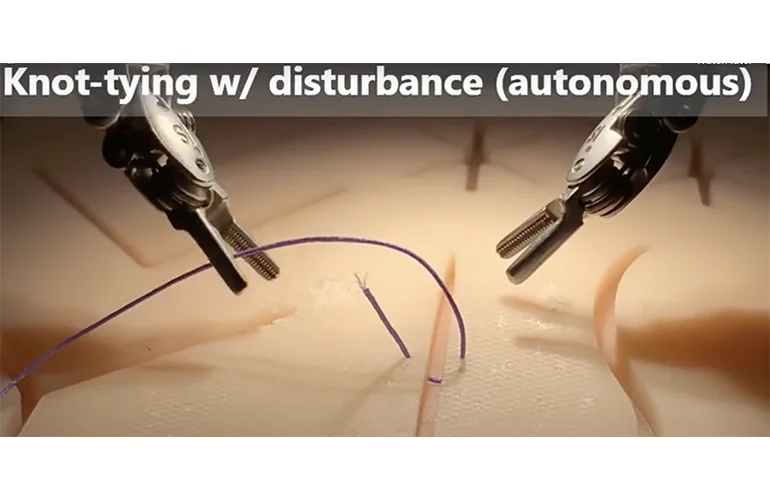

The next frontier of surgical robotics may well be autonomous, or at least far more self-directed than current systems. Traditional platforms rely on joystick-like hand controllers, which keep surgeons firmly in command of each movement. Yet recent experiments at Johns Hopkins and Stanford point to a significant shift: imitation learning methods that enable robots to perform fundamental surgical tasks with minimal human intervention. The research team showed that robots can learn complex maneuvers from video recordings of experienced surgeons. By combining visual inputs with approximate kinematic references, the robots build procedural models capable of carrying out a range of delicate operations, including tissue manipulation, needle handling, and knot-tying.

The Washington Post recently highlighted the research (paywalled), which is awaiting publication in the Proceedings of Machine Learning Research.

In the paper's abstract, the researchers also speak to the promise of tapping the “large repository of clinical data, which contains approximate kinematics” in robot learning “without further corrections.”

In studies using the da Vinci Research Kit (dVRK), policies based on camera-centric, absolute positioning repeatedly failed to manipulate tissue or handle sutures when confronted with the system’s well-known kinematic inaccuracies. This resulted in near-zero success for some tasks. By contrast, relative action formulations, in which motions are defined relative to the robot’s current end-effector or camera frame, achieved substantially better results. Certain subtasks, such as tissue lifting and needle pickup, often succeeded in all trials, while full knot-tying reached 90% success (18 of 20 trials).
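To make the idea of a relative action concrete, here is a minimal Python sketch. It is not the researchers' implementation: the function names, the planar (2D) simplification, and the numbers are assumptions chosen purely for illustration. It shows how a target that would otherwise be given in absolute coordinates can instead be expressed as a displacement in the tool's own frame.

```python
import math

def rotation_2d(theta):
    """Return a 2x2 rotation matrix (nested lists) for an angle in radians."""
    c, s = math.cos(theta), math.sin(theta)
    return [[c, -s], [s, c]]

def to_tool_frame(delta_world, tool_rotation):
    """Express a world-frame displacement in the tool frame (R^T @ delta)."""
    r = tool_rotation
    return [
        r[0][0] * delta_world[0] + r[1][0] * delta_world[1],
        r[0][1] * delta_world[0] + r[1][1] * delta_world[1],
    ]

def relative_action(current_pos, target_pos, tool_rotation):
    """Hypothetical helper: displacement to the target, seen from the tool."""
    delta_world = [t - c for t, c in zip(target_pos, current_pos)]
    return to_tool_frame(delta_world, tool_rotation)

# Illustrative values only: the tool sits at (1, 1), rotated 90 degrees,
# and the next waypoint is one unit "up" in world coordinates.
tool_rotation = rotation_2d(math.pi / 2)
action = relative_action([1.0, 1.0], [1.0, 2.0], tool_rotation)
print(action)  # approximately [1.0, 0.0]: one unit along the tool's own x-axis
```

An absolute policy would output the waypoint `(1, 2)` directly; the relative version outputs "move one unit forward along the tool axis," which stays meaningful even if the robot's estimate of where the tool is in space is off.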

This demonstration underscores just how far surgical robotics has come—illustrating the leap from basic, teleoperated assistance to a more autonomous system that can quickly adapt and correct its own movements. Equipped with relative action capabilities, the dVRK-based setup avoids relying on absolute position data that often drifts or loses accuracy. Instead, it reorients each motion according to the current location of its end-effector or camera frame, giving the robot the finesse to handle sensitive tissues and delicate instruments.
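A toy calculation can illustrate why relative motions tolerate drifting position data. The sketch below rests on a strong simplifying assumption that does not come from the paper: the position error is modeled as a constant offset within one episode that differs between the demonstration and the later execution. All names and numbers are hypothetical.

```python
def reported(true_pos, bias):
    """Position the robot reports, assuming a constant additive bias."""
    return [p + b for p, b in zip(true_pos, bias)]

def execute_absolute(command, bias):
    """The robot drives its reported position to the command,
    so the true position ends up off by the current bias."""
    return [c - b for c, b in zip(command, bias)]

def execute_relative(true_pos, delta):
    """Apply a displacement from wherever the tool actually is."""
    return [p + d for p, d in zip(true_pos, delta)]

true_start = [0.0, 0.0, 0.0]
true_goal = [10.0, 0.0, 5.0]    # millimeters, illustrative
demo_bias = [2.0, -1.0, 0.0]    # assumed error while recording the demo
exec_bias = [-3.0, 4.0, 1.0]    # assumed (different) error at execution

# Absolute label: the reported goal position from the demonstration.
abs_label = reported(true_goal, demo_bias)
abs_result = execute_absolute(abs_label, exec_bias)

# Relative label: reported goal minus reported start; the demo bias cancels.
rel_label = [g - s for g, s in zip(reported(true_goal, demo_bias),
                                   reported(true_start, demo_bias))]
rel_result = execute_relative(true_start, rel_label)

print(abs_result)  # [15.0, -5.0, 4.0], several millimeters from the goal
print(rel_result)  # [10.0, 0.0, 5.0], matches the true goal
```

Under this simplified model the offset drops out of the relative label entirely, while the absolute label inherits both the recording error and the execution error. Real kinematic error on a cable-driven arm is not a constant offset, so this only conveys the intuition.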

Meanwhile, Intuitive—the developer of the da Vinci Surgical System—has been working on more sophisticated approaches to control, with CEO Gary Guthart and UC Berkeley professor Ken Goldberg introducing the concept of “augmented dexterity.” Under this framework, a surgical robot can handle specific subtasks autonomously (e.g., suturing, debridement) while keeping the surgeon close at hand for critical decisions or complex maneuvers. By coupling advanced imaging and AI-driven guidance with real-time human supervision, Intuitive aims to bridge the gap between pure teleoperation and fully automated functionality. This effort includes ongoing hardware and software refinements (such as 3D modeling, procedure mapping, and AI-based tissue recognition) that could free surgeons from routine elements of a procedure—further boosting efficiency and consistency in operating rooms.

The advances in surgical robotics come at a time of significant growth in robotics more generally. Throughout 2024, the field saw major strides in humanoid robot development, with companies like Boston Dynamics introducing an upgraded electric Atlas and Tesla highlighting the second-generation version of its Optimus robot. Meanwhile, startups such as Figure rolled out units capable of handling labor-intensive tasks in manufacturing and logistics. In addition, NVIDIA announced Jetson Thor, a compact computer designed to power humanoid robots.