What does machine unlearning mean for AI?

Key Takeaways

- Deep learning models can memorize training data verbatim, making it difficult to remove sensitive information without starting from scratch.

- Machine unlearning is a growing field aiming to remove specific data from trained models, but is challenging and costly.

- Various techniques, such as exact unlearning and approximate unlearning, exist to mitigate the impact of removing data from models.

Deep learning models have powered the AI ‘revolution’ of the last two years, giving us access to everything from flashy new search tools to ridiculous image generators. But these models, amazing as they are, can effectively memorize training information and repeat it verbatim, which is a potential problem. Not only that, once a model like GPT-4 is trained, it’s extremely difficult to truly remove data from it. Say your ML model was accidentally trained on data that contained someone’s bank details: how could you ‘un-train’ the model without starting from scratch?

Luckily, there’s an area of research working on a solution. Machine unlearning is a developing but increasingly interesting field of research, with some serious players starting to get involved. So what is machine unlearning, and can LLMs ever truly forget what they’ve once been given?

How models are trained

A large dataset is needed for any large LLM or ML model

As we’ve covered here before, machine learning models use a large body of training data (sometimes known as a corpus) to generate model weights – i.e. to pre-train the model. It’s this data that directly defines what a model is capable of ‘knowing’. After this pre-training stage, a model is refined to improve its results. In the case of transformer-based LLMs like ChatGPT, this refinement often takes the form of RLHF (reinforcement learning from human feedback), where humans provide direct feedback to the model to improve its answers.

Training and running one of these models comes with extraordinary costs. A report by The Information earlier this year cited ChatGPT daily operating costs of around $700,000. Training these models requires massive GPU compute power, which is both expensive and in increasingly short supply.

Enter machine unlearning

What if we want to remove a bit of training data?

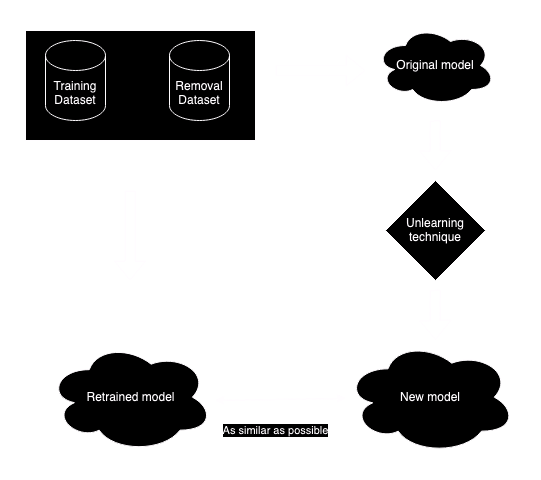

Machine unlearning is really as it sounds: can we remove a specific bit of data from an already trained model? It is developing into a hugely important field of research for deep learning (Google recently announced its first machine unlearning challenge). While it might sound simple, it’s far from easy. The trivial answer is to retrain the model, minus the data to be removed. However, as we’ve mentioned, this is often prohibitively expensive and/or time-consuming. For privacy-centric federated learning models, a second problem exists – the original dataset may no longer be available. The real objective of machine unlearning is to produce a model as close as possible to one retrained from scratch without the problematic data, but without paying the cost of that full retraining.

Reproduced from [1]

If we can’t retrain the model, can we remove specific weights in order to hinder the model’s knowledge of a target set of data? The likely answer here is also no. Firstly, ensuring that a target dataset has been completely removed by intervening directly in the model is almost impossible; it’s likely that fragments of the target data would remain. Secondly, the impact on the model’s overall performance is similarly difficult to quantify, and there may be adverse effects not only on overall performance but on other specific areas of the model’s knowledge. For that reason (and others), it’s generally considered impractical to directly remove elements from a model.

This technique of removing specific model parameters is sometimes known as model shifting.

Techniques already exist for machine unlearning

There are several existing algorithms for machine unlearning, which can be largely broken down into a few types. Exact unlearning attempts to make the unlearned model indistinguishable from one retrained without the target dataset. This is the most extreme form of unlearning, and provides the strongest guarantee that no unwanted data can be extracted. Strong unlearning is easier to implement than exact unlearning, as it only requires the two models to be approximately indistinguishable. However, it does not guarantee that no information from the removed dataset remains. Finally, weak unlearning is the easiest to implement, but does not guarantee that the expunged training data is no longer held internally. Put together, strong and weak unlearning are sometimes known as approximate unlearning.
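For readers who like to see the guarantees written down, here is one common way to state them. The notation is our own assumption for illustration and does not come from the sources above: A is the training algorithm, D the full training set, D_f the data to forget, U the unlearning procedure, and T any set of possible models.

```latex
% Exact unlearning: the unlearned model and the retrained model
% follow the same distribution.
\[
\Pr\big[\,U(A(D), D, D_f) \in T\,\big]
  = \Pr\big[\,A(D \setminus D_f) \in T\,\big]
  \quad \text{for all } T
\]

% Approximate unlearning: the two distributions only need to agree
% up to a tolerance (\varepsilon, \delta), in both directions.
\[
\begin{aligned}
\Pr\big[\,U(A(D), D, D_f) \in T\,\big]
  &\le e^{\varepsilon}\,\Pr\big[\,A(D \setminus D_f) \in T\,\big] + \delta \\
\Pr\big[\,A(D \setminus D_f) \in T\,\big]
  &\le e^{\varepsilon}\,\Pr\big[\,U(A(D), D, D_f) \in T\,\big] + \delta
\end{aligned}
\]
```

Read this way, strong unlearning would roughly apply the approximate condition to the model's parameters, while weak unlearning would apply it only to the model's outputs. Smaller values of ε and δ mean the unlearned model is harder to tell apart from a retrained one.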

Machine unlearning techniques

Now this gets a bit technical, but we’ll run through some common techniques for machine unlearning. Exact unlearning methods are the hardest to implement on LLMs, and often work best on simple, structured models. This might include techniques like reverse nearest neighbors, which attempts to compensate for the removal of a data point by adjusting its neighbors. K-nearest neighbors is a similar idea, but removes data points based on their proximity to a target bit of data instead of adjusting them. Another common idea is to divide the dataset into subsets, and train a series of partial models that can later be combined (often known as sharding). If a specific bit of data needs to be removed, only the partial model trained on the subset which contains it needs to be retrained, and it can then be recombined with the other partial models.
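To make the sharding idea concrete, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `CentroidModel` and `ShardedEnsemble` names are hypothetical, the "model" is a toy nearest-centroid classifier standing in for something expensive, and records are routed to shards by `record_id % num_shards`. The point is only that forgetting one record retrains a single shard rather than the whole ensemble.

```python
from collections import Counter, defaultdict


class CentroidModel:
    """Toy nearest-centroid classifier standing in for an expensive model."""

    def fit(self, rows):
        totals = defaultdict(lambda: [0.0, 0.0, 0])  # label -> [sum_x, sum_y, count]
        for _record_id, (x, y), label in rows:
            t = totals[label]
            t[0] += x
            t[1] += y
            t[2] += 1
        self.centroids = {lab: (t[0] / t[2], t[1] / t[2]) for lab, t in totals.items()}
        return self

    def predict(self, point):
        px, py = point
        return min(
            self.centroids,
            key=lambda lab: (self.centroids[lab][0] - px) ** 2
            + (self.centroids[lab][1] - py) ** 2,
        )


class ShardedEnsemble:
    """Hypothetical sharded trainer: one partial model per data shard."""

    def __init__(self, num_shards):
        self.num_shards = num_shards
        self.shards = [[] for _ in range(num_shards)]
        self.models = [None] * num_shards
        self.retrain_count = 0  # how many partial models we have had to train

    def _shard_of(self, record_id):
        return record_id % self.num_shards  # assumed routing rule

    def _retrain(self, index):
        rows = self.shards[index]
        self.models[index] = CentroidModel().fit(rows) if rows else None
        self.retrain_count += 1

    def fit(self, rows):
        for row in rows:
            self.shards[self._shard_of(row[0])].append(row)
        for index in range(self.num_shards):
            self._retrain(index)

    def unlearn(self, record_id):
        """Drop one record and retrain only the shard that held it."""
        index = self._shard_of(record_id)
        self.shards[index] = [r for r in self.shards[index] if r[0] != record_id]
        self._retrain(index)

    def predict(self, point):
        """Combine the partial models by majority vote."""
        votes = Counter(m.predict(point) for m in self.models if m is not None)
        return votes.most_common(1)[0][0]


# Each record is (record_id, (x, y), label).
rows = [
    (0, (0.0, 0.0), "a"), (1, (0.2, 0.1), "a"), (2, (0.1, 0.3), "a"),
    (3, (5.0, 5.0), "b"), (4, (5.2, 4.9), "b"), (5, (4.8, 5.1), "b"),
]
ensemble = ShardedEnsemble(num_shards=3)
ensemble.fit(rows)
ensemble.unlearn(4)  # only the shard containing record 4 is retrained
print(ensemble.retrain_count, ensemble.predict((5.0, 5.0)))
```

Because the affected shard is retrained from scratch on its remaining records, the result matches what you would get by training the same sharded setup without record 4 in the first place. The tradeoff is that each partial model sees less data, which can hurt accuracy.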

Approximate unlearning methods are more common. These might include incremental learning, which builds on top of the existing model to adjust its output and ‘unlearn’ data. This is most effective for small updates and removals, and is a part of the ongoing fine-tuning of models. Gradient-based methods are similar to reverse nearest neighbors above, in that they attempt to compensate for removed data points, in this case by reversing gradient updates applied during training. These can be accurate, but are often computationally expensive and struggle with larger models.
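As a rough illustration of reversing gradient updates, the sketch below fits a one-parameter model (y ≈ w * x) with a single pass of SGD, logs the weight change each record caused, and then 'unlearns' a record by subtracting its logged change. The function names, the toy data and the learning rate are all assumptions made for this example, and it is not a production technique.

```python
def sgd_train(records, lr=0.01, skip_id=None):
    """One pass of SGD fitting y ≈ w * x, logging each record's weight update."""
    w = 0.0
    update_log = {}  # record_id -> change in w caused by that record's step
    for record_id, x, y in records:
        if record_id == skip_id:
            continue
        grad = (w * x - y) * x  # derivative of 0.5 * (w*x - y)**2 w.r.t. w
        step = -lr * grad
        w += step
        update_log[record_id] = step
    return w, update_log


def unlearn(w, update_log, record_id):
    """Approximate unlearning: subtract the logged update for one record."""
    return w - update_log.pop(record_id)


# Each record is (record_id, x, y); record 2 is the outlier we want to forget.
records = [
    (0, 1.0, 2.0), (1, 2.0, 4.0), (2, 1.0, 10.0),
    (3, 1.0, 2.0), (4, 2.0, 4.0), (5, 3.0, 6.0),
]

w_original, log = sgd_train(records)
w_unlearned = unlearn(w_original, log, record_id=2)
w_retrained, _ = sgd_train(records, skip_id=2)  # the expensive baseline

print("gap before unlearning:", abs(w_original - w_retrained))
print("gap after unlearning: ", abs(w_unlearned - w_retrained))
```

The rollback lands closer to the retrained weight than the original model does, but not exactly on it: every update made after record 2 was computed from a weight that record 2 had already nudged, and those knock-on effects are not undone. That residual error grows as more updates follow the forgotten record, which hints at why this kind of method struggles with long training runs, and storing per-record updates quickly becomes expensive for large models.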

There are other techniques we won’t cover here, but generally they offer some tradeoff between computational cost, accuracy, and how well they’re able to scale to large models.

Machine unlearning is an increasingly important topic

‘Mistakes’ in training data could become more costly

Machine unlearning is likely to be a hot topic over the next few years, especially as LLMs become increasingly complex and expensive to train. There’s an increasing risk that regulators or judges may ask creators of large models to expunge specific bits of data from their AI, whether due to licensing issues or copyright infringement. GDPR or ‘right to be forgotten’ legislation already exists in some countries worldwide. The likes of OpenAI have already been caught in significant controversy over using unlicensed training data from the New York Times, and the increased use of licensed user-generated content has the potential to cause ongoing issues around content ownership (as Stack Overflow has already found out). OpenAI, like many others, has also been embroiled in controversies stemming from the use of copyrighted online artwork in training its models, spawning a whole new debate about novel modifications.

Unlearning is a developing field

As the AI landscape slows down from the frantic rate of progress during 2023, regulators are starting to catch up on the issues around training AIs. The race for training data is quickly turning the internet into an increasingly siloed, divided place, as demonstrated by Google’s recent exclusivity deal with Reddit. Whether the courts and regulators ever draw a hard enough line to force retraining and removal of data from models past a surface level, we’ll have to see. But privacy implications aside, machine unlearning promises to be a tricky but useful technique, not just for forgetting data from models, but for correcting errors in their training data down the line.